Enterprise AI teams face a fundamental paradox. The more specialized your domain, the more valuable AI becomes, yet the harder it is to build. Healthcare organizations sit on vast imaging archives that could transform diagnostics, but expert radiologist annotations cost $100-500 per image. Manufacturing plants generate terabytes of sensor data daily, but identifying and labeling defects requires years of domain expertise. Traditional supervised learning demands these expensive labels at scale, creating bottlenecks that delay AI initiatives by months or years.

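Self-supervised learning removes most of this dependency: models learn from unlabeled data first and need only a small labeled set for task-specific fine-tuning.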

The supervised learning paradigm that powered the last decade of AI breakthroughs carries hidden costs that scale poorly for enterprises. Consider a mid-sized healthcare provider building a diagnostic imaging system. Training a traditional convolutional neural network requires 50,000-100,000 labeled examples for production-grade accuracy. At $200 per expert annotation, that's $10-20 million before writing a single line of code.
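The arithmetic behind that estimate is easy to reproduce. The sketch below simply multiplies the example counts and the $200 per-label figure quoted above; the function name `annotation_cost` is a hypothetical helper for illustration, not part of any library.

```python
def annotation_cost(num_examples: int, cost_per_label: float) -> float:
    """Total labeling cost in dollars for a fully supervised dataset."""
    return num_examples * cost_per_label


# Figures taken from the paragraph above: 50,000-100,000 examples at $200 each.
low = annotation_cost(50_000, 200.0)
high = annotation_cost(100_000, 200.0)
print(f"${low:,.0f} to ${high:,.0f}")  # $10,000,000 to $20,000,000
```

Swapping in your own per-label cost and dataset size gives a quick first-order budget check before committing to an annotation program.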

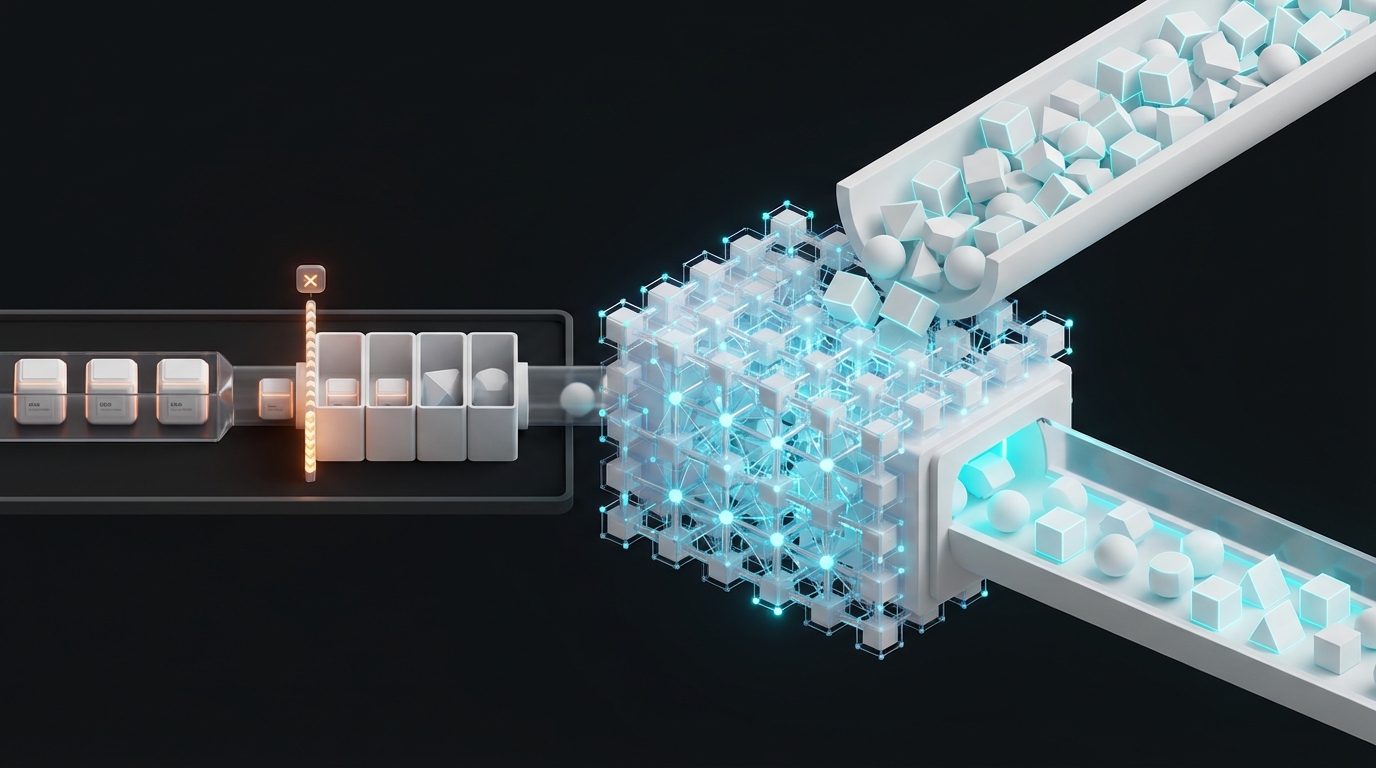

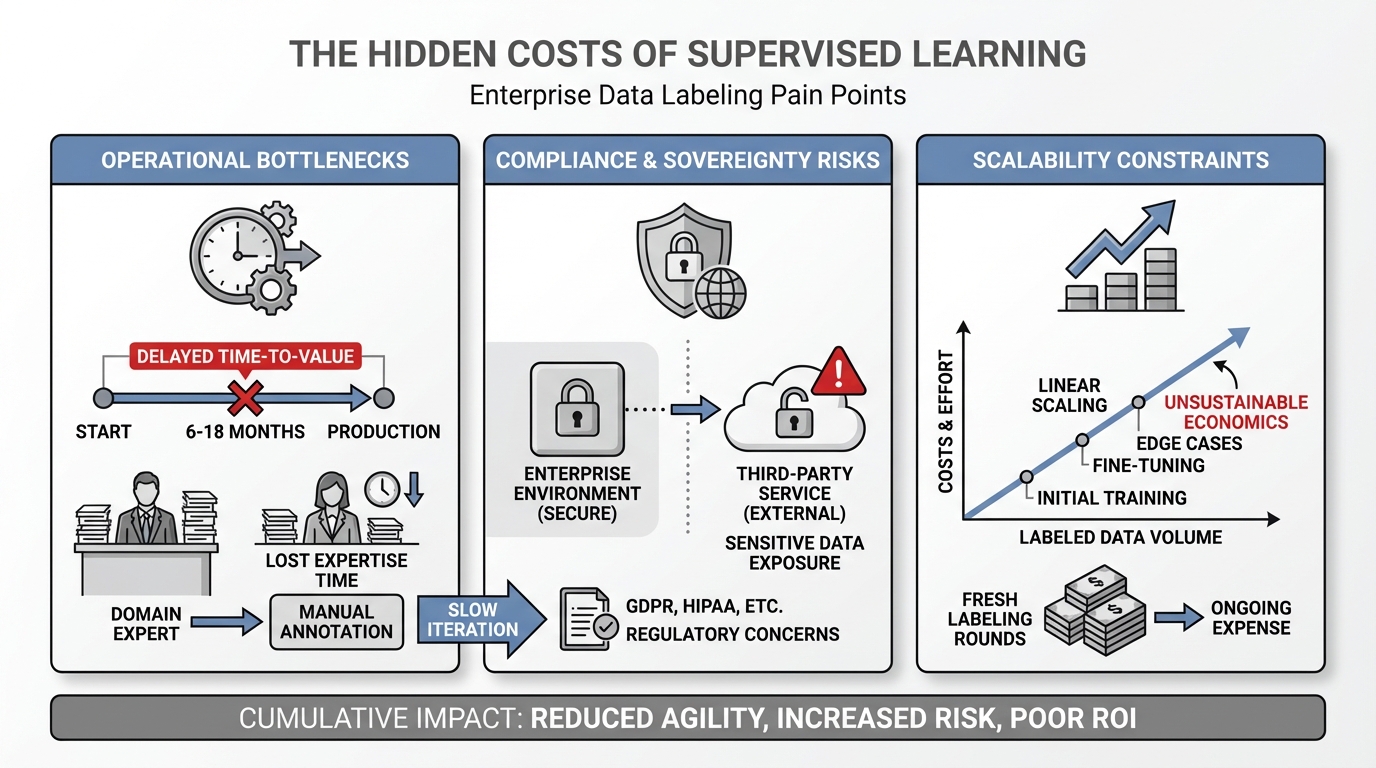

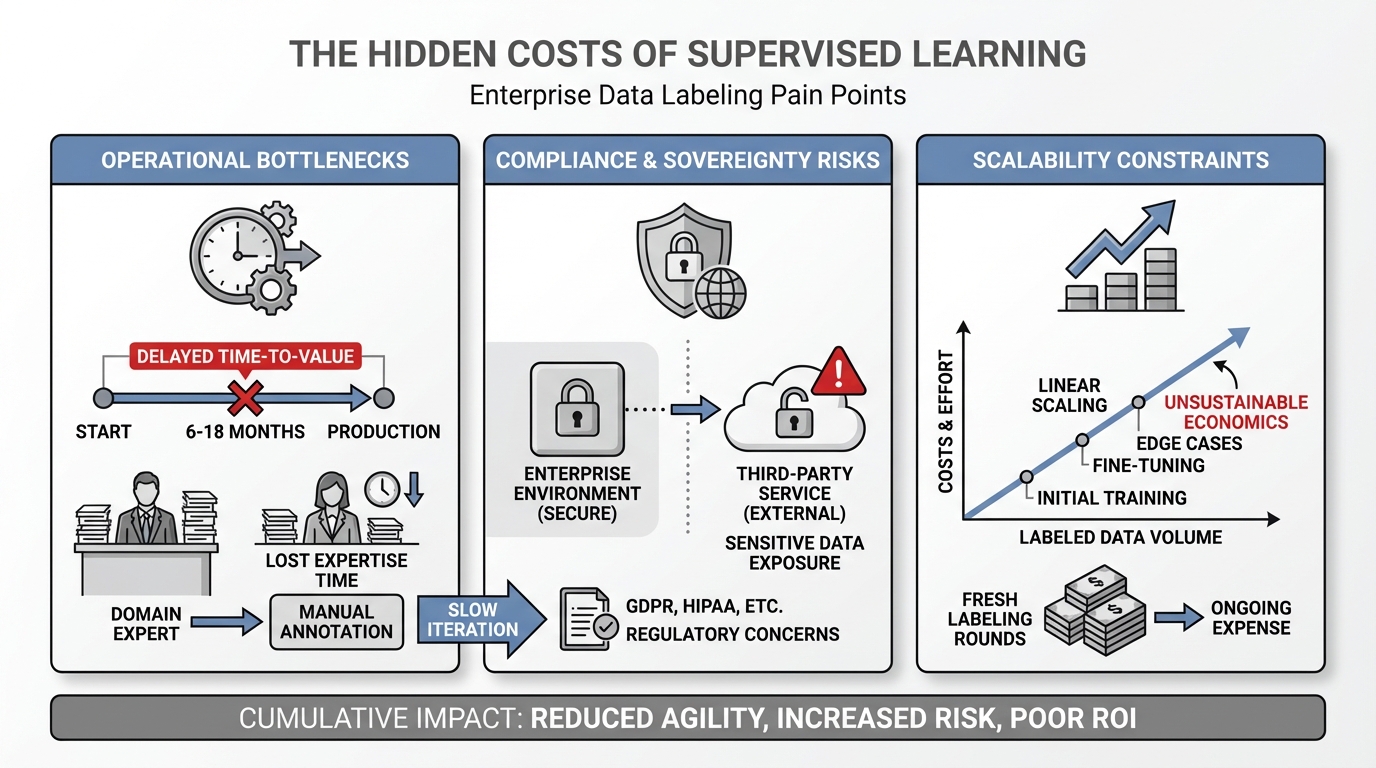

The financial burden is only part of the problem. Data labeling creates three critical pain points for regulated enterprises:

Operational bottlenecks: Manual annotation pipelines can take 6-18 months, extending time-to-production and reducing competitive advantage. Domain experts spend valuable time labeling data instead of applying their expertise to higher-value activities.

Compliance and sovereignty risks: Outsourcing annotation to third-party services means sensitive data leaves your environment. For organizations in healthcare, finance, or government sectors, this creates unacceptable regulatory exposure. Even anonymized data can pose compliance challenges under GDPR, HIPAA, or industry-specific frameworks.

Scalability constraints: Labeling costs scale roughly linearly with the amount of labeled data a supervised model needs. Adding new capabilities, fine-tuning for edge cases, or adapting to distribution shift all demand fresh rounds of expensive labeling. This creates ongoing costs that make AI economics unfavorable for all but the largest budgets.

These challenges are particularly acute in computer vision applications where visual inspection requires specialized expertise. A manufacturing quality control system might need to distinguish between dozens of defect types, each requiring expert labeling across thousands of examples.

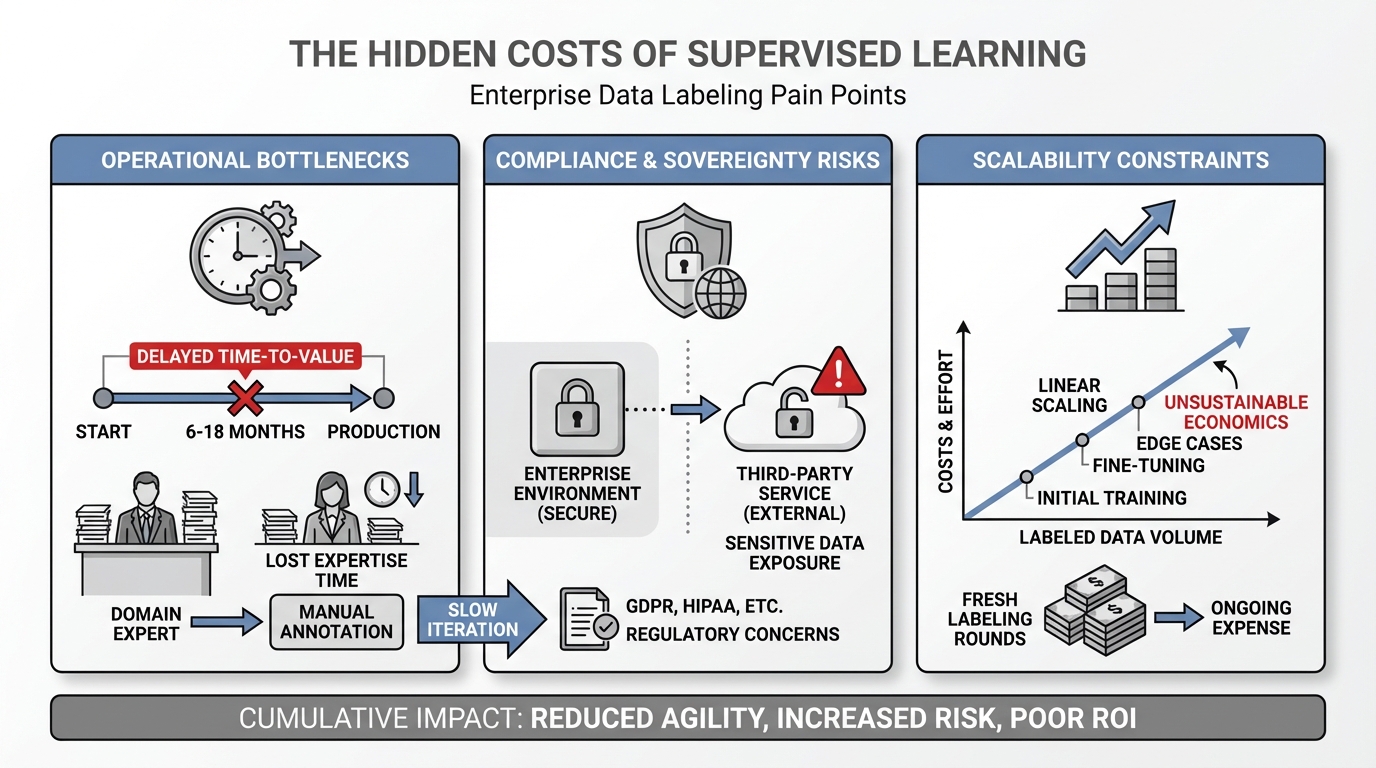

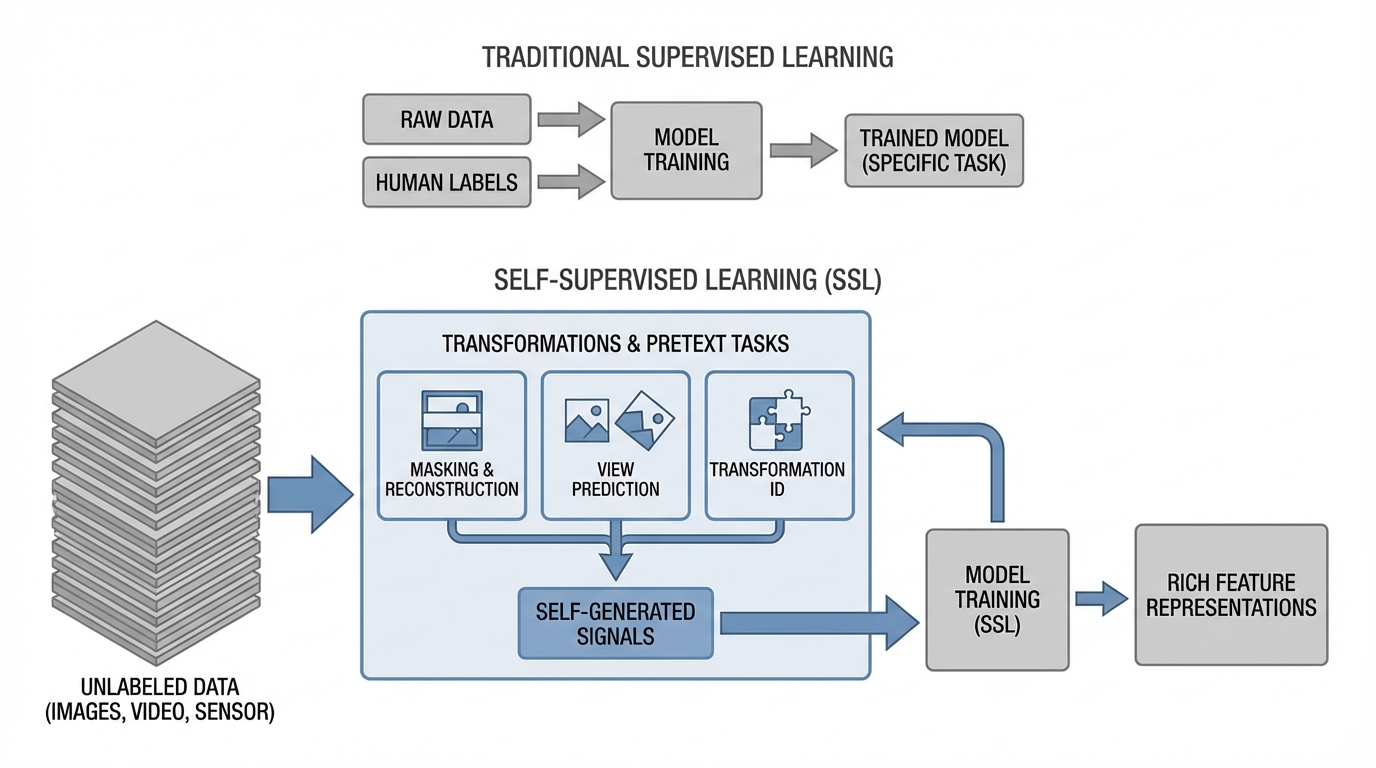

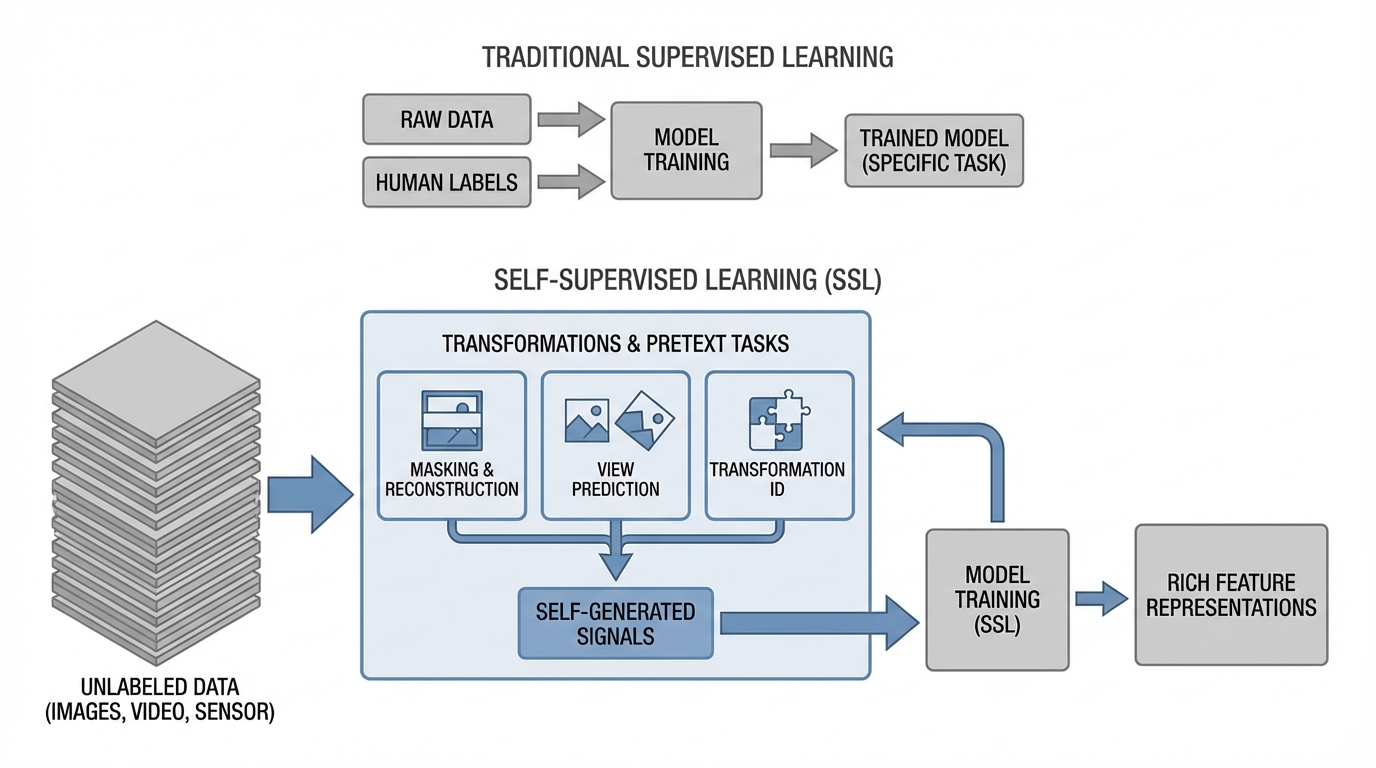

Self-supervised learning fundamentally reimagines the training process by generating supervisory signals from the data itself, rather than relying on human annotations. The methodology creates pretext tasks that force models to learn meaningful representations of underlying patterns and structures.

The technical approach typically follows this pattern: The system takes unlabeled data (images, video, sensor readings) and creates multiple views or transformations of the same input. The model learns by solving tasks like predicting one view from another, reconstructing masked portions of the input, or identifying which transformations were applied. Through this process, the network develops rich feature representations that capture semantic meaning without ever seeing a human label.
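To make the "multiple views" idea concrete, here is a minimal sketch that produces two randomly transformed versions of one image. It assumes NumPy and a float image in the range [0, 1]; the specific transformations (random crop, horizontal flip, brightness jitter), the crop size, and the function name `random_view` are illustrative choices, not a prescribed recipe.

```python
import numpy as np


def random_view(image: np.ndarray, rng: np.random.Generator, crop: int = 24) -> np.ndarray:
    """Return a randomly cropped, possibly flipped, brightness-jittered copy."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    view = image[top:top + crop, left:left + crop].copy()
    if rng.random() < 0.5:
        view = view[:, ::-1]              # horizontal flip
    scale = rng.uniform(0.8, 1.2)         # brightness jitter
    return np.clip(view * scale, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))           # random pixels standing in for a real image
view_a = random_view(image, rng)
view_b = random_view(image, rng)
print(view_a.shape, view_b.shape)         # (24, 24, 3) (24, 24, 3)
```

A pretext task would then ask the model to relate `view_a` and `view_b`, for example by producing similar embeddings for both.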

Vision transformers have emerged as the dominant architecture for self-supervised learning in computer vision. Unlike convolutional neural networks that process images through fixed hierarchical filters, transformers treat images as sequences of patches and learn relationships between them through attention mechanisms. This architecture naturally suits self-supervised objectives.
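Treating an image as a sequence of patches is mostly a reshaping exercise. The sketch below, which assumes NumPy and an image whose height and width are divisible by the patch size, turns a 224x224 RGB image into flattened 16x16 patches; those sizes are common conventions used here for illustration, and `patchify` is a hypothetical helper name.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    gh, gw = h // patch, w // patch
    x = image.reshape(gh, patch, gw, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)        # (grid_h, grid_w, patch, patch, C)
    return x.reshape(gh * gw, patch * patch * c)


image = np.zeros((224, 224, 3), dtype=np.float32)
tokens = patchify(image, 16)
print(tokens.shape)  # (196, 768)
```

Each row of `tokens` is one patch; a transformer would project these rows to embeddings and let attention relate them to one another.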

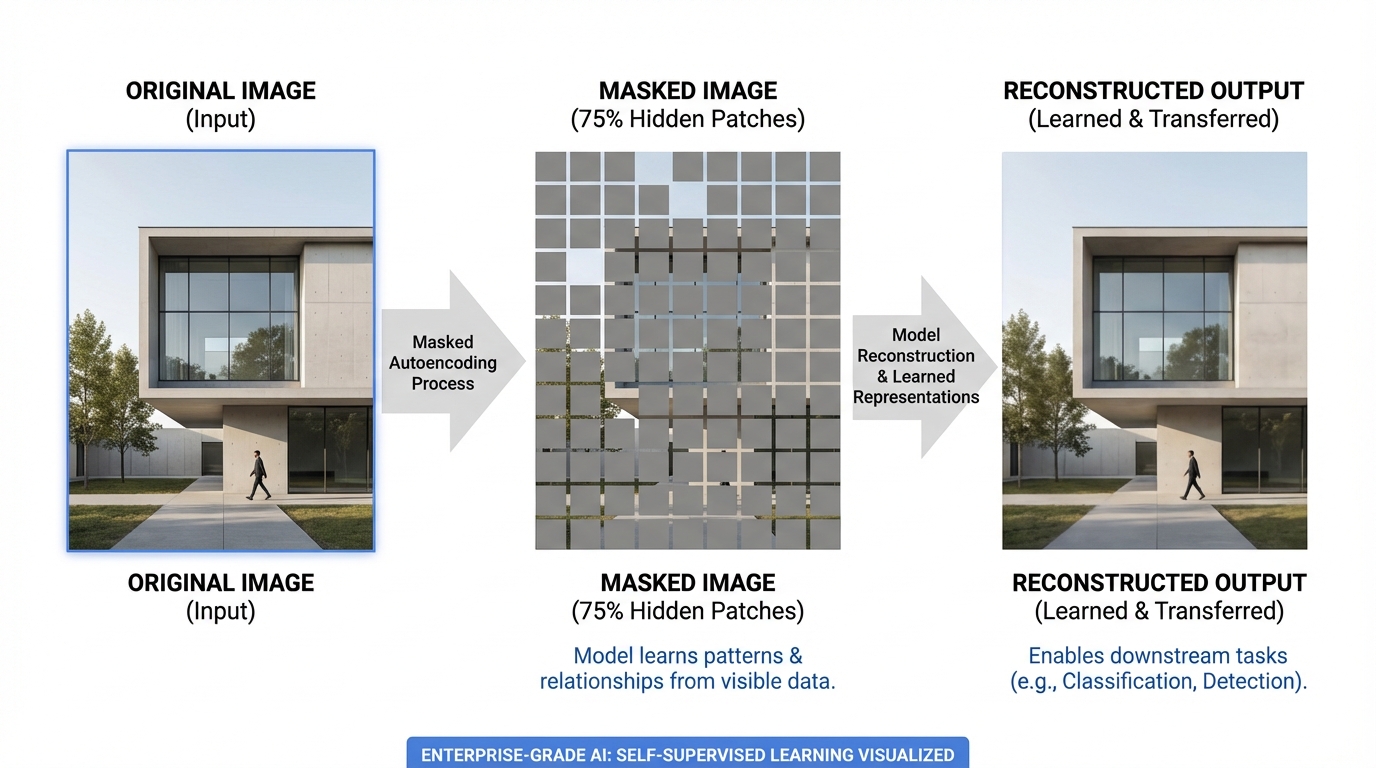

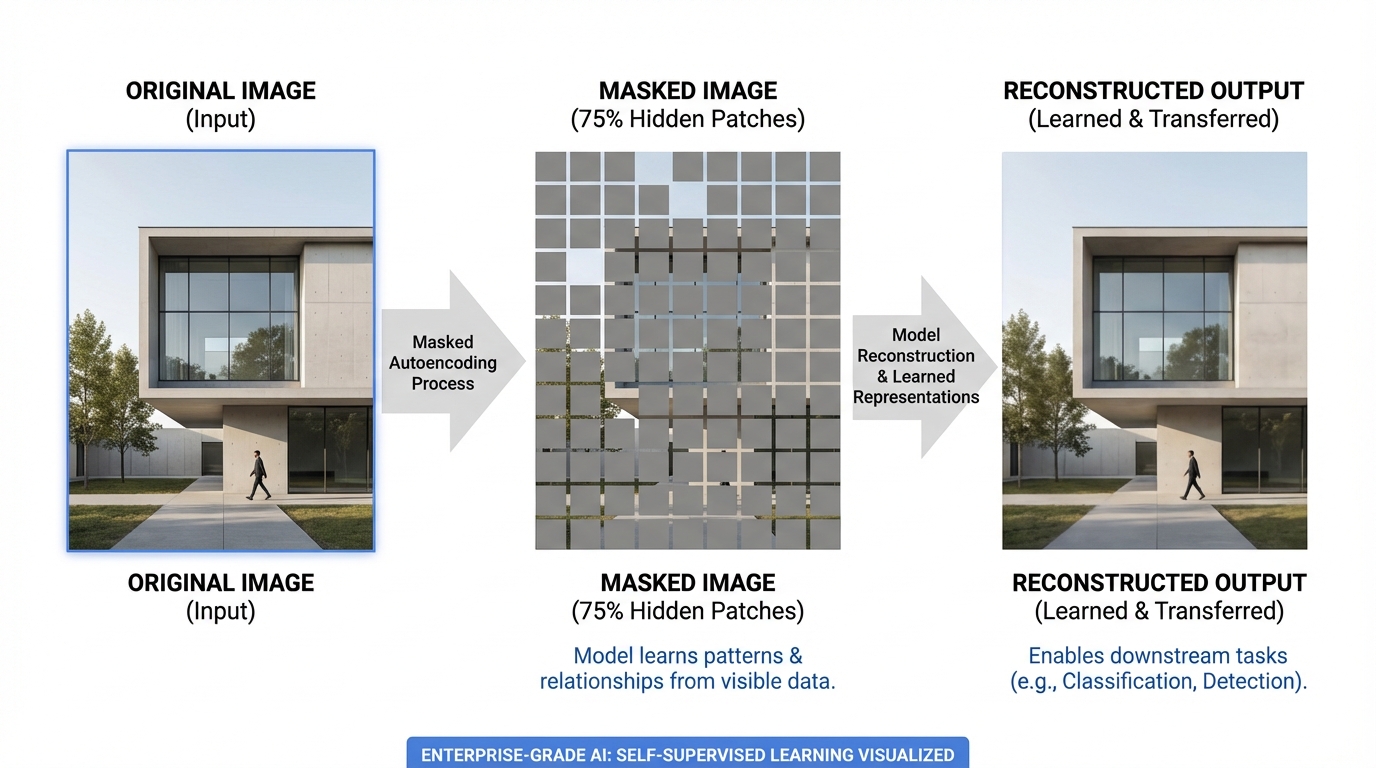

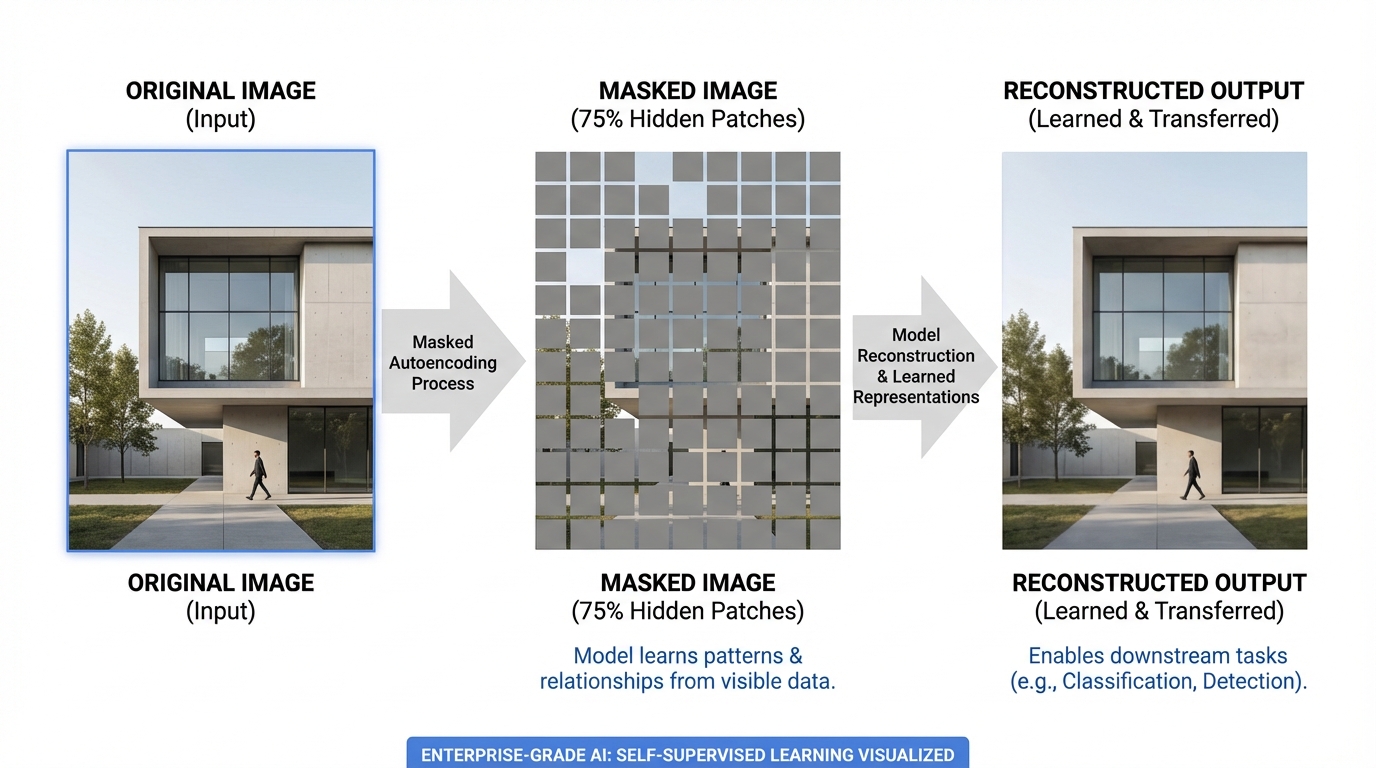

Consider a concrete example. A masked autoencoding approach might randomly hide 75% of image patches and train the model to reconstruct the missing portions. To succeed, the network must learn what objects typically look like, how textures behave, and what spatial relationships make sense. These learned representations transfer remarkably well to downstream tasks like classification, detection, or segmentation, often matching or exceeding supervised learning performance.
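The masking step in that example can be sketched in a few lines. The code below assumes NumPy and a sequence of 196 flattened patches; it picks a random 75% of patches to hide and defines a reconstruction loss computed on the hidden patches only. The encoder and decoder are omitted, and the helper names `random_mask` and `masked_mse` are hypothetical.

```python
import numpy as np


def random_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator):
    """Return sorted indices of visible patches and of masked patches."""
    num_keep = int(round(num_patches * (1.0 - mask_ratio)))
    perm = rng.permutation(num_patches)
    return np.sort(perm[:num_keep]), np.sort(perm[num_keep:])


def masked_mse(pred: np.ndarray, target: np.ndarray, mask_idx: np.ndarray) -> float:
    """Mean squared error over the masked patches only."""
    diff = pred[mask_idx] - target[mask_idx]
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
patches = rng.random((196, 768))           # stand-in for a patchified image
keep_idx, mask_idx = random_mask(196, 0.75, rng)
visible = patches[keep_idx]                # the only patches the encoder would see
print(visible.shape, len(mask_idx))        # (49, 768) 147
```

In a real training loop, a model would receive `visible`, predict all patches, and be optimized with `masked_mse` against the original pixels.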

Other self-supervised methods build on contrastive learning rather than reconstruction. The model learns to pull together representations of the same image under different transformations while pushing apart representations of different images. This creates embedding spaces where semantically similar inputs cluster together, even though the model never saw explicit category labels.
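The pull-together, push-apart objective can be written as an InfoNCE-style loss. The sketch below is one common formulation, not necessarily the one any particular system uses: it assumes NumPy, takes two batches of embeddings where row i of each batch comes from the same image, and uses a temperature of 0.1 as an arbitrary illustrative value.

```python
import numpy as np


def info_nce(z_a: np.ndarray, z_b: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE loss: row i of z_a should match row i of z_b and no other row."""
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature                    # scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))             # positives sit on the diagonal


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))
aligned = info_nce(z, z)                                  # views agree perfectly
unrelated = info_nce(z, rng.normal(size=(8, 16)))         # views carry no shared signal
print(aligned < unrelated)                                # True
```

Minimizing this loss rewards embeddings in which the two views of one image are closer to each other than to views of any other image in the batch.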

Recent methods like MAE (Masked Autoencoders) and DINO (self-distillation with no labels) have demonstrated that models can learn semantically meaningful visual concepts purely from unlabeled data. A model trained on millions of unlabeled medical images can learn to distinguish tissue types, identify anatomical structures, and recognize pathological patterns without seeing a single diagnosis.

While reducing training costs by 60% makes compelling budget presentations, the strategic value of self-supervised learning extends into architectural and operational advantages that reshape AI economics.

Data sovereignty becomes achievable at scale. Organizations can extract maximum value from proprietary unlabeled data entirely within their own infrastructure. A regional hospital network can train powerful diagnostic models on their complete imaging archive without sending data to external annotation services or cloud providers. This keeps sensitive information within controlled environments while still accessing state-of-the-art capabilities.

The methodology democratizes advanced AI for mid-market enterprises. Organizations without hyperscaler budgets can now deploy high-performance computer vision systems using their existing data assets. Because optimized architectures have lowered computational requirements, models can train effectively on modest GPU clusters rather than requiring massive distributed training infrastructure.

Adaptation speed increases dramatically. When business conditions change or new edge cases emerge, teams can retrain models using fresh unlabeled data without waiting for annotation cycles. A manufacturing plant responding to new product lines can quickly adapt quality control systems using production data from the first few hundred units.

Model performance often exceeds supervised baselines because self-supervised approaches can leverage vastly larger datasets. Where supervised learning might use 50,000 labeled examples, self-supervised methods can train on 5 million unlabeled images from the same domain, learning more nuanced and robust representations.

Healthcare organizations are deploying self-supervised learning for diagnostic imaging where expert annotations are prohibitively expensive. A radiological AI system can pre-train on millions of unlabeled X-rays, CT scans, and MRIs to learn anatomical structures and tissue characteristics. Fine-tuning for specific diagnostic tasks then requires only hundreds of labeled examples rather than tens of thousands, reducing both cost and time-to-deployment.

Manufacturing quality control benefits from self-supervised approaches trained on normal production data. The model learns what "good" looks like across thousands of unlabeled examples, then identifies anomalies without requiring extensive defect libraries. This addresses the common challenge where defects are rare and labeling requires specialized inspection expertise.
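One simple way to turn "learning what good looks like" into an anomaly score is to measure how far a new sample's embedding sits from embeddings of known-good samples. The sketch below assumes NumPy and uses synthetic Gaussian vectors in place of embeddings from a pre-trained encoder; the k-nearest-neighbor scoring rule and the helper name `knn_score` are illustrative assumptions rather than a specific production method.

```python
import numpy as np


def knn_score(query: np.ndarray, reference: np.ndarray, k: int = 5) -> np.ndarray:
    """Mean distance from each query embedding to its k nearest reference embeddings."""
    dists = np.linalg.norm(query[:, None, :] - reference[None, :, :], axis=2)
    return np.sort(dists, axis=1)[:, :k].mean(axis=1)


rng = np.random.default_rng(0)
# Synthetic stand-ins: real embeddings would come from a self-supervised encoder.
normal_train = rng.normal(0.0, 1.0, size=(500, 32))   # unlabeled "good" production samples
normal_test = rng.normal(0.0, 1.0, size=(20, 32))     # new good samples
defect_test = rng.normal(4.0, 1.0, size=(20, 32))     # shifted cluster playing the role of defects

normal_scores = knn_score(normal_test, normal_train)
defect_scores = knn_score(defect_test, normal_train)
print(defect_scores.min() > normal_scores.max())      # True
```

In practice the separation is rarely this clean, so a threshold is usually chosen from the score distribution of held-out normal samples.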

Financial services apply self-supervised learning to document processing and fraud detection. Models can learn document structure and typical transaction patterns from vast unlabeled datasets, then transfer this knowledge to specialized detection tasks with minimal labeled examples. This maintains data within regulated environments while building sophisticated analysis capabilities.

Environmental monitoring and infrastructure inspection leverage self-supervised vision transformers for real-time analysis of satellite imagery, drone footage, and sensor networks. Systems can identify changes, anomalies, or patterns of interest by learning normal environmental conditions from unlabeled temporal data.

Successful deployment requires addressing several technical and organizational factors. Infrastructure needs shift from label management platforms to computational resources for pre-training. While self-supervised learning reduces labeling costs, initial model training requires GPU resources, though optimized architectures have reduced these requirements significantly in 2025.

Data quality matters differently than in supervised settings. Rather than needing perfect labels, teams need large volumes of diverse, representative unlabeled data. Data engineering focuses on collection, storage, and preprocessing pipelines rather than annotation workflows.

Model selection depends on your specific domain and deployment constraints. Vision transformers offer superior performance but require more computational resources than optimized convolutional architectures. Edge deployment scenarios may favor smaller models or hybrid approaches that balance capability with resource constraints.

Teams should plan for a two-stage process: self-supervised pre-training on large unlabeled datasets, followed by supervised fine-tuning on smaller labeled sets for specific tasks. This hybrid approach delivers the cost benefits of self-supervised learning while maintaining task-specific performance.

Monitoring and validation require new approaches. Without explicit labels during training, teams need methods to evaluate whether models are learning meaningful representations. Techniques like linear probe evaluation and transfer learning benchmarks help assess pre-trained model quality before investing in fine-tuning.
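A linear probe freezes the pre-trained encoder and fits only a linear classifier on its features; high probe accuracy suggests the representations already separate the classes. The sketch below assumes NumPy, uses synthetic clustered vectors in place of frozen features, and fits a ridge-regularized least-squares classifier as a simple stand-in for the logistic regression that is more commonly used. The names `fit_linear_probe` and `probe_accuracy` are hypothetical.

```python
import numpy as np


def fit_linear_probe(features: np.ndarray, labels: np.ndarray, num_classes: int, l2: float = 1e-3) -> np.ndarray:
    """Fit a linear classifier on frozen features via ridge-regularized least squares."""
    x = np.hstack([features, np.ones((len(features), 1))])   # append bias column
    y = np.eye(num_classes)[labels]                          # one-hot targets
    return np.linalg.solve(x.T @ x + l2 * np.eye(x.shape[1]), x.T @ y)


def probe_accuracy(weights: np.ndarray, features: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of samples whose highest-scoring class matches the label."""
    x = np.hstack([features, np.ones((len(features), 1))])
    return float(np.mean(np.argmax(x @ weights, axis=1) == labels))


rng = np.random.default_rng(0)
centers = rng.normal(0.0, 3.0, size=(3, 16))                 # synthetic class centers
labels_train = rng.integers(0, 3, size=300)
labels_test = rng.integers(0, 3, size=100)
feats_train = centers[labels_train] + rng.normal(size=(300, 16))
feats_test = centers[labels_test] + rng.normal(size=(100, 16))

weights = fit_linear_probe(feats_train, labels_train, num_classes=3)
acc = probe_accuracy(weights, feats_test, labels_test)
print(f"linear probe accuracy: {acc:.2f}")
```

Comparing probe accuracy across pre-training checkpoints gives a cheap signal of whether representations are improving, using only a small labeled evaluation set.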

Implementing self-supervised learning within sovereignty requirements means deploying complete ML infrastructure inside your own environment. This includes not just training frameworks but the entire ecosystem of tools for data processing, experimentation, model versioning, and deployment.

Shakudo's platform addresses this by providing 170+ pre-integrated AI tools including PyTorch, TensorFlow, and specialized computer vision libraries, all deployable within your VPC. Teams can experiment with emerging self-supervised architectures like MAE or DINO without building integration layers or compromising data sovereignty. The platform eliminates vendor lock-in while ensuring proprietary unlabeled data never leaves organizational control.

Self-supervised learning represents more than a cost optimization technique. It fundamentally changes what's possible for enterprise AI by removing the labeled data dependency that has constrained deployment for years. Organizations can now extract value from their complete data assets, not just the small fraction they can afford to label.

The convergence of optimized architectures, lower training costs, and proven methodologies makes 2025 the inflection point for enterprise adoption. Teams that establish self-supervised capabilities now will build sustainable competitive advantages in their domains while maintaining the data sovereignty that regulated industries demand.

For organizations ready to move beyond supervised learning constraints, the path forward starts with assessing your unlabeled data assets and identifying high-value computer vision applications where annotation costs currently block progress. Similar challenges around data sovereignty and model governance are explored in our guide to AI governance frameworks for 2025, while teams needing to train on distributed, privacy-sensitive datasets should read our piece on when enterprises need federated learning, a complementary approach. The technical foundations are mature, the tools are accessible, and the business case is clear.
