The 2025 enterprise AI market reveals a decisive shift: safety, reliability, and regulatory compliance have emerged as primary criteria for AI vendor selection, overtaking raw model performance as the critical differentiator. This transformation comes at a pivotal moment. Enterprise AI spending reached $37 billion in 2025, up from $11.5 billion in 2024, representing a 3.2x year-over-year increase. Yet despite this massive investment surge, 74% of companies had yet to see tangible value from their AI initiatives in 2024, with nearly two-thirds of organizations remaining stuck in pilot stage as of mid-2025.

The stakes are extraordinarily high. Organizations that cannot scale AI effectively face a 75% risk of business failure, according to recent industry analysis. Meanwhile, 92% of AI vendors claim broad data usage rights, far exceeding the market average of 63%, creating significant intellectual property and data governance concerns that most enterprises discover too late in the procurement process.

For engineering, data, and IT leaders navigating this landscape, vendor selection has evolved from a technical evaluation into a strategic risk calculation. The questions you ask before signing a contract will determine whether your AI initiatives deliver transformative value or join the 90% of pilots that never reach production.

Before diving into specific evaluation questions, it's essential to understand the complete cost structure of enterprise AI vendor relationships. The advertised subscription price represents only a fraction of true total cost of ownership. Enterprise implementations typically cost 3-5 times the advertised subscription price when accounting for integration, customization, infrastructure scaling, and operational overhead required to maintain AI systems in production.
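To make the multiplier concrete, the arithmetic can be sketched as follows. The subscription figure is a hypothetical placeholder; only the 3-5x range comes from the paragraph above.

```python
def tco_range(annual_subscription: float, low: float = 3.0, high: float = 5.0) -> tuple[float, float]:
    """Return the (low, high) estimate of total cost of ownership."""
    return annual_subscription * low, annual_subscription * high


# Hypothetical example: a $200,000/year advertised subscription.
low, high = tco_range(200_000)
print(f"Estimated TCO: ${low:,.0f} to ${high:,.0f} per year")
# Estimated TCO: $600,000 to $1,000,000 per year
```

Running this kind of estimate for each shortlisted vendor before negotiation keeps the comparison anchored on full cost rather than list price.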

Ford offers a cautionary example: two years and several million dollars into the effort, a project that should have revolutionized its maintenance approach remained stuck in what industry experts call 'pilot purgatory', a common fate for 70-90% of enterprise AI initiatives. This failure pattern repeats across industries, and poor vendor selection amplifies every underlying challenge: data integration becomes impossible, deployment timelines stretch from months to years, and switching costs create de facto lock-in even when performance disappoints.

Only 22% of organizations have moved beyond experimentation to strategic AI deployment. The distinguishing factor between these successful deployments and failed pilots often traces back to vendor evaluation criteria established during initial selection.

The volume of data that enterprises need to manage continues to grow exponentially, while regulations around data locality, residency and sovereignty simultaneously continue to multiply across jurisdictions worldwide. Companies must be vigilant about keeping up with rapidly evolving national and regional policies around who can access specific data; how it's collected, processed and stored; and where it's accessed from or transferred to.

Demand specificity: which cloud regions, which data centers, which jurisdictions. Cloud-based AI platforms create immediate data sovereignty conflicts for regulated industries. Countries including India, China, and EU members are enforcing strict data localization requirements that make vendor location and deployment options critical decision factors.

Scrutinize the fine print. With 92% of AI vendors claiming broad data usage rights, understanding exactly what happens to your proprietary data, training inputs, and model outputs is non-negotiable. Ask explicitly: Will our data train your models? Can you access our prompts and responses? What happens to our data if we terminate the relationship?

Some laws set conditions around cross-border transfers, while others prohibit them altogether. For instance, in some jurisdictions, companies need to demonstrate a legal requirement to move the data, retain a local copy of the data for compliance reasons, or both. Other regulations govern whether companies can access data stored in a region, generate insights and then export those insights to HQ for further analysis or model training.

Reflecting this complexity, 71% of organizations cite cross-border data transfer compliance as their top regulatory challenge in 2025.

Financial institutions face intense regulatory scrutiny and run high-stakes AI applications. Demand evidence of SOC 2 reports, ISO 27001 certification, GDPR compliance, and industry-specific requirements (HIPAA compliance for healthcare, FedRAMP authorization for government). Banks, for example, must validate models, document assumptions and limitations, perform ongoing monitoring, conduct independent reviews, and maintain governance over third-party AI tools and vendors. Your vendor must support these requirements with documented audit trails, not promises.

Timelines vary: simple pilots with clean data and clear integration points can move to production in 3–6 months, while complex systems with multiple data sources and compliance requirements may take 9–18 months. Demand case studies from similar implementations in your industry. Ask about the longest deployment the vendor has experienced and what caused the delays.

The best performers follow a 14-month timeline from initial pilot to meaningful ROI. Any vendor promising significantly faster timelines without understanding your data infrastructure, integration requirements, and organizational readiness should raise red flags.

Integration complexity kills more AI projects than technical performance issues. 45% of teams cite data quality and pipeline consistency as their top production obstacle. Another 40% point to security and compliance challenges. Ask for detailed technical architecture reviews, API documentation, and integration examples with systems similar to yours.

McKinsey's 2025 State of AI survey shows just one-third of companies have managed enterprise-wide scaling. Vendors often excel at small pilots but lack the infrastructure, support model, or architectural design to support hundreds or thousands of users across multiple business units. Request architecture diagrams showing how the solution scales, performance benchmarks at different user volumes, and customer references who have successfully scaled beyond initial deployments.

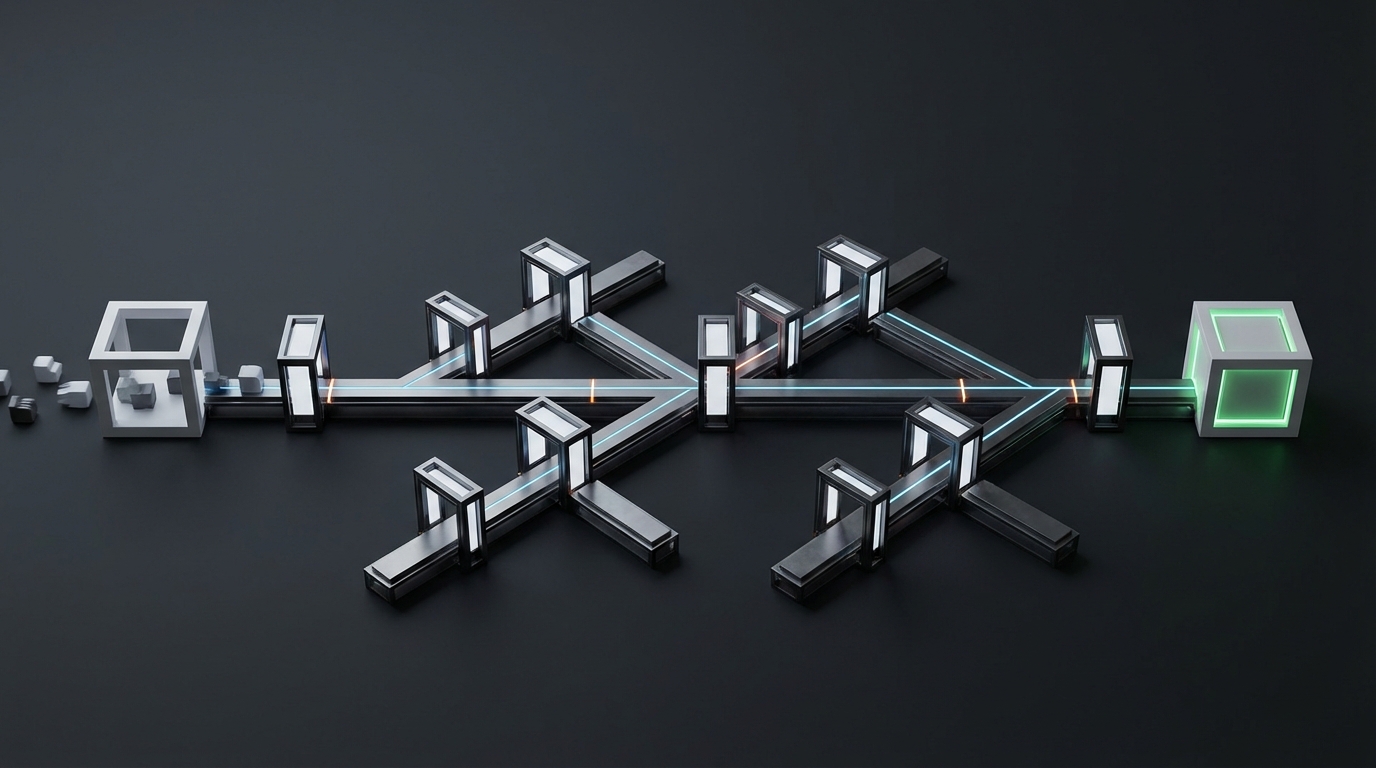

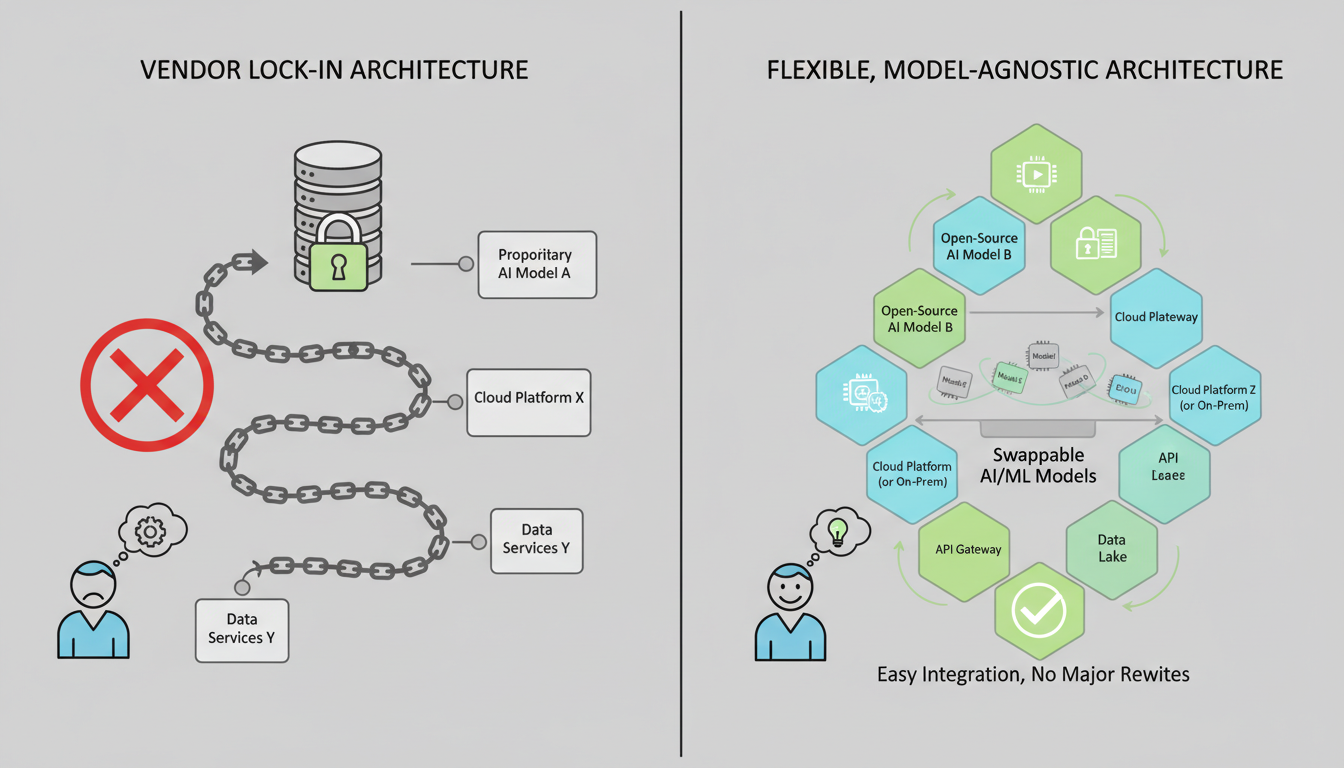

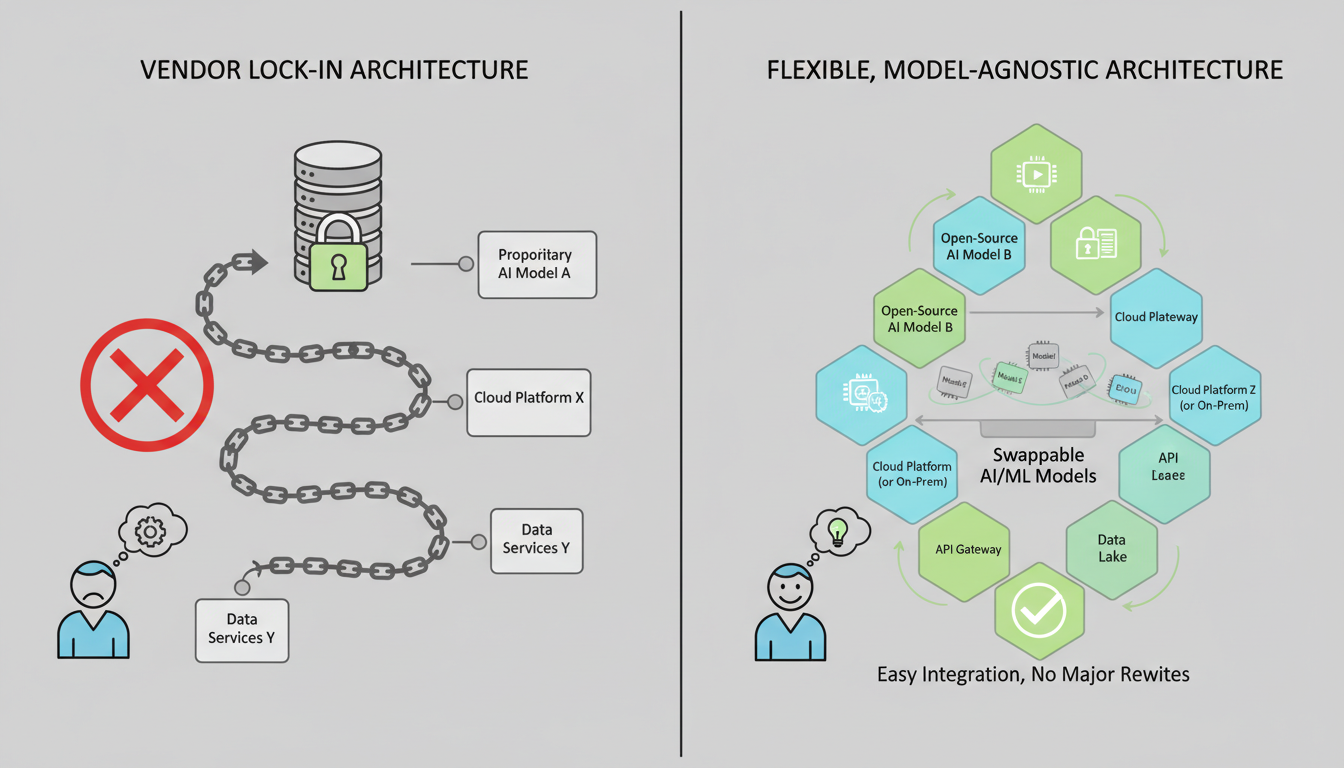

As enterprises scale their AI initiatives, one of the biggest architectural risks they face is vendor lock-in—being tied too tightly to a single model provider or cloud platform. In the rapidly evolving AI ecosystem, where new foundation models and APIs emerge almost weekly, this dependency can quickly limit innovation and flexibility. Teams that commit early to one ecosystem often find themselves unable to adopt newer, better, or more cost-effective models without rewriting large portions of their stack.

Demand contractual guarantees for data export in standard formats, model portability, and reasonable termination terms. Contracts should include provisions for data portability and code access to mitigate risks of lock-in.

AI model capabilities improve exponentially every 12 to 18 months, meaning today's best-in-class solution may become obsolete within months. The return of competitive open-weight models means enterprises can pair a proprietary default with targeted open-weight deployments to meet sensitivity, sovereignty, or cost objectives.

Vendors that lock you into proprietary models create strategic liability. For example, TrueFoundry's gateway supports any OpenAI-compatible model, so if you write your code against TrueFoundry's OpenAI-style API, you can switch between OpenAI, Azure OpenAI, Anthropic, or your own models with a configuration change—no code rewrite required. This architectural pattern should be your standard expectation.
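The configuration-driven pattern described above can be sketched roughly as below. This is not TrueFoundry's API or any vendor's SDK: the endpoint names, URLs, and model identifiers are hypothetical, and the only assumption about the wire format is the widely used OpenAI-style chat completions request (a `model` plus a list of `messages`). No network call is made.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEndpoint:
    base_url: str  # hypothetical gateway or provider URL
    model: str     # hypothetical model identifier


# Hypothetical configuration; in practice this would live in a config file.
ENDPOINTS = {
    "proprietary-default": ModelEndpoint("https://gateway.example.com/v1", "vendor-a-large"),
    "open-weight-onprem": ModelEndpoint("https://llm.internal.example.com/v1", "open-weight-8b"),
}


def build_chat_request(endpoint_name: str, prompt: str) -> dict:
    """Build an OpenAI-style chat request for whichever endpoint is configured."""
    ep = ENDPOINTS[endpoint_name]
    return {
        "url": f"{ep.base_url}/chat/completions",
        "json": {
            "model": ep.model,
            "messages": [{"role": "user", "content": prompt}],
        },
    }


# Switching providers is a one-string change; the calling code stays the same.
request = build_chat_request("open-weight-onprem", "Summarize this contract clause.")
print(request["url"])
```

When evaluating a vendor, a useful test is whether your application code could look like `build_chat_request`, with provider details confined to configuration.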

AI vendors are experimenting with pricing rates and models, creating cost uncertainty for enterprise CIOs deploying the technology. While many AI vendors have moved to hybrid pricing models that combine subscriptions with use- or outcome-based pricing, these strategies are not set in stone. In some cases, AI vendors are changing their pricing rates or models every few weeks.

Demand transparent, predictable pricing models with usage caps. CIOs should set budget limits when employees are working with use-based AI tools because API use can drive huge, unexpected costs. Negotiate contractual protections against mid-term price changes and understand exactly what triggers additional costs.
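A budget limit of the kind described above can be enforced in code as well as in the contract. The sketch below is a minimal in-memory guard; the cap and the per-token price are hypothetical values, and a real deployment would persist spend and reset it each billing period.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push spend past the configured cap."""


class UsageBudget:
    def __init__(self, monthly_cap_usd: float, price_per_1k_tokens_usd: float):
        self.monthly_cap_usd = monthly_cap_usd
        self.price_per_1k_tokens_usd = price_per_1k_tokens_usd
        self.spent_usd = 0.0

    def charge(self, tokens: int) -> float:
        """Record usage, refusing any call that would exceed the cap."""
        cost = tokens / 1000 * self.price_per_1k_tokens_usd
        if self.spent_usd + cost > self.monthly_cap_usd:
            raise BudgetExceeded(f"cap of ${self.monthly_cap_usd:.2f} would be exceeded")
        self.spent_usd += cost
        return self.spent_usd


# Hypothetical values: $100 monthly cap, $0.50 per 1,000 tokens.
budget = UsageBudget(monthly_cap_usd=100.0, price_per_1k_tokens_usd=0.5)
budget.charge(150_000)      # $75 spent, within the cap
try:
    budget.charge(100_000)  # would bring the total to $125
except BudgetExceeded as err:
    print(f"Blocked: {err}")
```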

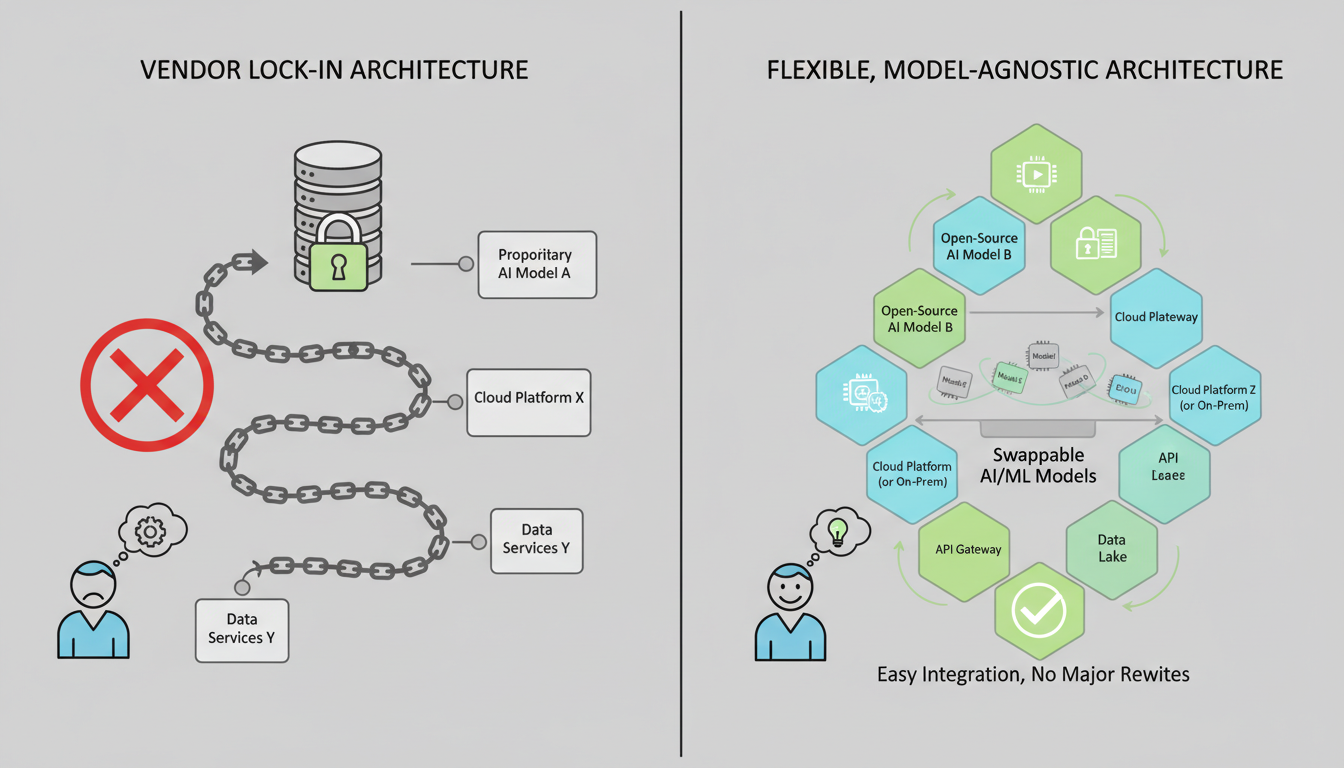

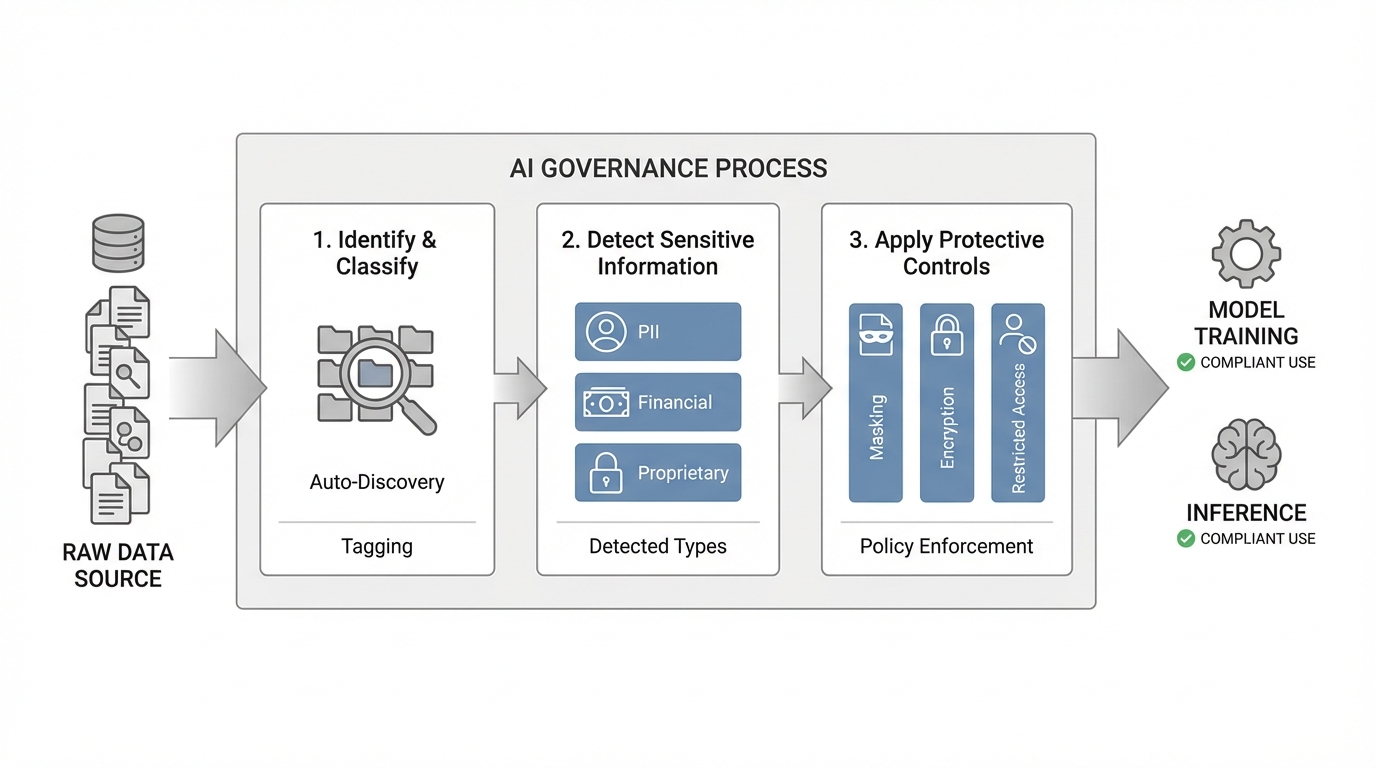

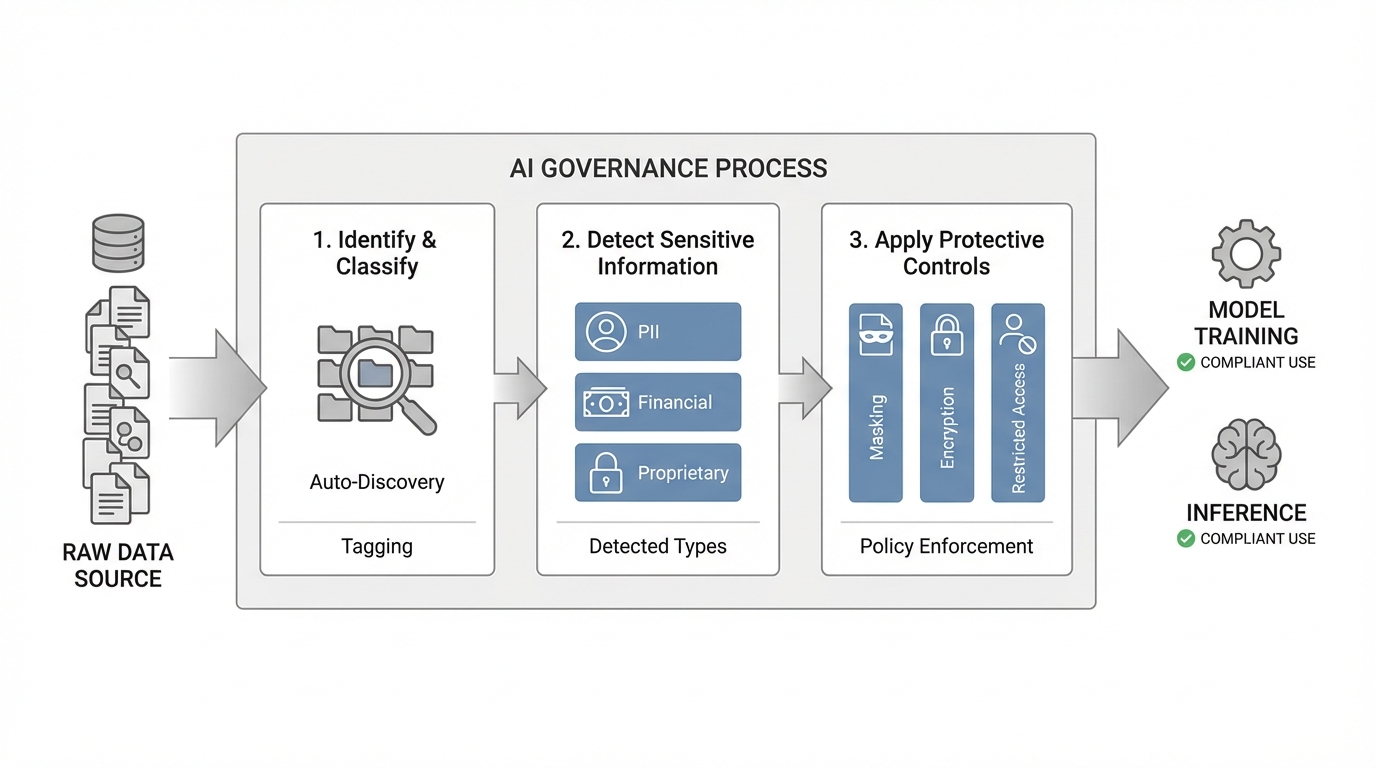

AI governance tools automatically identify, classify, and tag sensitive data before it is used in model training or inference. They detect personal, financial, or proprietary information and apply protective controls such as masking, encryption, or restricted access. This ensures that sensitive datasets remain compliant with privacy regulations and corporate data-handling policies.
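The detect-and-mask step can be illustrated with a toy example. The two regular expressions below are illustrative assumptions only; commercial governance tools use far broader classifiers, and nothing here represents a specific product.

```python
import re

# Illustrative patterns only; production classifiers cover far more cases.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_sensitive(text: str) -> tuple[str, list[str]]:
    """Replace detected values with tags and return the tags that were found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


masked, tags = mask_sensitive("Contact jane.doe@example.com, SSN 123-45-6789.")
print(masked)  # Contact [EMAIL], SSN [US_SSN].
print(tags)    # ['EMAIL', 'US_SSN']
```

Asking a vendor to demonstrate the equivalent of this step on your own sample data is a quick way to test governance claims.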

Without comprehensive visibility, governance programs have critical blind spots, exposing organizations to regulatory violations, operational risks, and reputational damage. Evaluate vendors based on role-based access controls, audit logging, anomaly detection, and shadow AI discovery capabilities. Leaders implementing AI governance frameworks should prioritize vendors that provide continuous compliance monitoring rather than point-in-time audits.

Ownership becomes particularly critical for models fine-tuned on your data, custom workflows, and AI-generated outputs. Who owns the fine-tuned model? Who controls the deployment keys? In many cases, not the client. Demand explicit contractual language confirming that your organization retains full ownership of training data, fine-tuned models, and all outputs generated by the system.

The recent collapse of Builder.ai, a once $1.3B AI platform, exposed a dangerous reality: businesses that depend too heavily on a single cloud vendor face serious risk. Beyond technical security, evaluate vendor financial stability, redundancy architecture, disaster recovery capabilities, and contractual SLAs. What happens if the vendor experiences an outage, security breach, or business failure? Request documentation of their incident response procedures, backup systems, and business continuity guarantees.

Successful vendor evaluation requires structured comparison across multiple dimensions. Create a scorecard that weights these 13 questions according to your organization's priorities:

For regulated industries (healthcare, finance, government): Questions 1-4 and 11-13 carry the highest weight. Data sovereignty, compliance certifications, and security governance are non-negotiable requirements that eliminate vendors before technical evaluation begins.

For organizations escaping pilot purgatory: Questions 5-7 become critical differentiators. In one IDC study, for every 33 AI prototypes a company built, only 4 made it into production—an 88% failure rate for scaling AI initiatives. Vendors must demonstrate proven deployment methodologies, not just impressive demos. Organizations looking to escape AI purgatory should evaluate vendors based on their track record of production deployments rather than pilot success stories.

For cost-conscious enterprises: Questions 8-10 determine long-term total cost of ownership. Organizations trapped in vendor-locked systems end up diverting precious resources—both financial and human—away from innovation and toward infrastructure management. This results in delayed training cycles, slower model iterations, and missed market opportunities as engineering talent gets consumed by working around limitations rather than building competitive advantages.
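A weighted scorecard along these lines can be sketched in a few lines. The weights and vendor scores below are hypothetical; only the grouping of questions (1-4 and 11-13 weighted highest for regulated industries) follows the text.

```python
def weighted_score(scores: dict[int, float], weights: dict[int, float]) -> float:
    """Weighted average of per-question scores (0-5), keyed by question number."""
    total_weight = sum(weights[q] for q in scores)
    return sum(scores[q] * weights[q] for q in scores) / total_weight


# Hypothetical weighting for a regulated enterprise: questions 1-4 and 11-13
# count triple, questions 5-10 count once.
weights = {q: 3.0 if q <= 4 or q >= 11 else 1.0 for q in range(1, 14)}

# Hypothetical scores for two vendors on a 0-5 scale.
vendor_a = {q: 4.0 if q <= 4 or q >= 11 else 2.0 for q in range(1, 14)}
vendor_b = {q: 2.0 if q <= 4 or q >= 11 else 5.0 for q in range(1, 14)}

print(round(weighted_score(vendor_a, weights), 2))  # 3.56
print(round(weighted_score(vendor_b, weights), 2))  # 2.67
```

With equal weights, vendor B would score higher (about 3.38 against 3.08), so the weighting itself changes the ranking. That is the reason to agree on weights before scoring any vendor.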

Even with the right vendor, successful AI deployment requires organizational readiness beyond vendor capabilities:

Establish clear ownership: Getting to production means someone has to own the outcome. Success means line managers and front-line teams driving adoption, not just the AI lab. When AI stays in the hands of specialists, it stays in pilot purgatory, because nobody with operational authority has skin in the game.

Build data foundations first: You cannot run a high-precision assembly line using rusted, mislabeled parts. A reliable 'AI Factory' requires a continuous feed of clean, 'AI-ready' data. No vendor can compensate for poor data governance, fragmented data systems, or inadequate data quality.

Plan for continuous governance: AI systems are iterative and data-driven; a snapshot audit once a year isn't enough. By 2026, compliance programs will require automated pipelines that collect evidence continuously: data lineage for training sets, model evaluation metrics, retraining logs, access histories, and prompt/response audits.
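As a rough illustration of continuous evidence collection, the sketch below builds audit entries that are each chained to the previous entry's hash, so later tampering is detectable. The record fields, event kinds, and model name are hypothetical; this is one possible shape for such a pipeline, not a compliance standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(kind: str, payload: dict, prev_hash: str = "") -> dict:
    """Build one audit-evidence entry, chained to the previous entry's hash."""
    body = {
        "kind": kind,  # e.g. "model_evaluation", "retraining", "access"
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "payload": payload,
        "prev_hash": prev_hash,
    }
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return {**body, "hash": digest}


# Hypothetical events for a hypothetical model.
first = evidence_record("model_evaluation", {"model": "churn-v3", "auc": 0.91})
second = evidence_record("retraining", {"model": "churn-v3", "rows": 120_000}, first["hash"])
print(second["prev_hash"] == first["hash"])  # True
```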

Shakudo's architecture directly addresses the most pressing concerns identified in vendor evaluation:

Data sovereignty by design: Unlike cloud-based AI platforms, Shakudo deploys entirely within your private cloud or on-premises infrastructure, ensuring sensitive data never leaves your jurisdiction. This eliminates the compliance complexity and cross-border transfer challenges that 71% of organizations cite as their top regulatory challenge.

Accelerated deployment timelines: By providing pre-integrated AI/ML tools and automated DevOps, Shakudo collapses the typical 6-18 month deployment timeline to days or weeks. Organizations escape pilot purgatory through production-ready infrastructure rather than custom integration projects.

Zero vendor lock-in: Shakudo's modular, infrastructure-agnostic design prevents vendor lock-in and enables organizations to swap AI models and tools as capabilities evolve. This addresses the critical future-proofing challenge in a market where model capabilities improve exponentially every 12-18 months.

Transparent, predictable costs: With Shakudo, you control your infrastructure costs directly without usage-based pricing experiments or API call fees that create budget uncertainty. Implementation costs remain predictable because pre-integrated tools eliminate the 3-5x multiplier typical of custom enterprise AI deployments.

For regulated enterprises in healthcare, finance, and government, Shakudo's sovereign AI approach means faster compliance, predictable costs, and the ability to innovate without compromising data control.

The questions you ask during vendor evaluation determine whether your AI investments deliver lasting value or join the growing pile of abandoned pilots. According to MIT's State of AI in Business 2025 report, more than 95 percent of companies are seeing little or no measurable return. The difference between these outcomes rarely lies in the sophistication of the AI models themselves.

Instead, success correlates with vendors who provide data sovereignty guarantees, proven deployment methodologies, architectural flexibility, transparent pricing, and comprehensive governance capabilities. Organizations that prioritize these criteria during selection position themselves to scale AI effectively while competitors remain trapped in pilot purgatory or locked into restrictive vendor relationships. As outlined in our comprehensive AI platform evaluation guide, systematic vendor assessment using these 13 questions creates a defensible framework for strategic AI procurement decisions.

As AI spending continues its exponential growth toward a projected $390.9 billion market by 2025, the enterprises that ask tough vendor questions upfront will capture disproportionate value. Those that focus solely on model performance metrics while ignoring deployment reality, data sovereignty, and long-term flexibility will continue contributing to the 74% of companies struggling to see tangible AI value.

The 13 questions outlined here provide a framework for rigorous vendor evaluation. Use them to move beyond impressive demos and marketing promises toward vendors that can deliver production-grade AI systems that scale, comply with regulations, and adapt as your needs evolve. Your technical teams, compliance officers, and CFO will thank you when your AI initiatives reach production on time, on budget, and under your control.

Ready to evaluate AI deployment options that put data sovereignty and deployment speed first? Explore how Shakudo's sovereign AI platform enables enterprises to scale AI initiatives without the typical vendor lock-in, compliance compromises, or prolonged deployment timelines that plague traditional vendors.

The 2025 enterprise AI market reveals a decisive shift: safety, reliability, and regulatory compliance have emerged as primary criteria for AI vendor selection, overtaking raw model performance as the critical differentiator. This transformation comes at a pivotal moment. Enterprise AI spending reached $37 billion in 2025, up from $11.5 billion in 2024, representing a 3.2x year-over-year increase. Yet despite this massive investment surge, 74% of companies had yet to see tangible value from their AI initiatives in 2024, with nearly two-thirds of organizations remaining stuck in pilot stage as of mid-2025.

The stakes are extraordinarily high. Organizations that cannot scale AI effectively face a 75% risk of business failure, according to recent industry analysis. Meanwhile, 92% of AI vendors claim broad data usage rights, far exceeding the market average of 63%, creating significant intellectual property and data governance concerns that most enterprises discover too late in the procurement process.

For engineering, data, and IT leaders navigating this landscape, vendor selection has evolved from a technical evaluation into a strategic risk calculation. The questions you ask before signing a contract will determine whether your AI initiatives deliver transformative value or join the 90% of pilots that never reach production.

Before diving into specific evaluation questions, it's essential to understand the complete cost structure of enterprise AI vendor relationships. The advertised subscription price represents only a fraction of true total cost of ownership. Enterprise implementations typically cost 3-5 times the advertised subscription price when accounting for integration, customization, infrastructure scaling, and operational overhead required to maintain AI systems in production.

Two years and several million dollars later, what should have revolutionized Ford's maintenance approach remained stuck in what industry experts call 'pilot purgatory'—a common fate for 70-90% of enterprise AI initiatives. This failure pattern repeats across industries, and poor vendor selection amplifies every underlying challenge: data integration becomes impossible, deployment timelines stretch from months to years, and switching costs create de facto lock-in even when performance disappoints.

Only 22% of organizations have moved beyond experimentation to strategic AI deployment. The distinguishing factor between these successful deployments and failed pilots often traces back to vendor evaluation criteria established during initial selection.

The volume of data that enterprises need to manage continues to grow exponentially, while regulations around data locality, residency and sovereignty simultaneously continue to multiply across jurisdictions worldwide. Companies must be vigilant about keeping up with rapidly evolving national and regional policies around who can access specific data; how it's collected, processed and stored; and where it's accessed from or transferred to.

Demand specificity: which cloud regions, which data centers, which jurisdictions. Cloud-based AI platforms create immediate data sovereignty conflicts for regulated industries. Countries including India, China, and EU members are enforcing strict data localization requirements that make vendor location and deployment options critical decision factors.

Scrutinize the fine print. With 92% of AI vendors claiming broad data usage rights, understanding exactly what happens to your proprietary data, training inputs, and model outputs is non-negotiable. Ask explicitly: Will our data train your models? Can you access our prompts and responses? What happens to our data if we terminate the relationship?

Some laws set conditions around cross-border transfers, while others prohibit them altogether. For instance, in some jurisdictions, companies need to demonstrate a legal requirement to move the data, retain a local copy of the data for compliance reasons, or both. Other regulations govern whether companies can access data stored in a region, generate insights and then export those insights to HQ for further analysis or model training.

This complexity affects 71% of organizations who cite cross-border data transfer compliance as their top regulatory challenge in 2025.

Financial institutions face intense regulatory scrutiny and high-stakes AI applications. Demand evidence of SOC 2, ISO 27001, GDPR compliance, and industry-specific certifications (HIPAA for healthcare, FedRAMP for government). Banks must validate models, document assumptions and limitations, perform ongoing monitoring, conduct independent reviews, and maintain governance over third-party AI tools and vendors. Your vendor must support these requirements with documented audit trails, not promises.

Timelines vary: simple pilots with clean data and clear integration points can move to production in 3–6 months, while complex systems with multiple data sources and compliance requirements may take 9–18 months. Demand case studies from similar implementations in your industry. Ask about the longest deployment the vendor has experienced and what caused the delays.

The best performers follow a 14-month timeline from initial pilot to meaningful ROI. Any vendor promising significantly faster timelines without understanding your data infrastructure, integration requirements, and organizational readiness should raise red flags.

Integration complexity kills more AI projects than technical performance issues. 45% of teams cite data quality and pipeline consistency as their top production obstacle. Another 40% point to security and compliance challenges. Ask for detailed technical architecture reviews, API documentation, and integration examples with systems similar to yours.

McKinsey's 2025 State of AI survey shows just one-third of companies have managed enterprise-wide scaling. Vendors often excel at small pilots but lack the infrastructure, support model, or architectural design to support hundreds or thousands of users across multiple business units. Request architecture diagrams showing how the solution scales, performance benchmarks at different user volumes, and customer references who have successfully scaled beyond initial deployments.

As enterprises scale their AI initiatives, one of the biggest architectural risks they face is vendor lock-in—being tied too tightly to a single model provider or cloud platform. In the rapidly evolving AI ecosystem, where new foundation models and APIs emerge almost weekly, this dependency can quickly limit innovation and flexibility. Teams that commit early to one ecosystem often find themselves unable to adopt newer, better, or more cost-effective models without rewriting large portions of their stack.

Demand contractual guarantees for data export in standard formats, model portability, and reasonable termination terms. Contracts should include provisions for data portability and code access to mitigate risks of lock-in.

AI model capabilities improve exponentially every 12 to 18 months, meaning today's best-in-class solution may become obsolete within months. The return of competitive open-weight models means enterprises can pair a proprietary default with targeted open-weight deployments to meet sensitivity, sovereignty, or cost objectives.

Vendors that lock you into proprietary models create strategic liability. For example, TrueFoundry's gateway supports any OpenAI-compatible model, so if you write your code against TrueFoundry's OpenAI-style API, you can switch between OpenAI, Azure OpenAI, Anthropic, or your own models with a configuration change—no code rewrite required. This architectural pattern should be your standard expectation.

AI vendors are experimenting with pricing rates and models, creating cost uncertainty for enterprise CIOs deploying the technology. While many AI vendors have moved to hybrid pricing models that combine subscriptions with use- or outcome-based pricing, these strategies are not set in stone. In some cases, AI vendors are changing their pricing rates or models every few weeks.

Demand transparent, predictable pricing models with usage caps. CIOs should set budget limits when employees are working with use-based AI tools because API use can drive huge, unexpected costs. Negotiate contractual protections against mid-term price changes and understand exactly what triggers additional costs.

AI governance tools automatically identify, classify, and tag sensitive data before it is used in model training or inference. They detect personal, financial, or proprietary information and apply protective controls such as masking, encryption, or restricted access. This ensures that sensitive datasets remain compliant with privacy regulations and corporate data-handling policies.

Without comprehensive visibility, governance programs have critical blind spots, exposing organizations to regulatory violations, operational risks, and reputational damage. Evaluate vendors based on role-based access controls, audit logging, anomaly detection, and shadow AI discovery capabilities. Leaders implementing AI governance frameworks should prioritize vendors that provide continuous compliance monitoring rather than point-in-time audits.

This question becomes particularly critical for models fine-tuned on your data, custom workflows, and AI-generated outputs. Who owns the model fine-tuning? Who controls the deployment keys? In many cases, not the client. Demand explicit contractual language confirming that your organization retains full ownership of training data, fine-tuned models, and all outputs generated by the system.

The recent collapse of Builder.ai, a once $1.3B AI platform, exposed a dangerous reality: businesses that depend too heavily on a single cloud vendor face serious risk. Beyond technical security, evaluate vendor financial stability, redundancy architecture, disaster recovery capabilities, and contractual SLAs. What happens if the vendor experiences an outage, security breach, or business failure? Request documentation of their incident response procedures, backup systems, and business continuity guarantees.

Successful vendor evaluation requires structured comparison across multiple dimensions. Create a scorecard that weights these 13 questions according to your organization's priorities:

For regulated industries (healthcare, finance, government): Questions 1-4 and 11-13 carry the highest weight. Data sovereignty, compliance certifications, and security governance are non-negotiable requirements that eliminate vendors before technical evaluation begins.

For organizations escaping pilot purgatory: Questions 5-7 become critical differentiators. In one IDC study, for every 33 AI prototypes a company built, only 4 made it into production—an 88% failure rate for scaling AI initiatives. Vendors must demonstrate proven deployment methodologies, not just impressive demos. Organizations looking to escape AI purgatory should evaluate vendors based on their track record of production deployments rather than pilot success stories.

For cost-conscious enterprises: Questions 8-10 determine long-term total cost of ownership. Organizations trapped in vendor-locked systems end up diverting precious resources—both financial and human—away from innovation and toward infrastructure management. This results in delayed training cycles, slower model iterations, and missed market opportunities as engineering talent gets consumed by working around limitations rather than building competitive advantages.

Even with the right vendor, successful AI deployment requires organizational readiness beyond vendor capabilities:

Establish clear ownership: Getting to production means someone has to own the outcome. Success means line managers and front-line teams driving adoption—not just the AI lab. When it stays in the hands of specialists, it stays in pilot purgatory. Nobody with operational authority has skin in the game.

Build data foundations first: You cannot run a high-precision assembly line using rusted, mislabeled parts. A reliable 'AI Factory' requires a continuous feed of clean, 'AI-ready' data. No vendor can compensate for poor data governance, fragmented data systems, or inadequate data quality.

Plan for continuous governance: AI systems are iterative and data-driven; a snapshot audit once a year isn't enough. By 2026, compliance programs will require automated pipelines that collect evidence continuously: data lineage for training sets, model evaluation metrics, retraining logs, access histories, and prompt/response audits.

Shakudo's architecture directly addresses the most pressing concerns identified in vendor evaluation:

Data sovereignty by design: Unlike cloud-based AI platforms, Shakudo deploys entirely within your private cloud or on-premises infrastructure, ensuring sensitive data never leaves your jurisdiction. This eliminates the compliance complexity and cross-border transfer challenges that 71% of organizations cite as their top regulatory challenge.

Accelerated deployment timelines: By providing pre-integrated AI/ML tools and automated DevOps, Shakudo collapses the typical 6-18 month deployment timeline to days or weeks. Organizations escape pilot purgatory through production-ready infrastructure rather than custom integration projects.

Zero vendor lock-in: Shakudo's modular, infrastructure-agnostic design prevents vendor lock-in and enables organizations to swap AI models and tools as capabilities evolve. This addresses the critical future-proofing challenge in a market where model capabilities improve exponentially every 12-18 months.

Transparent, predictable costs: With Shakudo, you control your infrastructure costs directly without usage-based pricing experiments or API call fees that create budget uncertainty. Implementation costs remain predictable because pre-integrated tools eliminate the 3-5x multiplier typical of custom enterprise AI deployments.

For regulated enterprises in healthcare, finance, and government, Shakudo's sovereign AI approach means faster compliance, predictable costs, and the ability to innovate without compromising data control.

The questions you ask during vendor evaluation determine whether your AI investments deliver lasting value or join the growing pile of abandoned pilots. According to MIT's State of AI in Business 2025 report, more than 95 percent of companies are seeing little or no measurable return. The difference between these outcomes rarely lies in the sophistication of the AI models themselves.

Instead, success correlates with vendors who provide data sovereignty guarantees, proven deployment methodologies, architectural flexibility, transparent pricing, and comprehensive governance capabilities. Organizations that prioritize these criteria during selection position themselves to scale AI effectively while competitors remain trapped in pilot purgatory or locked into restrictive vendor relationships. As outlined in our comprehensive AI platform evaluation guide, systematic vendor assessment using these 13 questions creates a defensible framework for strategic AI procurement decisions.

As AI spending continues its exponential growth toward a projected $390.9 billion market by 2025, the enterprises that ask tough vendor questions upfront will capture disproportionate value. Those that focus solely on model performance metrics while ignoring deployment reality, data sovereignty, and long-term flexibility will continue contributing to the 74% of companies struggling to see tangible AI value.

The 13 questions outlined here provide a framework for rigorous vendor evaluation. Use them to move beyond impressive demos and marketing promises toward vendors that can deliver production-grade AI systems that scale, comply with regulations, and adapt as your needs evolve. Your technical teams, compliance officers, and CFO will thank you when your AI initiatives reach production on time, on budget, and under your control.
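One way to put the 13 questions to work is a simple weighted scorecard. The sketch below is illustrative only: the 0-5 scoring scale, the specific weights, and the vendor names are hypothetical assumptions, not values prescribed by this article. The example weighting emphasizes questions 1-4 and 11-13, as a regulated-industry buyer might.

```python
from dataclasses import dataclass

NUM_QUESTIONS = 13  # the 13 vendor evaluation questions


@dataclass
class VendorScores:
    name: str
    scores: dict[int, float]  # question number (1-13) -> score on an assumed 0-5 scale


def weighted_score(vendor: VendorScores, weights: dict[int, float]) -> float:
    """Return the weighted average score (0-5) across all 13 questions."""
    expected = set(range(1, NUM_QUESTIONS + 1))
    if set(weights) != expected or set(vendor.scores) != expected:
        raise ValueError("weights and scores must cover questions 1-13")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[q] * vendor.scores[q] for q in expected) / total_weight


def rank_vendors(vendors: list[VendorScores], weights: dict[int, float]) -> list[VendorScores]:
    """Sort vendors from highest to lowest weighted score."""
    return sorted(vendors, key=lambda v: weighted_score(v, weights), reverse=True)


# Hypothetical weighting: questions 1-4 and 11-13 count three times as much.
PRIORITY = {1, 2, 3, 4, 11, 12, 13}
weights = {q: (3.0 if q in PRIORITY else 1.0) for q in range(1, NUM_QUESTIONS + 1)}

# Hypothetical vendors and scores, for illustration only.
vendor_a = VendorScores("Vendor A", {q: 3.0 for q in range(1, 14)})
vendor_b = VendorScores("Vendor B", {q: (5.0 if q in PRIORITY else 2.0) for q in range(1, 14)})
vendor_c = VendorScores("Vendor C", {q: (2.0 if q in PRIORITY else 5.0) for q in range(1, 14)})

ranking = [v.name for v in rank_vendors([vendor_a, vendor_b, vendor_c], weights)]
print(ranking)
```

With these made-up numbers, Vendor C has a higher unweighted average than Vendor A but ranks last once the priority questions are weighted more heavily, which is the kind of trade-off a scorecard is meant to surface before contract negotiations begin.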

Ready to evaluate AI deployment options that put data sovereignty and deployment speed first? Explore how Shakudo's sovereign AI platform enables enterprises to scale AI initiatives without the typical vendor lock-in, compliance compromises, or prolonged deployment timelines that plague traditional vendors.
