Over 80% of companies are using or exploring AI in 2025, yet only 15% have achieved enterprise-wide implementation. Although organizations increased AI infrastructure spending by 97% year-over-year to $47.4 billion, the vast majority remain trapped in what industry analysts call "AI purgatory." Only one in four AI initiatives delivers its expected ROI, and 70-85% of AI projects fail to move past the pilot stage.

The math doesn't add up. More investment should mean more production deployments, but the opposite is happening. The gap between pilot enthusiasm and production reality continues to widen, leaving enterprises with proof-of-concept fatigue and mounting pressure to deliver actual business value.

Why do most AI projects stall? The failure isn't about algorithm sophistication or computing power. It's about the unglamorous infrastructure, compliance, and integration challenges that separate experimental notebooks from production systems.

Over 85% of technology leaders expect to modify infrastructure before deploying AI at scale, with 60% citing integration with legacy systems as their primary challenge. Your organization's existing technology stack wasn't designed for AI workloads. Mainframes running critical business logic, data warehouses optimized for batch processing, and monolithic applications with decades of accumulated complexity create integration nightmares.

The problem intensifies when AI models need real-time access to operational data locked in these systems. Building custom connectors for each integration point creates technical debt that compounds over time.

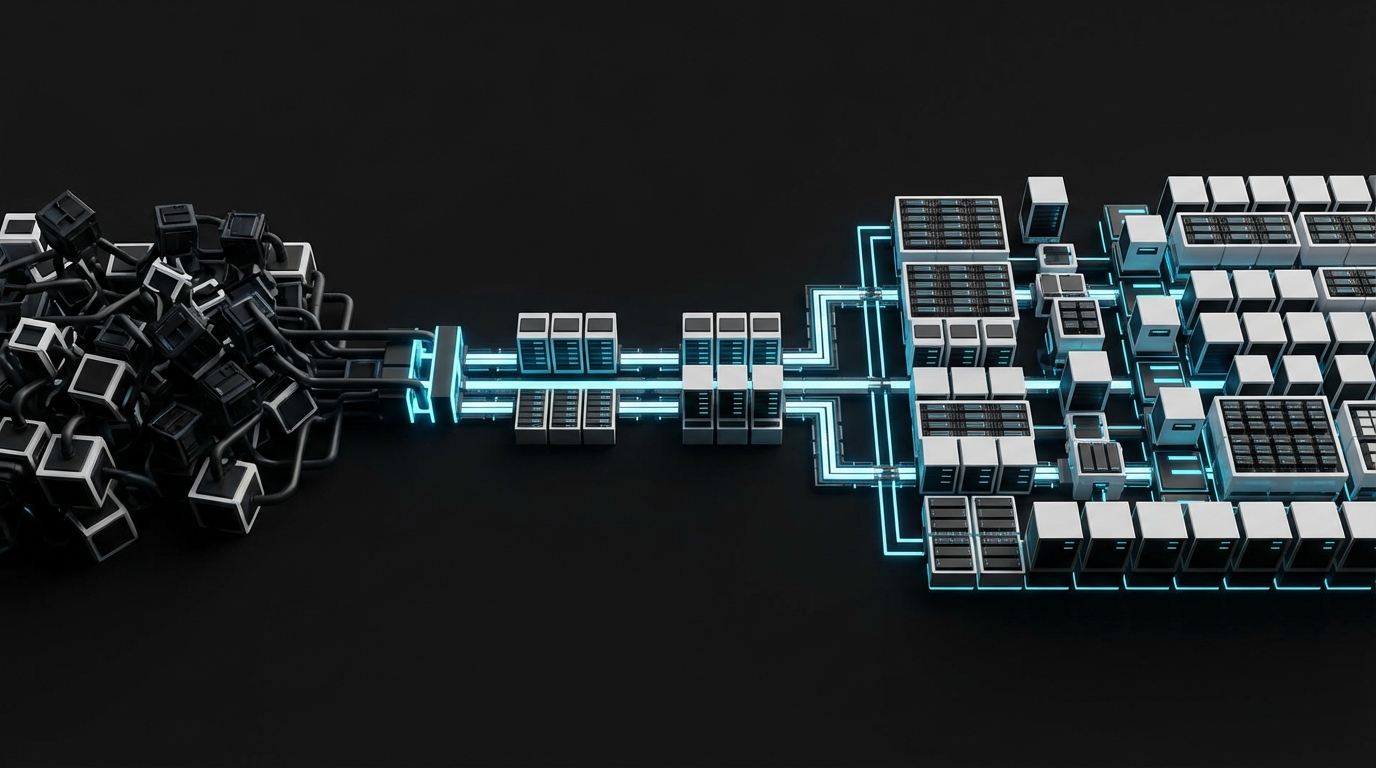

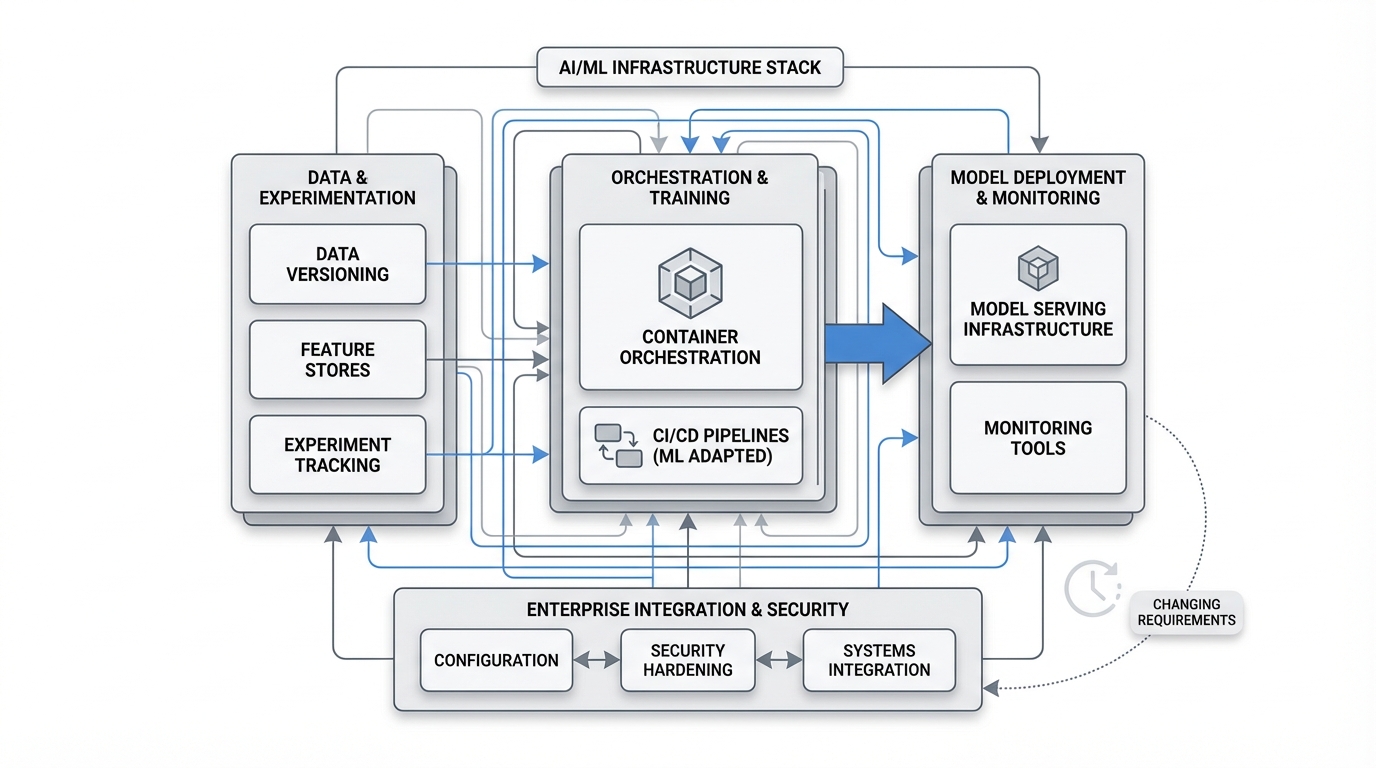

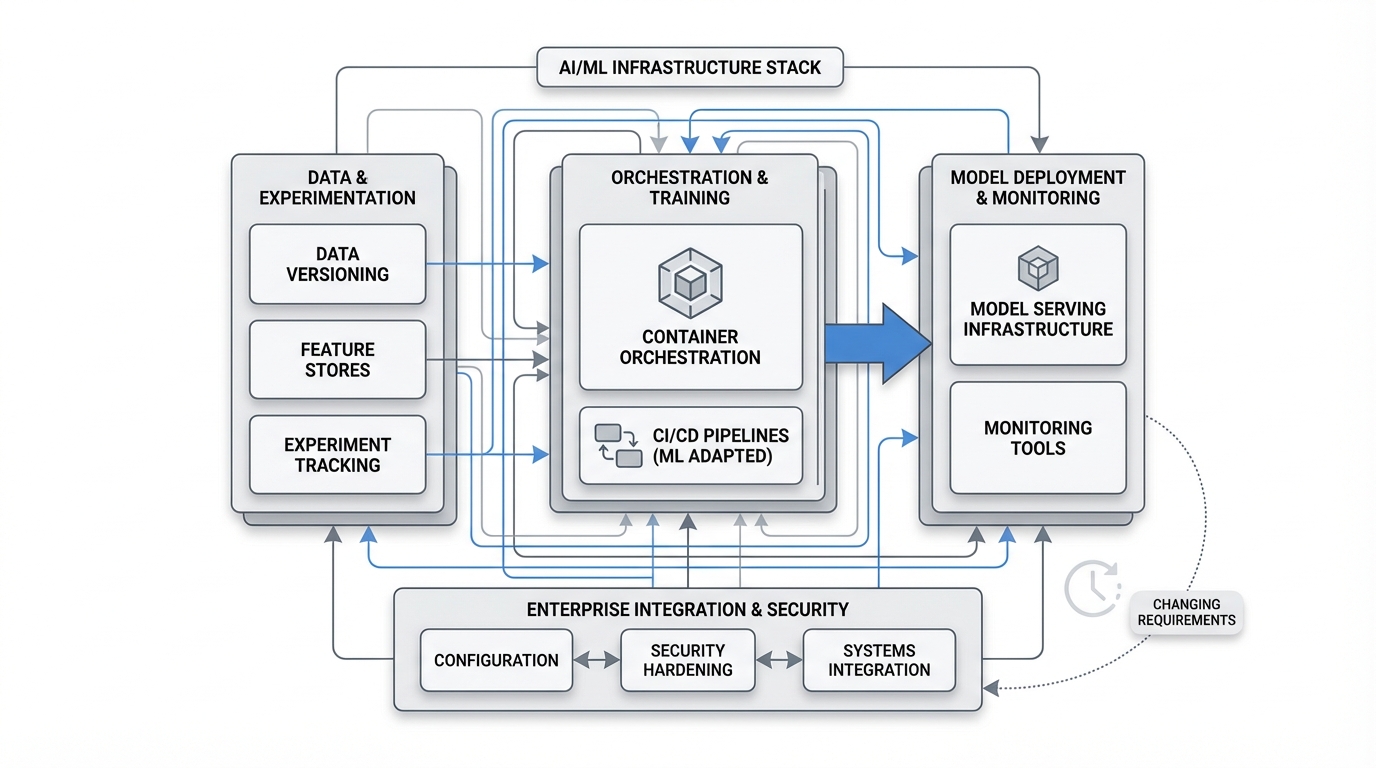

Deploying AI and ML solutions can take up to nine months, with machine learning teams spending seven months to deploy one project to production. This isn't because data scientists are slow. It's because standing up the infrastructure for a single AI application requires orchestrating dozens of specialized tools.

Consider what's needed: container orchestration platforms, model serving infrastructure, feature stores, experiment tracking systems, monitoring tools, data versioning solutions, and CI/CD pipelines adapted for ML workflows. Each tool requires configuration, security hardening, and integration with enterprise systems. By the time infrastructure is ready, business requirements have often changed.

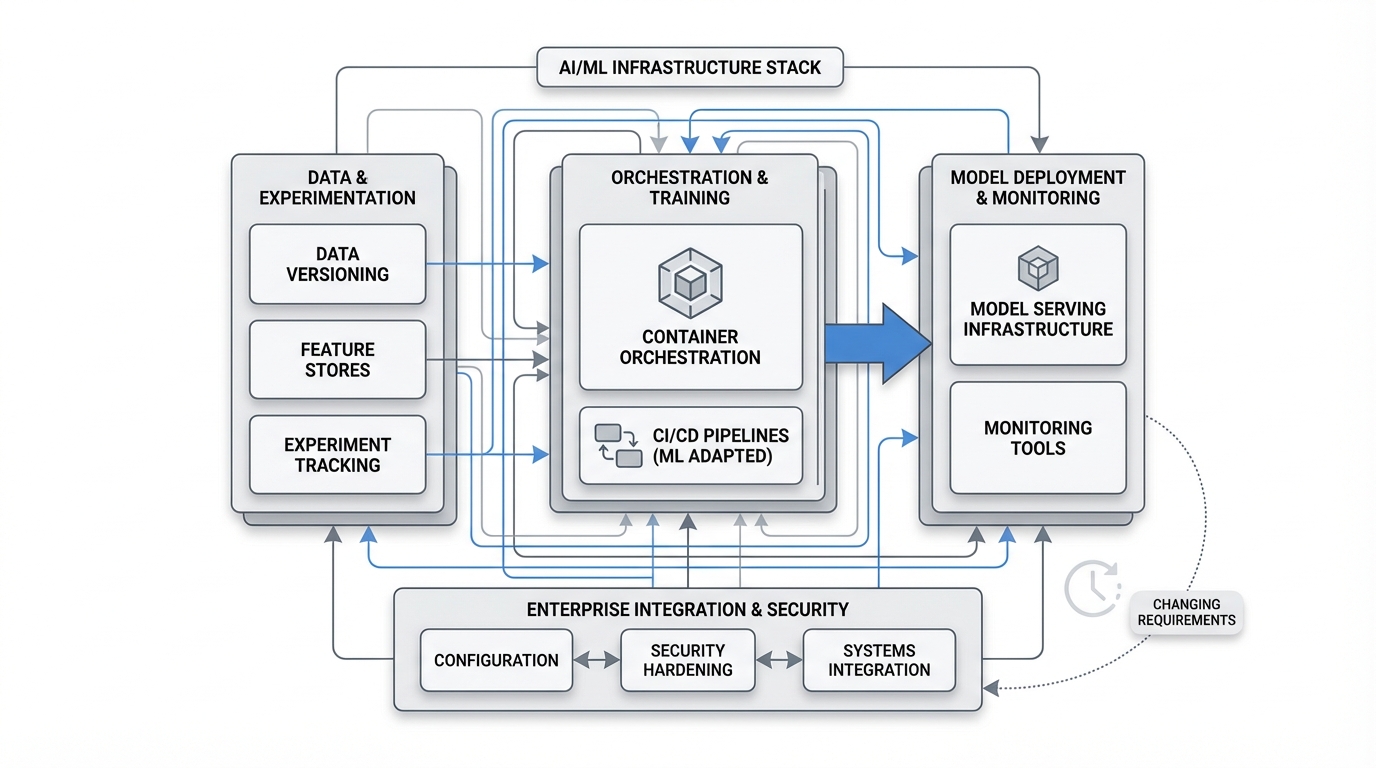

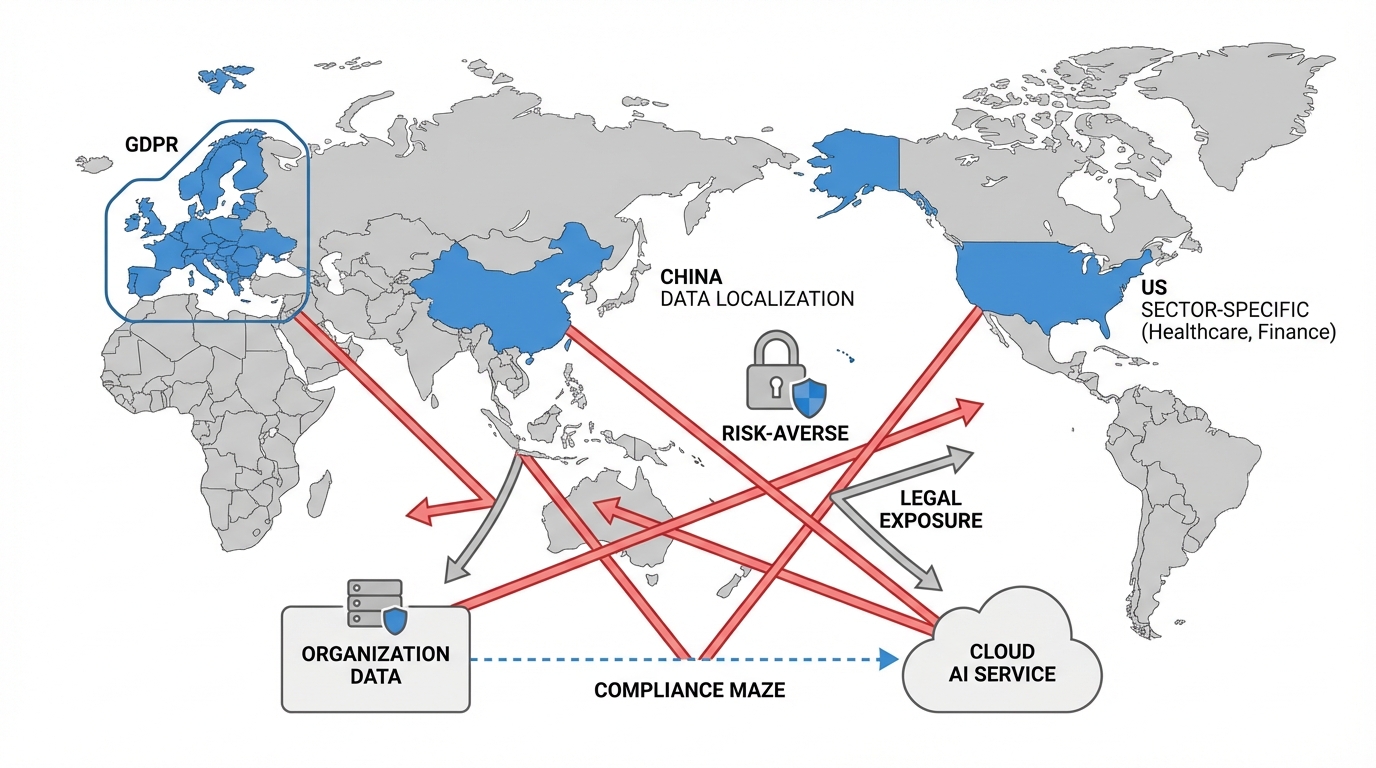

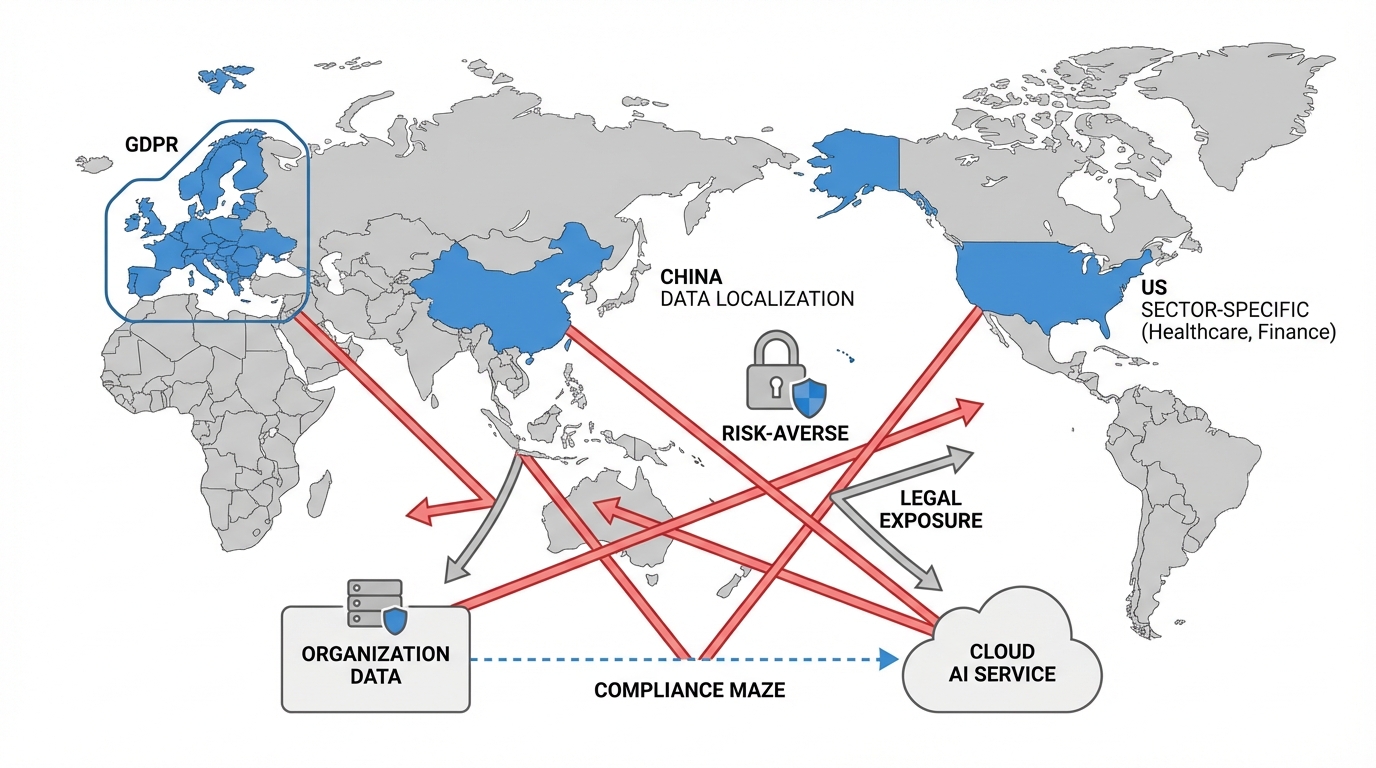

72% of leaders list data sovereignty and regulatory compliance as their top AI-related challenge for 2026, up from 49% in 2024. This represents a fundamental shift in how enterprises view AI deployment. What was once primarily a technical challenge has become a legal and geopolitical one.

71% of organizations cite cross-border data transfer compliance as their top regulatory challenge, with fragmented frameworks across jurisdictions. GDPR in Europe, data localization laws in China, sector-specific regulations in healthcare and finance, and emerging AI-specific legislation create a compliance maze. Cloud-based AI services often require data to leave organizational boundaries, creating legal exposure that risk-averse enterprises cannot accept.

Only 14% of leaders have the right talent to meet AI goals, with 61% reporting skills gaps in managing specialized infrastructure, up from 53% a year ago. The problem isn't just finding data scientists. It's finding people who understand MLOps, can configure Kubernetes for GPU workloads, know how to optimize model serving latency, and can debug distributed training failures.

This skills gap is widening because AI infrastructure tooling evolves faster than training programs can adapt. The MLOps engineer who mastered last year's toolchain faces a different landscape today.

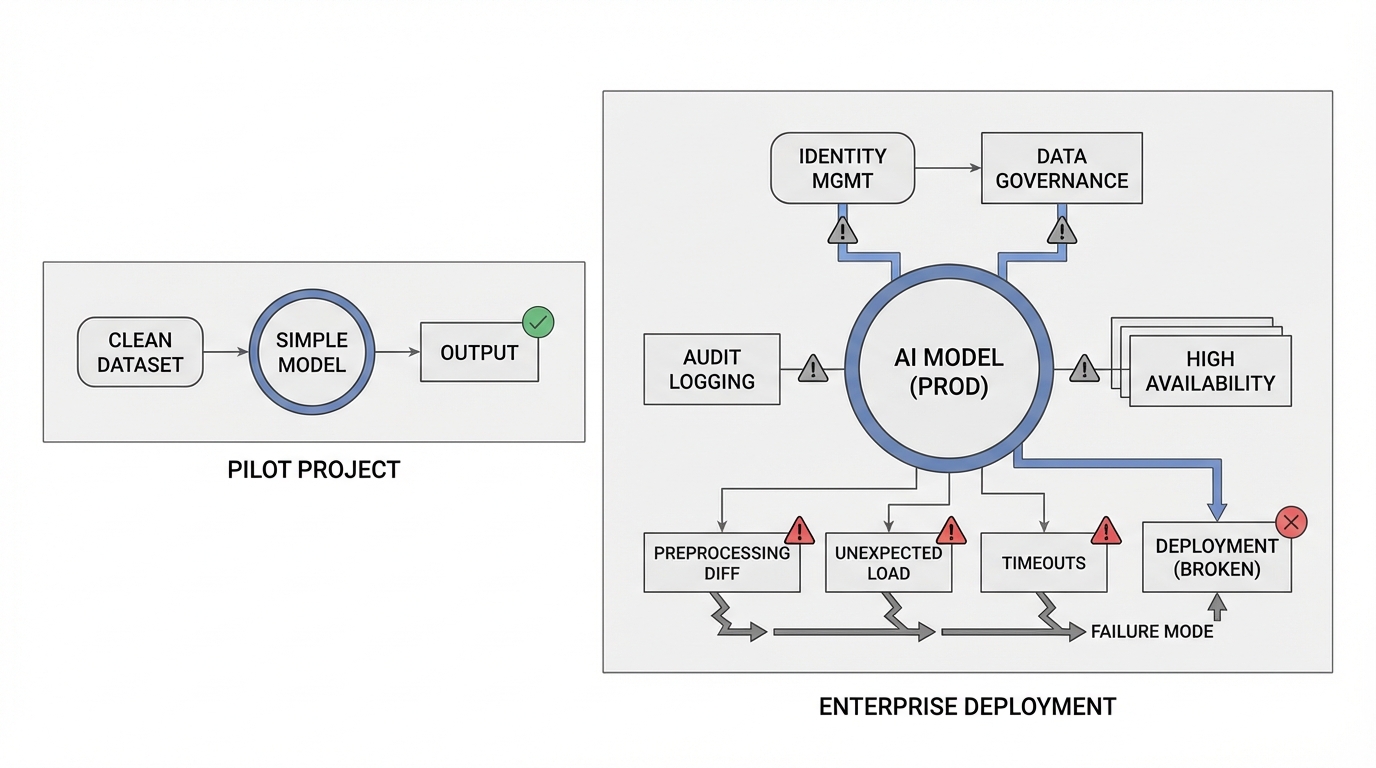

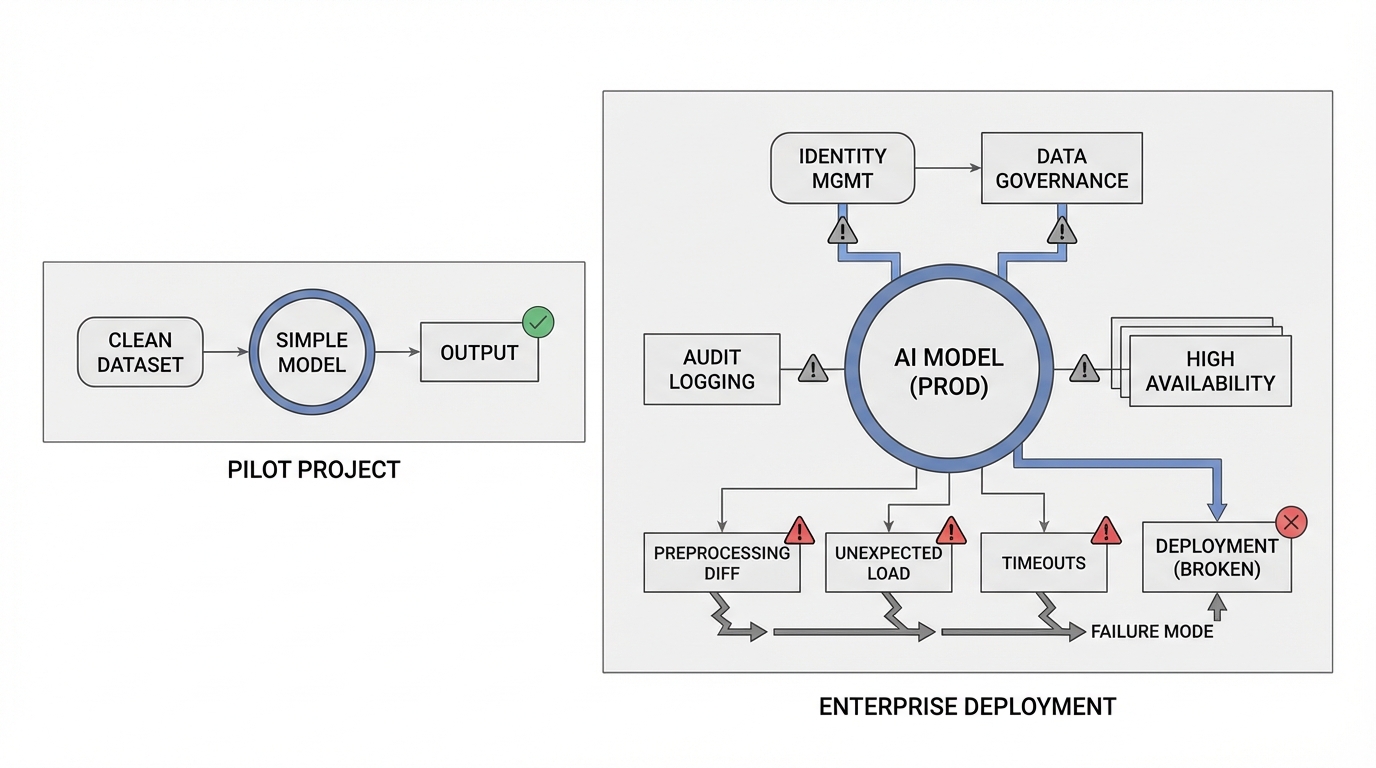

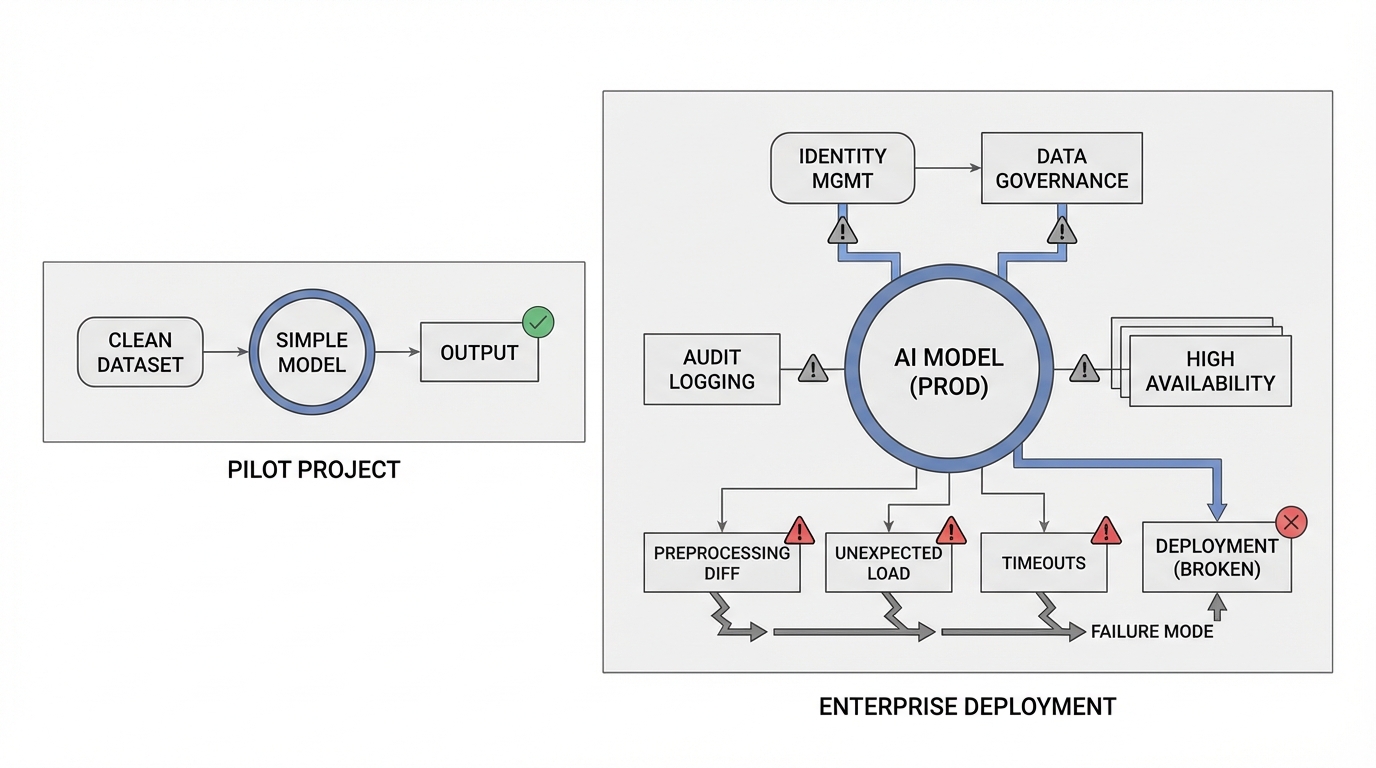

64% of organizations cite integration complexity as a top barrier for scaling AI. Moving from a pilot project with clean datasets to enterprise deployment means integrating with identity management systems, connecting to data governance frameworks, implementing audit logging, and ensuring high availability.

Each integration point introduces potential failure modes. A model that worked perfectly in development fails in production because of subtle differences in data preprocessing, unexpected load patterns, or timeout configurations in enterprise networking.
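One of the failure modes above, a mismatch between the data a model was trained on and the data it sees in production, can be caught with a coarse statistical check. The following is a minimal sketch, not a full drift detector: the names `FeatureStats`, `summarize`, and `has_skew`, and the three-standard-deviation threshold, are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class FeatureStats:
    """Summary of one numeric feature as seen at training time."""
    mean: float
    std: float


def summarize(values: list[float]) -> FeatureStats:
    # Population standard deviation is adequate for a coarse sanity check.
    return FeatureStats(mean=mean(values), std=pstdev(values))


def has_skew(train: FeatureStats, live_values: list[float], max_z: float = 3.0) -> bool:
    """Return True when the live mean sits more than `max_z` training
    standard deviations away from the training mean."""
    if not live_values:
        return False  # nothing to compare yet
    live_mean = mean(live_values)
    if train.std == 0:
        return live_mean != train.mean
    return abs(live_mean - train.mean) / train.std > max_z


# Training data in one unit; production accidentally sends values scaled by 10.
train_stats = summarize([10, 12, 11, 9, 13])
print(has_skew(train_stats, [11, 12, 10]))      # same scale as training
print(has_skew(train_stats, [110, 120, 100]))   # preprocessing mismatch
```

A check like this only flags gross mismatches such as a unit change or a skipped normalization step. It does not replace sharing one preprocessing code path between training and serving.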

Escaping AI purgatory requires addressing these barriers systematically, not heroically.

Instead of assembling dozens of point solutions, organizations that successfully scale AI use platforms that provide pre-integrated toolchains. This reduces deployment time from months to days by eliminating the integration work that consumes ML engineering resources.

For regulated industries, data sovereignty isn't negotiable. Architectures that enable AI deployment within organizational boundaries solve compliance challenges without sacrificing capability. This approach addresses the 72% of leaders citing compliance as their top concern.
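As a rough illustration of keeping inference inside approved boundaries, the sketch below routes a request only to endpoints whose location is permitted for the data's jurisdiction. The policy table, location labels, and endpoint names are all hypothetical. Real residency rules are set by legal and compliance teams, not by a lookup table.

```python
# Hypothetical policy: where data from each jurisdiction may be processed.
ALLOWED_LOCATIONS = {
    "eu": {"eu-on-prem", "eu-private-cloud"},
    "us": {"us-on-prem", "us-private-cloud"},
}


def select_endpoint(data_jurisdiction: str, endpoints: list[dict]) -> str:
    """Return the name of the first endpoint allowed to process this data.

    Raises LookupError instead of silently falling back to a
    non-compliant location.
    """
    allowed = ALLOWED_LOCATIONS.get(data_jurisdiction, set())
    for endpoint in endpoints:
        if endpoint["location"] in allowed:
            return endpoint["name"]
    raise LookupError(f"no compliant endpoint for jurisdiction {data_jurisdiction!r}")


ENDPOINTS = [
    {"name": "scorer-us", "location": "us-private-cloud"},
    {"name": "scorer-eu", "location": "eu-on-prem"},
]
print(select_endpoint("eu", ENDPOINTS))
```

The design choice worth noting is the failure behavior: an unknown jurisdiction raises an error rather than defaulting to whichever endpoint happens to be available.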

Standardizing infrastructure deployment through code reduces configuration drift and makes environments reproducible. Teams can spin up consistent AI environments across development, staging, and production without manual setup.
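The idea can be sketched without committing to any particular infrastructure-as-code tool: declare one base specification, apply small per-environment overrides, and compare what is actually running against what was declared. The field names, image tag, and environment names below are assumptions made for illustration.

```python
from copy import deepcopy

# Single declared source of truth (hypothetical values).
BASE = {"image": "model-server:1.4.2", "replicas": 1, "gpu": False, "log_level": "INFO"}

# Only the differences between environments are written down.
OVERRIDES = {
    "dev": {"log_level": "DEBUG"},
    "staging": {"replicas": 2},
    "prod": {"replicas": 4, "gpu": True},
}


def render(env: str) -> dict:
    """Build the full declared configuration for one environment."""
    if env not in OVERRIDES:
        raise ValueError(f"unknown environment: {env}")
    config = deepcopy(BASE)
    config.update(OVERRIDES[env])
    config["environment"] = env
    return config


def drift(env: str, observed: dict) -> dict:
    """Map each drifted key to a (declared, observed) pair."""
    declared = render(env)
    return {
        key: (value, observed.get(key))
        for key, value in declared.items()
        if observed.get(key) != value
    }


# Someone scaled production down by hand; the declaration exposes it.
running = {**render("prod"), "replicas": 3}
print(drift("prod", running))
```

Because every environment is rendered from the same base, the model server image cannot differ between staging and production unless someone writes that difference down explicitly.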

Rather than directly integrating AI with legacy systems, create API layers that abstract complexity. This approach lets AI applications evolve independently from core systems while maintaining necessary data access.
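A small sketch of such an abstraction layer follows. The AI-facing code depends only on a `CustomerSource` interface, while an adapter hides the legacy record format. The fixed-width layout, the class names, and the `credit_features` function are all hypothetical and stand in for whatever the real system exposes.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str
    balance_cents: int


class CustomerSource(Protocol):
    """The only contract AI applications are allowed to depend on."""
    def get_customer(self, customer_id: str) -> Customer: ...


class LegacyMainframeAdapter:
    """Translates a hypothetical fixed-width record into a Customer."""

    def __init__(self, fetch_record: Callable[[str], str]):
        self._fetch_record = fetch_record  # e.g. a wrapper around the legacy lookup

    def get_customer(self, customer_id: str) -> Customer:
        raw = self._fetch_record(customer_id)
        # Assumed layout: columns 0-9 id, 10-39 name, 40-51 balance in cents.
        return Customer(
            customer_id=raw[0:10].strip(),
            name=raw[10:40].strip(),
            balance_cents=int(raw[40:52]),
        )


def credit_features(source: CustomerSource, customer_id: str) -> dict:
    """Model-side code: unaware of where or how the record is stored."""
    customer = source.get_customer(customer_id)
    return {
        "has_positive_balance": customer.balance_cents > 0,
        "name_length": len(customer.name),
    }


def fake_fetch(customer_id: str) -> str:
    # Stand-in for the legacy system, producing the assumed layout.
    return f"{customer_id:<10}{'Ada Lovelace':<30}{12345:012d}"


print(credit_features(LegacyMainframeAdapter(fake_fetch), "C001"))
```

If the mainframe is later replaced, only the adapter changes. `credit_features` and anything built on it stay as they are.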

Waiting until production to think about model monitoring, versioning, and deployment pipelines guarantees delays. Organizations that build MLOps practices during pilot phases move to production faster.
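Versioning and promotion rules are cheap to introduce during a pilot. The sketch below is an in-memory stand-in for a model registry, written only to show the shape of the practice: every trained model gets a version number, and promotion to production is gated on a recorded metric. The class name, method names, metric, and artifact paths are illustrative assumptions, not the API of any existing registry product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Toy registry: versions are 1-based and append-only."""
    _versions: dict = field(default_factory=dict)
    _production: dict = field(default_factory=dict)

    def register(self, name: str, artifact_path: str, metrics: dict) -> int:
        versions = self._versions.setdefault(name, [])
        versions.append({"artifact_path": artifact_path, "metrics": dict(metrics)})
        return len(versions)

    def promote(self, name: str, version: int, metric: str, minimum: float) -> bool:
        """Mark a version as production only if it clears the metric gate."""
        entry = self._versions[name][version - 1]
        if entry["metrics"].get(metric, float("-inf")) < minimum:
            return False
        self._production[name] = version
        return True

    def production_version(self, name: str) -> Optional[int]:
        return self._production.get(name)


registry = ModelRegistry()
v1 = registry.register("churn", "models/churn/1", {"auc": 0.71})
print(registry.promote("churn", v1, metric="auc", minimum=0.75))
v2 = registry.register("churn", "models/churn/2", {"auc": 0.81})
print(registry.promote("churn", v2, metric="auc", minimum=0.75))
print(registry.production_version("churn"))
```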

No new AI project should have to reinvent data preprocessing, model serving, or monitoring. Building internal libraries of validated components accelerates subsequent projects.

The 14% with adequate talent didn't just hire more data scientists. They built platform teams responsible for creating infrastructure that makes data scientists productive.

Successful scaling often begins with focused applications that deliver clear ROI. Early wins build organizational momentum and justify infrastructure investment. Organizations exploring specific use cases like analyzing sales call transcripts demonstrate how targeted applications can generate immediate business value while building toward broader AI capabilities.

Integrating data governance, access controls, and audit logging from the beginning prevents costly rework. Compliance requirements like SOC 2 should shape architecture decisions, not constrain completed systems.
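One way to build these controls in from the start is to wrap every model entry point so that the access decision and the audit record cannot be skipped. The sketch below assumes a simple role list on the caller and an in-memory log. The role name `model:predict`, the `audited` decorator, and the placeholder `predict` function are hypothetical, and a real system would write to an append-only store instead of a Python list.

```python
import functools
from datetime import datetime, timezone

AUDIT_LOG: list = []  # in-memory stand-in for an append-only audit store


def audited(required_role: str):
    """Check the caller's role and record every attempt, allowed or not."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user: dict, *args, **kwargs):
            allowed = required_role in user.get("roles", ())
            AUDIT_LOG.append({
                "at": datetime.now(timezone.utc).isoformat(),
                "user": user.get("id"),
                "action": func.__name__,
                "allowed": allowed,
            })
            if not allowed:
                raise PermissionError(f"{user.get('id')} lacks role {required_role}")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@audited("model:predict")
def predict(user: dict, features: list) -> bool:
    return sum(features) > 1.0  # placeholder for a real model call


predict({"id": "alice", "roles": ["model:predict"]}, [0.7, 0.6])
try:
    predict({"id": "bob", "roles": []}, [0.7, 0.6])
except PermissionError as err:
    print(err)
print([(entry["user"], entry["allowed"]) for entry in AUDIT_LOG])
```

Denied attempts are logged before the exception is raised, so the audit trail also covers access that was refused.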

The 6% of organizations achieving 5%+ EBIT impact from AI share common characteristics. They treat AI infrastructure as a strategic capability, not an IT project. They invest in platforms that reduce deployment friction. They prioritize time-to-value over perfect solutions.

These organizations recognize that the competitive advantage isn't having the most sophisticated algorithms. It's being able to deploy and iterate on AI applications faster than competitors.

Breaking out of AI purgatory requires confronting uncomfortable truths. Your existing infrastructure wasn't designed for AI workloads. Your compliance requirements won't relax. Your talent shortage won't resolve through hiring alone.

But these constraints don't make enterprise AI impossible. They make the right infrastructure choices critical.

Platforms like Shakudo address these challenges by providing pre-integrated AI operating systems that deploy in days rather than months, support on-premises and private cloud architectures for data sovereignty, and eliminate the integration complexity that keeps up to 85% of AI projects stuck in the pilot phase.

The question isn't whether your organization will scale AI. It's whether you'll do it before your competitors do.

Nearly two-thirds of organizations have not yet begun scaling AI across the enterprise, with 43% remaining in the experimental phase. If you're among them, the gap between experimentation and production deployment grows wider each quarter.

Start by auditing your current AI infrastructure against the five barriers outlined here. Identify which constraints most limit your ability to scale. Then prioritize solutions that address multiple barriers simultaneously rather than optimizing individual components.

The path out of AI purgatory isn't mysterious. It's just hard. But with the right infrastructure foundation, it's achievable.
