Seventy-nine percent of organizations have adopted AI agents, with 66% reporting measurable value through increased productivity. Yet beneath these promising numbers lies a sobering reality: Gartner predicts over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

The difference between these two outcomes often comes down to a single upstream decision: which AI agent framework you choose. With the enterprise agentic AI market projected to grow from $2.58 billion in 2024 to $24.50 billion by 2030 (a 46.2% CAGR), selecting the right foundation has never been more consequential.

Enterprise teams face a paradox. Twenty-three percent of respondents report their organizations are scaling an agentic AI system somewhere in their enterprises, with an additional 39% experimenting with AI agents, though most are only doing so in one or two functions. The challenge isn't lack of interest; it's the compounding cost of wrong decisions.

Security is the top challenge for 62% of practitioners, while 63% of executives cite platform sprawl as a growing concern, a sign that enterprises are juggling too many tools with limited interconnectivity. Each framework requires separate security approvals, authentication setups, and compliance documentation. Choose poorly, and your team spends months building infrastructure instead of intelligent agents.

The infrastructure tax is real. Teams report spending 80% of their time building data connectors rather than training agents, stretching projects from weeks into months. Returns, meanwhile, often lag expectations: workflows are fragmented, integration is insufficient, AI capabilities are misaligned with business processes, and AI tools operate in silos, producing modest productivity gains that fall short of initial projections.

80% of enterprises prefer AI hosted inside their own AWS cloud for compliance and data sovereignty reasons, and for regulated industries this is non-negotiable. When evaluating LangChain, AutoGen, CrewAI, or LlamaIndex, the first question is deployment flexibility.

LangChain offers more than 600 integrations and connects to virtually every major LLM, tool, and database through a standardized interface, which gives it considerable deployment versatility. LangGraph, an open-source library in the LangChain ecosystem, is designed for building stateful, multi-actor LLM applications and adds the ability to define and manage cyclical graphs; a companion platform streamlines deployment and scaling.

For private cloud deployments, ask: Can I run this framework entirely within my VPC? Does it require external API calls that expose data? What telemetry leaves my environment?
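The first two questions can be partly automated as a pre-deployment check. Below is a minimal sketch using only Python's standard library that flags any configured endpoint whose host is neither a private IP address nor an internal hostname. The config keys, the `INTERNAL_SUFFIXES` allowlist, and the URLs are hypothetical, and the check does not resolve DNS, so a network-level egress policy is still needed.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical DNS suffixes for services that run inside the VPC.
INTERNAL_SUFFIXES = (".internal", ".svc.cluster.local")

def is_inside_vpc(url: str) -> bool:
    """Return True if the URL's host is a private IP or an allowlisted internal hostname."""
    host = urlparse(url).hostname or ""
    try:
        return ipaddress.ip_address(host).is_private
    except ValueError:
        # Not an IP literal: fall back to the internal-suffix allowlist.
        return host.endswith(INTERNAL_SUFFIXES)

def external_endpoints(config: dict) -> list:
    """List config entries whose endpoint would send data outside the VPC."""
    return [name for name, url in config.items() if not is_inside_vpc(url)]

# Hypothetical agent configuration.
agent_config = {
    "llm": "http://10.0.12.5:8000/v1",
    "vector_store": "http://qdrant.svc.cluster.local:6333",
    "telemetry": "https://telemetry.example.com/ingest",
}
print(external_endpoints(agent_config))  # ['telemetry']
```

Running a check like this in CI catches the common case of a default telemetry or hosted-model endpoint slipping into a configuration that is supposed to stay private.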

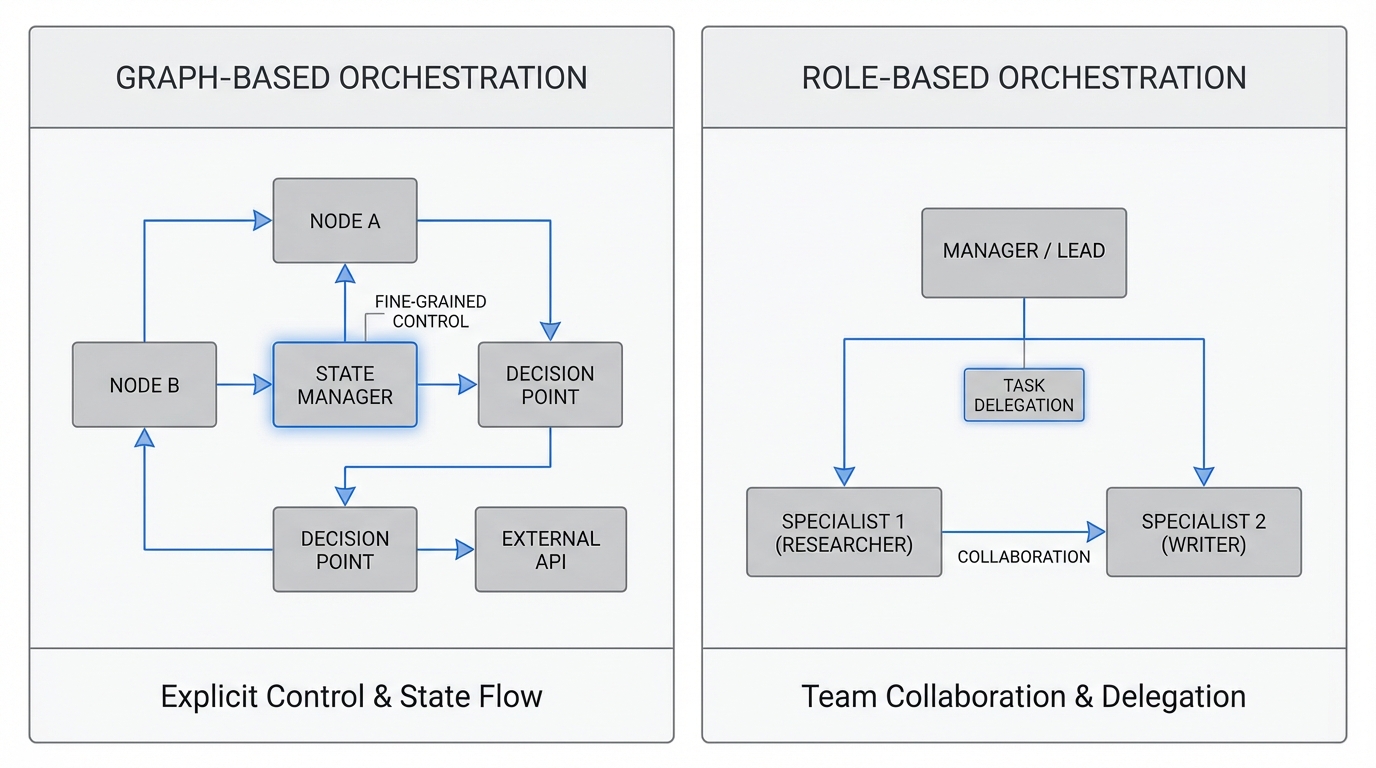

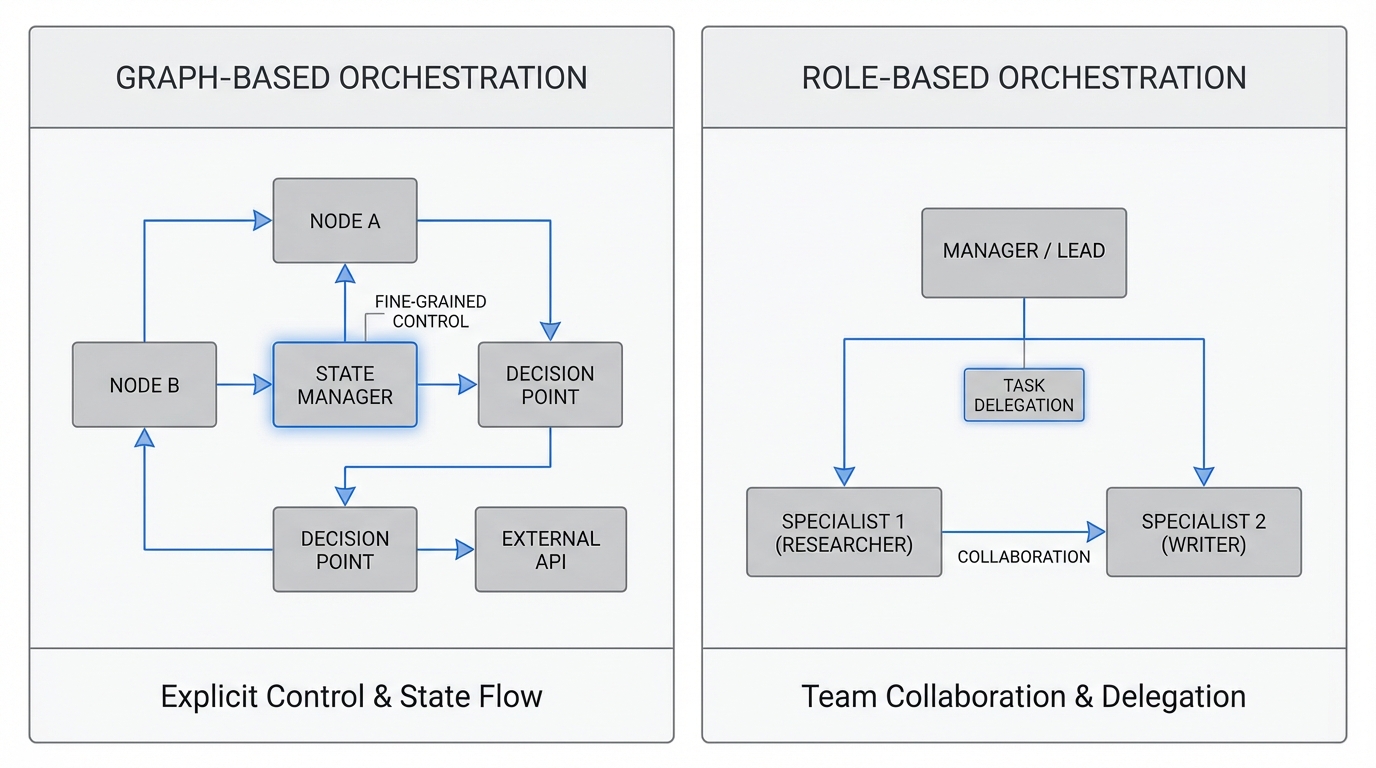

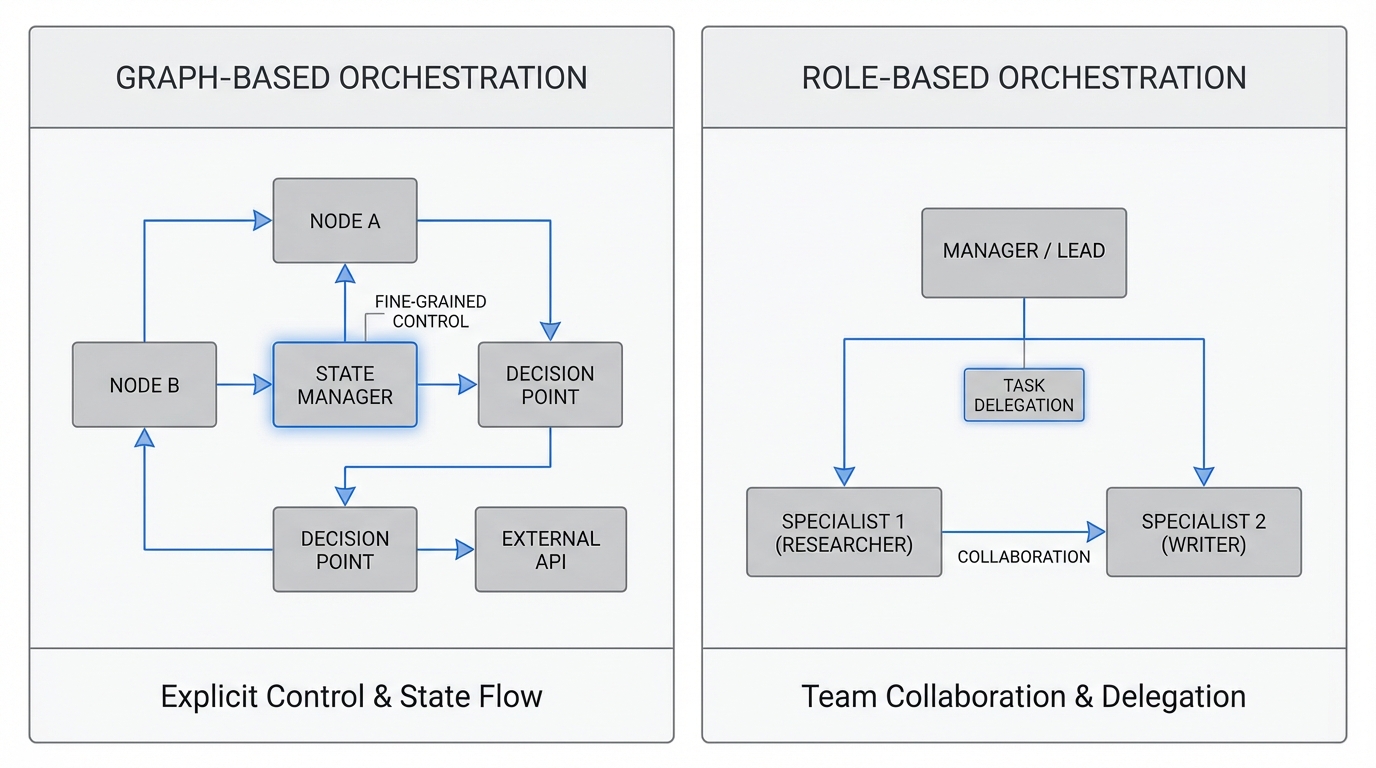

CrewAI adopts a role-based model inspired by real-world organizational structures, LangGraph embraces a graph-based workflow approach, and AutoGen focuses on conversational collaboration. These architectural differences shape how much control, structure, and development speed each framework offers.

AutoGen treats workflows as conversations between agents, while LangGraph represents them as a graph with nodes and edges, offering a more visual and structured approach to workflow management. CrewAI runs at a higher level of abstraction, allowing developers to focus on role assignment and goal specification, with built-in functionalities for task delegation, sequencing, and state management.
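To make the graph model concrete, here is a minimal, dependency-free Python sketch of a state graph: nodes return partial state updates, routing functions choose the next node, and a conditional edge creates a cycle. It is not LangGraph's API; the `Graph` class, the `END` sentinel, and the `draft`/`review` nodes are hypothetical. A conversation-based framework would instead express the same loop as messages passed between a writer agent and a reviewer agent.

```python
from typing import Callable

END = "__end__"

class Graph:
    """Tiny illustrative state graph: nodes update shared state, routers pick the next node."""

    def __init__(self) -> None:
        self.nodes: dict = {}
        self.routers: dict = {}

    def add_node(self, name: str, fn: Callable[[dict], dict]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, router: Callable[[dict], str]) -> None:
        # The router receives the current state and returns the next node name (or END).
        self.routers[src] = router

    def run(self, start: str, state: dict, max_steps: int = 20) -> dict:
        node = start
        for _ in range(max_steps):
            state = {**state, **self.nodes[node](state)}  # merge the node's partial update
            node = self.routers[node](state)
            if node == END:
                return state
        raise RuntimeError("max_steps exceeded; possible infinite cycle")

# Hypothetical draft/review loop: the cycle repeats until the reviewer approves.
def draft(state: dict) -> dict:
    return {"draft": state.get("draft", "") + "x", "revisions": state.get("revisions", 0) + 1}

def review(state: dict) -> dict:
    return {"approved": len(state["draft"]) >= 3}

g = Graph()
g.add_node("draft", draft)
g.add_node("review", review)
g.add_edge("draft", lambda s: "review")
g.add_edge("review", lambda s: END if s["approved"] else "draft")

result = g.run("draft", {})
print(result["revisions"], result["approved"])  # 3 True
```

Because the routing logic is explicit code rather than something inferred from chat turns, every possible path through the workflow can be read, tested, and bounded (here by `max_steps`).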

For complex enterprise workflows requiring fine-grained control and state management, graph-based approaches provide explicit orchestration. For rapid prototyping with team-based collaboration patterns, role-based frameworks accelerate development. Teams implementing multi-agent orchestration patterns should evaluate how each framework's architecture aligns with their operational complexity.

All three frameworks provide extensive API support and integration with external tools, with CrewAI providing built-in integrations for common cloud services, LangGraph benefiting from the entire LangChain ecosystem, and AutoGen prioritizing tool usage within conversations.

But integration breadth differs significantly. AutoGen offers essential pre-built extensions, but its library is younger and smaller than LangChain's 600+ integrations. Early CrewAI releases were built on LangChain; current releases are independent of it but can still use LangChain tools, so both LangGraph and CrewAI gain an edge from LangChain's comprehensive tool catalog.

Evaluate your existing tech stack. If you're deeply embedded in enterprise systems (Salesforce, SAP, Snowflake), prioritize frameworks with proven connectors. Custom integration work compounds quickly.
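One way to contain connector work is to write each integration once as a plain function behind a framework-neutral interface, then add a thin adapter per framework. A minimal sketch in Python; the `Tool` and `ToolRegistry` classes and the `lookup_account` connector are hypothetical and do not correspond to any framework's tool class.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

@dataclass
class Tool:
    """Framework-neutral tool description (hypothetical shape, not any library's class)."""
    name: str
    description: str
    func: Callable[..., Any]

@dataclass
class ToolRegistry:
    tools: dict = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        if tool.name in self.tools:
            raise ValueError(f"duplicate tool name: {tool.name}")
        self.tools[tool.name] = tool

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        return self.tools[name].func(**kwargs)

# Hypothetical connector; a real one would call the CRM's API with scoped credentials.
def lookup_account(account_id: str) -> dict:
    return {"id": account_id, "tier": "enterprise"}

registry = ToolRegistry()
registry.register(Tool("lookup_account", "Fetch a CRM account by ID", lookup_account))
print(registry.call("lookup_account", account_id="A-42"))
```

The design choice here is that the connector logic never imports a framework, so switching or adding frameworks means rewriting only the small wrapper that maps `Tool` onto that framework's tool type.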

Memory support varies significantly across frameworks, with CrewAI using structured, role-based memory with RAG support, LangGraph providing state-based memory with checkpointing for workflow continuity, and AutoGen focusing on conversation-based memory maintaining dialogue history.

State persistence determines whether your agents can handle long-running workflows, recover from failures, and maintain context across sessions. CrewAI persists state through task outputs, which keeps transitions straightforward but limits debugging options. AutoGen maintains agent memory well, supports flexible state transitions, and aids debugging through conversation tracking.

For enterprise deployments, checkpointing, rollback capabilities, and audit trails aren't optional. They're the difference between prototype and production.
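A minimal sketch of checkpointing with rollback and an append-only history, using only Python's standard library (`sqlite3`, `json`). The `Checkpointer` class and its schema are hypothetical and are not LangGraph's checkpointer API; they illustrate two ideas: each step's state is persisted so a run can resume after a failure, and rolling back appends a restored state rather than deleting history, which keeps the audit trail intact.

```python
import json
import sqlite3
from typing import Optional

class Checkpointer:
    """Append-only store of workflow state, keyed by (thread_id, step)."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT, step INTEGER, state TEXT, "
            "PRIMARY KEY (thread_id, step))"
        )

    def save(self, thread_id: str, step: int, state: dict) -> None:
        with self.db:  # the connection context manager commits on success
            self.db.execute(
                "INSERT INTO checkpoints VALUES (?, ?, ?)",
                (thread_id, step, json.dumps(state)),
            )

    def latest(self, thread_id: str) -> Optional[tuple]:
        row = self.db.execute(
            "SELECT step, state FROM checkpoints WHERE thread_id = ? "
            "ORDER BY step DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return (row[0], json.loads(row[1])) if row else None

    def rollback(self, thread_id: str, to_step: int) -> int:
        """Restore an earlier state by appending it as a new step; nothing is deleted."""
        row = self.db.execute(
            "SELECT state FROM checkpoints WHERE thread_id = ? AND step = ?",
            (thread_id, to_step),
        ).fetchone()
        if row is None:
            raise KeyError(f"no checkpoint {to_step} for {thread_id}")
        new_step = self.latest(thread_id)[0] + 1
        self.save(thread_id, new_step, json.loads(row[0]))
        return new_step

cp = Checkpointer()
cp.save("ticket-7", 1, {"plan": "refund"})
cp.save("ticket-7", 2, {"plan": "refund", "tool_result": "timeout"})
new_step = cp.rollback("ticket-7", 1)
print(new_step, cp.latest("ticket-7"))  # 3 (3, {'plan': 'refund'})
```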

The security landscape for AI agents extends beyond traditional application security. In 2024, 73% of AI agent implementations in European companies had at least one GDPR compliance vulnerability, and GDPR fines for the most serious violations can reach €20 million or 4% of global annual turnover, whichever is higher.

Specific risks of AI agents include data leakage between users, prompt injection, model poisoning, and inadvertent PII exposure in responses. Framework selection must account for built-in security primitives: input validation, output sanitization, data isolation between sessions, and audit logging.
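A minimal sketch of three of those primitives (input validation, output sanitization, and audit logging) wrapped around an arbitrary agent callable, assuming Python and only the standard library. The regexes, the 4,000-character limit, and the `handle`/`echo_agent` names are illustrative assumptions. Regex redaction catches only simple email and phone formats and does nothing against prompt injection, so treat this as a guide to where controls sit rather than a complete defense.

```python
import re
import time

# Illustrative patterns only; production systems need dedicated PII detection.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
MAX_INPUT_CHARS = 4000  # hypothetical limit

audit_log: list = []

def validate_input(text: str) -> str:
    """Reject oversized input and strip non-printable control characters."""
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input exceeds MAX_INPUT_CHARS")
    return "".join(ch for ch in text if ch.isprintable() or ch in "\n\t")

def sanitize_output(text: str) -> str:
    """Mask email addresses and simple phone numbers before the reply leaves the system."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))

def handle(session_id: str, user_text: str, agent) -> str:
    clean = validate_input(user_text)
    raw = agent(clean)
    reply = sanitize_output(raw)
    # Log metadata rather than content, tagged with the session ID for scoped review.
    audit_log.append({"ts": time.time(), "session": session_id,
                      "input_chars": len(clean), "redacted": reply != raw})
    return reply

def echo_agent(prompt: str) -> str:  # hypothetical stand-in for a real agent
    return f"Contact jane.doe@example.com or 555-123-4567 about: {prompt}"

print(handle("s-1", "refund status\x00", echo_agent))
# Contact [EMAIL] or [PHONE] about: refund status
```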

AI governance platforms help meet GDPR requirements by ensuring agents access only the data they need, enforcing least privilege, and blocking unauthorized sharing, while audit trails and real-time governance provide proof of compliance and protect privacy.

Ask: Does the framework provide native security controls? Can I implement row-level security? How are credentials managed? What audit capabilities exist out of the box?
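A minimal sketch of least privilege at the tool layer, assuming Python: each agent role gets an explicit allowlist of tools, and the data-access function applies a row-level filter by tenant before anything reaches the model. `Principal`, `TOOL_POLICY`, and the in-memory `ORDERS` table are hypothetical. In a real deployment the filter would usually live in the database (for example, as a row-level security policy), and credentials would be injected by the tool layer rather than placed in prompts.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Principal:
    agent_role: str
    tenant_id: str

# Hypothetical policy: which tools each agent role may invoke.
TOOL_POLICY = {
    "support_agent": {"lookup_order", "create_ticket"},
    "analyst_agent": {"lookup_order", "run_report"},
}

ORDERS = [
    {"order_id": 1, "tenant_id": "acme", "total": 120},
    {"order_id": 2, "tenant_id": "globex", "total": 75},
    {"order_id": 3, "tenant_id": "acme", "total": 42},
]

def authorize(principal: Principal, tool: str) -> None:
    """Deny by default: unknown roles get an empty allowlist."""
    if tool not in TOOL_POLICY.get(principal.agent_role, set()):
        raise PermissionError(f"{principal.agent_role} may not call {tool}")

def lookup_orders(principal: Principal) -> list:
    authorize(principal, "lookup_order")
    # Row-level filter: the agent only ever sees rows for its own tenant.
    return [row for row in ORDERS if row["tenant_id"] == principal.tenant_id]

p = Principal("support_agent", "acme")
print([r["order_id"] for r in lookup_orders(p)])  # [1, 3]
```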

In ease-of-learning comparisons, CrewAI ranks second: developers need to understand tools and roles, and its documentation is well structured and beginner-friendly. AutoGen is trickier: it requires more manual setup built around chat conversations, and its documentation is confusing across versions.

Developer experience compounds. AutoGen AgentChat provides the most parsimonious API, allowing you to replicate complex agents in 50 lines of code. Meanwhile, LangGraph offers deep customization through graph structures best suited for cyclical workflows, but the learning curve is steep.

Evaluate your team's composition. If you have experienced ML engineers comfortable with low-level control, flexibility matters more than simplicity. If you need business analysts building workflows, high-level abstractions accelerate value delivery.

CrewAI scales through horizontal agent replication and task parallelization within role hierarchies, LangGraph scales through distributed graph execution and parallel node processing, and AutoGen scales through conversation sharding and distributed chat management.
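A minimal sketch of task parallelization with bounded concurrency, using Python's `asyncio`. It does not reproduce how CrewAI, LangGraph, or AutoGen schedule work internally. `run_agent_task` is a hypothetical stand-in for an LLM or tool call, and the semaphore caps concurrent calls so that fan-out respects provider rate limits and cost budgets.

```python
import asyncio

async def run_agent_task(task_id: int) -> str:
    # Stand-in for an LLM or tool call; real work would be network-bound.
    await asyncio.sleep(0.01)
    return f"task-{task_id}:done"

async def run_parallel(task_ids, max_concurrency: int = 4) -> list:
    sem = asyncio.Semaphore(max_concurrency)  # at most this many tasks in flight

    async def bounded(tid: int) -> str:
        async with sem:
            return await run_agent_task(tid)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(bounded(t) for t in task_ids))

results = asyncio.run(run_parallel(range(10)))
print(results[:2])  # ['task-0:done', 'task-1:done']
```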

Production performance also varies significantly. In one set of benchmark comparisons, PydanticAI appeared to be the fastest, followed by the OpenAI Agents SDK, LlamaIndex, AutoGen, LangGraph, and Google ADK.

Reported enterprise implementations show latencies ranging from 140 ms to 1.3 s under heavy multi-session load. For customer-facing applications, sub-second response times aren't aspirational; they're table stakes.
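Because averages hide tail latency, it helps to check percentiles against an explicit budget. A minimal sketch using `statistics.quantiles` from the Python standard library; the sample data is synthetic (not measured), and the 1,000 ms p95 budget is an assumption chosen to match a sub-second target.

```python
import statistics

def latency_summary(samples_ms: list) -> dict:
    """Return p50/p95/p99 and max; needs at least two samples."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    # With n=100, cuts[k - 1] is the k-th percentile cut point.
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98], "max": max(samples_ms)}

def meets_slo(summary: dict, p95_budget_ms: float = 1000.0) -> bool:
    return summary["p95"] <= p95_budget_ms

# Synthetic samples spanning 140 ms to 1,130 ms.
samples = [140 + 10 * i for i in range(100)]
summary = latency_summary(samples)
print(summary, meets_slo(summary))
```

In this synthetic case the median sits well under a second while the p95 does not, which is exactly the situation an average-only dashboard would miss.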

CrewAI's documentation highlights Flows as a structure for tracing and debugging and recommends enabling tracing for observability. LangGraph emphasizes orchestration capabilities such as durable execution and human-in-the-loop support, and LangChain agents build on those capabilities.

Without comprehensive observability, debugging multi-agent systems becomes archaeological work. You need visibility into: agent decision paths, tool invocations, state transitions, token consumption, latency breakdown by component, and error propagation.

Frameworks with built-in tracing and integrations with observability platforms (Datadog, New Relic, or custom tooling) can cut mean time to resolution from hours to minutes.
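A minimal sketch of span-style tracing with a Python decorator: each tool or model call records its kind, name, status, duration, and token count. The `traced` decorator, the `SPANS` list, and the stub functions are hypothetical. A production setup would typically export spans through a standard such as OpenTelemetry to an observability backend and link parent and child spans so errors can be followed across agents.

```python
import functools
import time
import uuid

SPANS: list = []  # in practice, spans would be exported to an observability backend

def traced(kind: str):
    """Record one span per call: what ran, how long it took, and whether it failed."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = {"id": uuid.uuid4().hex[:8], "kind": kind, "name": fn.__name__}
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
                span["status"] = "ok"
                if isinstance(result, dict) and "tokens" in result:
                    span["tokens"] = result["tokens"]
                return result
            except Exception as exc:
                span["status"] = f"error: {exc!r}"
                raise  # record the failure, then let it propagate
            finally:
                span["duration_ms"] = (time.perf_counter() - start) * 1000
                SPANS.append(span)
        return wrapper
    return decorator

@traced("tool")
def search_kb(query: str) -> dict:  # hypothetical tool
    return {"hits": 3, "tokens": 0}

@traced("llm")
def call_model(prompt: str) -> dict:  # hypothetical model call
    return {"text": "answer", "tokens": 57}

search_kb("refund policy")
call_model("Summarize the refund policy")
for s in SPANS:
    print(s["kind"], s["name"], s["status"], s.get("tokens"))
# tool search_kb ok 0
# llm call_model ok 57
```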

AutoGen's repository now directs newcomers to Microsoft Agent Framework and states that AutoGen will continue to receive bug fixes and critical security patches. Microsoft describes Agent Framework as an open-source kit that brings together and extends ideas from Semantic Kernel and AutoGen.

Framework longevity and migration paths matter. Open-source frameworks with permissive licenses preserve optionality, while managed platforms with proprietary extensions create dependencies. CrewAI pairs its open-source framework with commercial licensing and enterprise support options.

Evaluate: Can I export agent definitions? Are prompts portable? Can I swap LLM providers without rewriting code? What's the migration cost if this framework gets deprecated?
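One way to keep LLM providers swappable is to make agent code depend on a small interface rather than on any vendor SDK. A minimal sketch using Python's `typing.Protocol`; `ChatModel`, `EchoModel`, `UpperModel`, and `triage` are hypothetical, and a real provider would be a thin adapter wrapping its vendor's client. Keeping prompts as data (here, the `PROMPTS` dict) also makes them easier to export and version.

```python
from typing import Protocol

class ChatModel(Protocol):
    def complete(self, system: str, user: str) -> str: ...

class EchoModel:
    """Offline stand-in, useful in tests."""
    def complete(self, system: str, user: str) -> str:
        return f"[echo] {user}"

class UpperModel:
    """A second stand-in, to show swapping providers without touching agent code."""
    def complete(self, system: str, user: str) -> str:
        return user.upper()

# Prompts kept as data rather than buried in framework-specific classes.
PROMPTS = {"triage": "Classify the ticket as billing, technical, or other."}

def triage(ticket: str, model: ChatModel) -> str:
    return model.complete(PROMPTS["triage"], ticket)

for model in (EchoModel(), UpperModel()):
    print(triage("card declined", model))
# [echo] card declined
# CARD DECLINED
```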

57% of companies already have AI agents in production with 22% in pilot and 21% in pre-pilot, with mature vendors treating agents as real operating infrastructure, not experiments.

LangChain offers flexibility, LlamaIndex excels at data retrieval, AutoGen supports complex multi-agent workflows, and CrewAI simplifies team orchestration. Match framework capabilities to your current stage.

For rapid experimentation, prioritize speed and simplicity. For production deployments at scale, prioritize robustness, observability, and enterprise-grade security. A 1-week POC with 10 realistic tasks, fixed tools, fixed model, a clear rubric, and measurable budgets for cost and failure modes provides concrete validation.
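The POC described above can be made concrete with a small harness that runs a fixed task set against one agent configuration and checks the results against a rubric and budgets. A minimal, framework-agnostic sketch in Python; `Task`, `Budget`, `evaluate`, and `fake_agent` are hypothetical, and the arithmetic tasks stand in for the realistic tasks you would write for your own domain.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Task:
    prompt: str
    check: Callable[[str], bool]  # rubric: does this output pass?

@dataclass
class Budget:
    max_cost_usd: float
    max_failure_rate: float

def evaluate(run: Callable[[str], tuple], tasks: list, budget: Budget) -> dict:
    """Run each task once with fixed tools and model; `run` returns (output, cost_usd)."""
    passed, errors, cost = 0, 0, 0.0
    for task in tasks:
        try:
            output, task_cost = run(task.prompt)
        except Exception:
            errors += 1  # crashes and timeouts count as failures
            continue
        cost += task_cost
        if task.check(output):
            passed += 1
    failure_rate = 1 - passed / len(tasks)
    return {
        "pass_rate": passed / len(tasks),
        "errors": errors,
        "cost_usd": round(cost, 4),
        "within_budget": cost <= budget.max_cost_usd
        and failure_rate <= budget.max_failure_rate,
    }

# Ten toy tasks and a fake agent that times out on one of them.
tasks = [Task(f"{i}+{i}", lambda out, i=i: out == str(2 * i)) for i in range(10)]

def fake_agent(prompt: str) -> tuple:
    a, b = prompt.split("+")
    if a == "7":
        raise TimeoutError("tool timeout")
    return str(int(a) + int(b)), 0.002

print(evaluate(fake_agent, tasks, Budget(max_cost_usd=0.05, max_failure_rate=0.2)))
# {'pass_rate': 0.9, 'errors': 1, 'cost_usd': 0.018, 'within_budget': True}
```

Running the same harness against each candidate framework, with the model and tools held fixed, turns the comparison into numbers rather than impressions.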

In early 2024, Klarna's customer-service AI assistant handled roughly two-thirds of incoming support chats in its first month, some 2.3 million conversations. It cut average resolution time from about 11 minutes to under 2 minutes, did work equivalent to about 700 full-time agents, and was estimated to drive a ~$40M profit improvement. Esusu automated 64% of email-based customer interactions, achieved approximately 80% one-touch responses, and recorded a 10-point CSAT lift.

These outcomes required matching framework capabilities to business requirements. Customer service workloads demand high concurrency, stateless interactions, and rapid response times. Organizations deploying AI-powered customer service agents require frameworks that excel at conversation management and real-time response generation. Knowledge work applications need sophisticated memory, tool integration, and human-in-the-loop workflows.

Enterprise deployments report 68% deflection on employee requests and 43% autonomous resolution, but only when frameworks align with infrastructure realities and security requirements.

If you want more structure and an observability-first posture, CrewAI can be compelling; more broadly, teams that benefit from clear orchestration structure and traceability find that graph- or flow-oriented approaches reduce operational ambiguity.

Choose AutoGen when you want flexible multi-agent coordination and are comfortable designing the constraints yourself. Choose CrewAI when you want an opinionated structure of crews plus flows for collaboration, with stronger guardrails out of the box.
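Designing constraints yourself in a conversation-based system usually means at least a turn cap and an explicit stop condition. A minimal sketch of a round-robin agent conversation with both, assuming Python; it is not AutoGen's API (AutoGen provides its own termination conditions), and the `writer` and `critic` functions are hypothetical stand-ins for LLM-backed agents.

```python
from typing import Callable

Agent = Callable[[list], str]

def converse(agents: dict, order: list, task: str,
             max_turns: int = 6, stop_word: str = "APPROVED") -> list:
    """Round-robin chat with two explicit constraints: a turn cap and a stop condition."""
    history = [{"speaker": "user", "text": task}]
    for turn in range(max_turns):
        name = order[turn % len(order)]
        reply = agents[name](history)
        history.append({"speaker": name, "text": reply})
        if stop_word in reply:
            break
    return history

def writer(history: list) -> str:
    drafts = sum(1 for m in history if m["speaker"] == "writer")
    return f"draft v{drafts + 1}"

def critic(history: list) -> str:
    last = history[-1]["text"]
    return "APPROVED" if last.endswith("v2") else "revise: add detail"

log = converse({"writer": writer, "critic": critic}, ["writer", "critic"], "Write a release note")
print([m["text"] for m in log])
# ['Write a release note', 'draft v1', 'revise: add detail', 'draft v2', 'APPROVED']
```

Without the `max_turns` cap, a critic that never approves would keep the conversation (and the token bill) running indefinitely; that is the kind of rail an opinionated framework may supply for you.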

Start with a structured evaluation:

Do not confuse framework choice with production readiness; your engineering practices matter more.

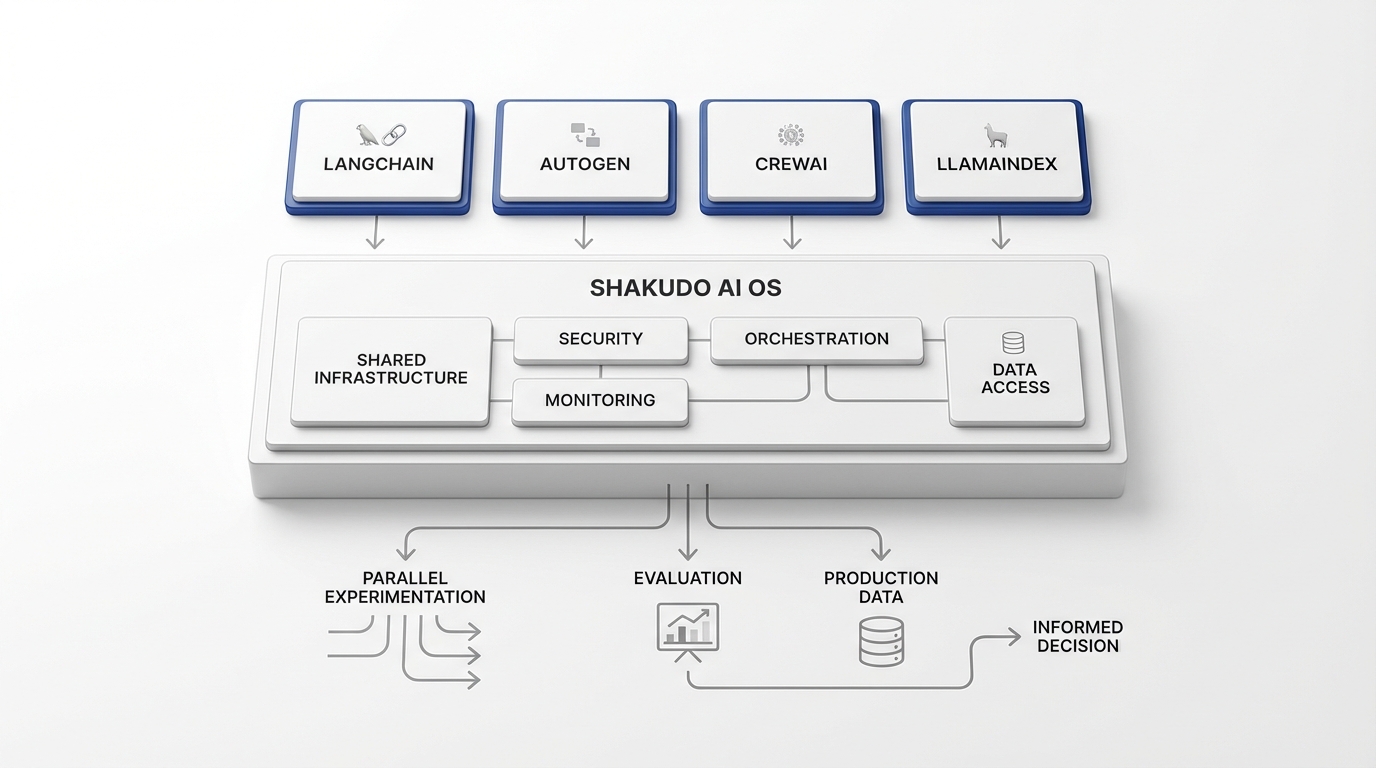

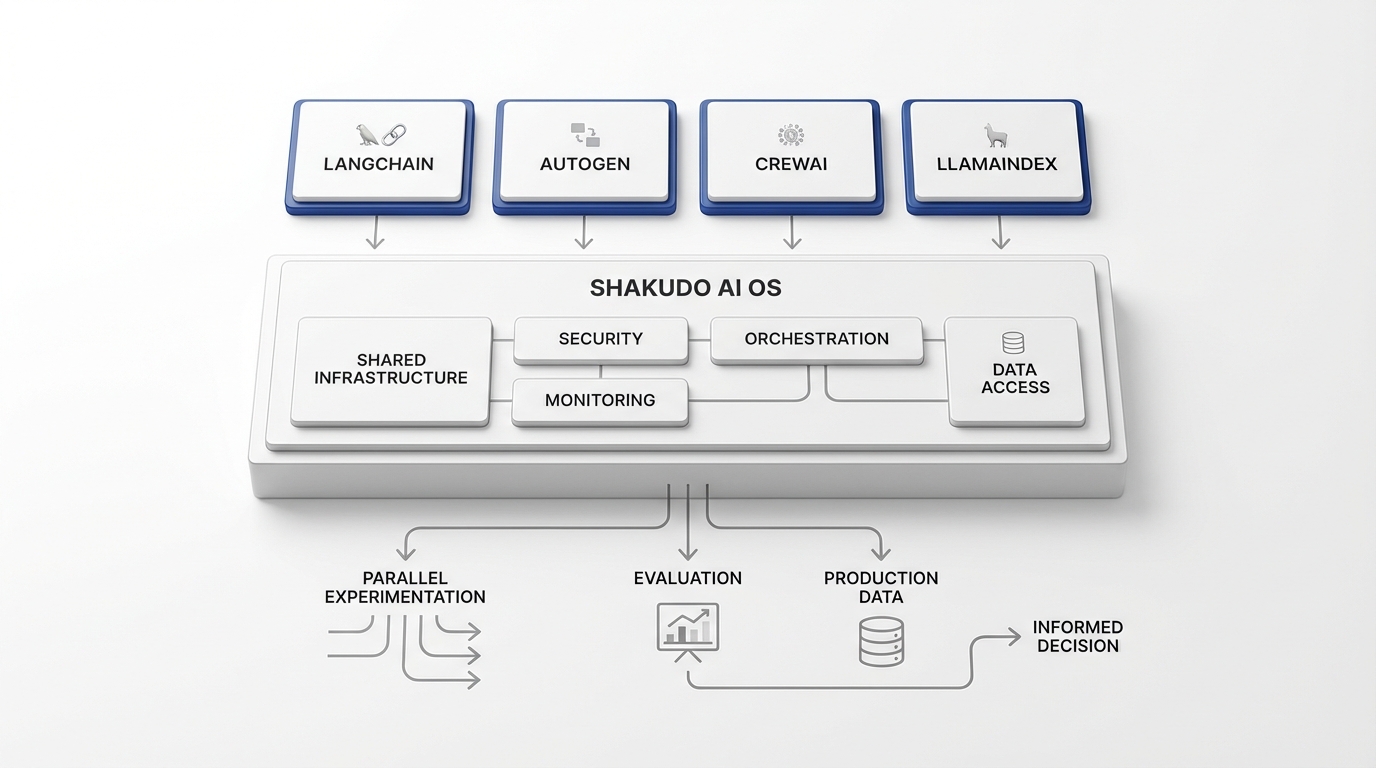

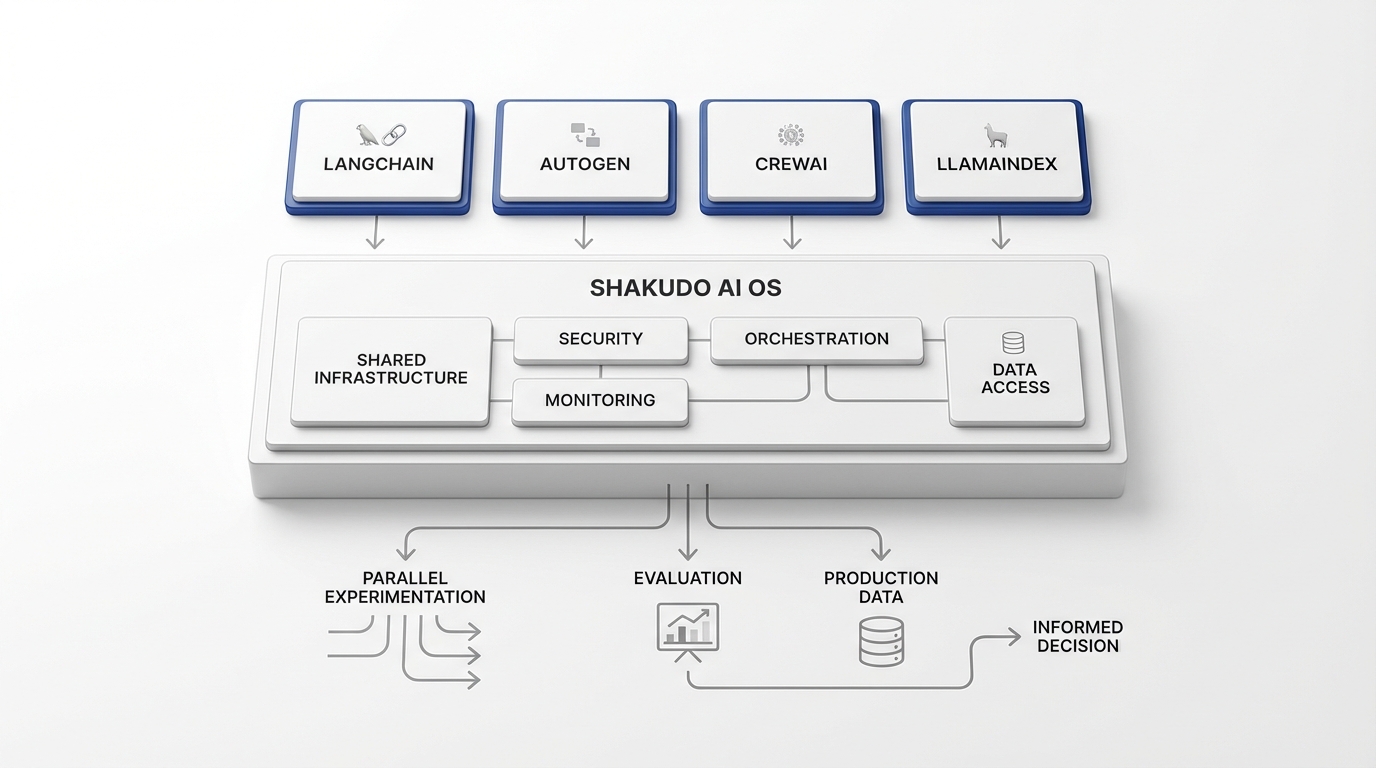

The framework selection dilemma stems from a false premise: that you must choose one framework and commit entirely. Shakudo provides a fundamentally different approach.

Rather than forcing teams to standardize on a single framework before understanding their requirements, Shakudo's AI operating system supports LangChain, AutoGen, CrewAI, and LlamaIndex out-of-the-box. Teams can experiment with multiple frameworks simultaneously, evaluate them against real use cases, and make informed decisions based on production data rather than vendor claims.

Shakudo addresses the pain points behind the projected cancellation of over 40% of agentic AI projects:

- Data sovereignty by default: Deploy any framework within your private cloud infrastructure, ensuring compliance with GDPR, HIPAA, and SOC 2 requirements without framework-specific security hardening.
- Pre-integrated infrastructure: Authentication, orchestration, monitoring, and governance layers work across all frameworks, eliminating the 80% of time teams waste building connectors and plumbing.
- Framework portability: Agent definitions, tool integrations, and deployment configurations remain portable. Migrate between frameworks without rewriting your entire codebase.
- Production-grade observability: Unified monitoring and debugging across frameworks, whether you're running LangGraph workflows or AutoGen conversations.

This lets engineering teams focus on the question that actually matters, which framework best solves this specific business problem, rather than which framework their infrastructure team can support.

Framework selection is a critical decision point, but it shouldn't paralyze progress. All five vendors expect AI agents to manage a significantly larger share of workflows within the next six months, and Gartner predicts that by 2028 at least 15% of day-to-day work decisions will be made autonomously through agentic AI, up from 0% in 2024.

The window for competitive advantage is narrowing. Organizations that establish robust AI agent capabilities in 2025 will define their industries in 2027. Those still debating framework selection in 2027 will be explaining to boards why competitors are operating at lower costs with higher customer satisfaction.

Start with clarity on your requirements. Validate with time-boxed experiments. Choose frameworks that align with your team's capabilities and your organization's constraints. And critically, select infrastructure that provides flexibility rather than lock-in.

The best framework is the one that ships value to production. Everything else is just architecture.

Seventy-nine percent of organizations have adopted AI agents, with 66% reporting measurable value through increased productivity. Yet beneath these promising numbers lies a sobering reality: Gartner predicts over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

The difference between these two outcomes often comes down to a single upstream decision: which AI agent framework you choose. With enterprise-focused agentic AI expanding from $2.58 billion in 2024 to $24.50 billion by 2030 at a 46.2% CAGR, selecting the right foundation has never been more consequential.

Enterprise teams face a paradox. Twenty-three percent of respondents report their organizations are scaling an agentic AI system somewhere in their enterprises, with an additional 39% experimenting with AI agents, though most are only doing so in one or two functions. The challenge isn't lack of interest; it's the compounding cost of wrong decisions.

Security vulnerabilities plague 62% of practitioners who identify security as their top challenge, while 63% of executives cite platform sprawl as a growing concern, suggesting enterprises are juggling too many tools with limited interconnectivity. Each framework requires separate security approvals, authentication setups, and compliance documentation. Choose poorly, and your team spends months building infrastructure instead of intelligent agents.

The infrastructure tax is real. Teams report spending 80% of their time building data connectors rather than training agents, stretching projects from weeks into months. Meanwhile, returns often lag behind expectations due to fragmented workflows, insufficient integration, and misalignment between AI capabilities and business processes, with AI tools operating in silos and producing modest productivity gains that fall short of initial projections.

80% of enterprises prefer AI hosted inside their AWS cloud for compliance and data sovereignty reasons. This isn't negotiable for regulated industries. When evaluating LangChain, AutoGen, CrewAI, or LlamaIndex, the first question is deployment flexibility.

LangChain has over 600+ integrations and can connect to virtually every major LLM, tool, and database via a standardized interface, offering deployment versatility. LangGraph is an open-source library within the LangChain ecosystem designed for building stateful, multi-actor applications powered by LLMs, introducing the ability to create and manage cyclical graphs, with a platform designed to streamline deployment and scaling.

For private cloud deployments, ask: Can I run this framework entirely within my VPC? Does it require external API calls that expose data? What telemetry leaves my environment?

CrewAI adopts a role-based model inspired by real-world organizational structures, LangGraph embraces a graph-based workflow approach, and AutoGen focuses on conversational collaboration. These architectural differences have profound implications.

AutoGen treats workflows as conversations between agents, while LangGraph represents them as a graph with nodes and edges, offering a more visual and structured approach to workflow management. CrewAI runs at a higher level of abstraction, allowing developers to focus on role assignment and goal specification, with built-in functionalities for task delegation, sequencing, and state management.

For complex enterprise workflows requiring fine-grained control and state management, graph-based approaches provide explicit orchestration. For rapid prototyping with team-based collaboration patterns, role-based frameworks accelerate development. Teams implementing multi-agent orchestration patterns should evaluate how each framework's architecture aligns with their operational complexity.

All three frameworks provide extensive API support and integration with external tools, with CrewAI providing built-in integrations for common cloud services, LangGraph benefiting from the entire LangChain ecosystem, and AutoGen prioritizing tool usage within conversations.

But integration breadth differs dramatically. AutoGen offers essential pre-built extensions but its library is younger compared to LangChain's 600+ integrations. Crew AI is built over Langchain giving access to all of their tools, and LangGraph and Crew have an edge due to their seamless integration with LangChain, which offers a comprehensive range of tools.

Evaluate your existing tech stack. If you're deeply embedded in enterprise systems (Salesforce, SAP, Snowflake), prioritize frameworks with proven connectors. Custom integration work compounds quickly.

Memory support varies significantly across frameworks, with CrewAI using structured, role-based memory with RAG support, LangGraph providing state-based memory with checkpointing for workflow continuity, and AutoGen focusing on conversation-based memory maintaining dialogue history.

State persistence determines whether your agents can handle long-running workflows, recover from failures, and maintain context across sessions. CrewAI handles state persistence through task outputs with straightforward transitions but limited debugging options, while AutoGen maintains agent memory well, with flexible state transitions and debugging supported with conversation tracking.

For enterprise deployments, checkpointing, rollback capabilities, and audit trails aren't optional. They're the difference between prototype and production.

The security landscape for AI agents extends beyond traditional application security. 73% of AI Agent implementations in European companies during 2024 presented some GDPR compliance vulnerability, with sanctions reaching 4% of global annual revenue.

Specific risks of AI Agents include data leakage between users, prompt injection, model poisoning, and inadvertent PII exposure in responses. Framework selection must account for built-in security primitives: input validation, output sanitization, data isolation between sessions, and audit logging.

AI governance platforms help meet GDPR by ensuring AI agents access only necessary data, enforcing least privilege, blocking unauthorized sharing, with audit trails and real-time governance to prove compliance and protect privacy.

Ask: Does the framework provide native security controls? Can I implement row-level security? How are credentials managed? What audit capabilities exist out of the box?

CrewAI is the second easiest requiring understanding of tools and roles with well-structured beginner-friendly docs, while AutoGen is tricky needing manual setup working around chat conversations with confusing versioning in documents.

Developer experience compounds. AutoGen AgentChat provides the most parsimonious API, allowing you to replicate complex agents in 50 lines of code. Meanwhile, LangGraph offers deep customization through graph structures best suited for cyclical workflows, but the learning curve is steep.

Evaluate your team's composition. If you have experienced ML engineers comfortable with low-level control, flexibility matters more than simplicity. If you need business analysts building workflows, high-level abstractions accelerate value delivery.

CrewAI scales through horizontal agent replication and task parallelization within role hierarchies, LangGraph scales through distributed graph execution and parallel node processing, and AutoGen scales through conversation sharding and distributed chat management.

Production performance varies significantly. PydanticAI implementation seems to be the fastest followed by OpenAI agents sdk, llamaindex, AutoGen, LangGraph, Google ADK based on benchmark comparisons.

Enterprise implementations deliver latency ranging from 140 ms to 1.3 s under heavy multi-session load. For customer-facing applications, sub-second response times aren't aspirational; they're table stakes.

CrewAI highlights flow structure for tracing and debugging with recommended tracing for observability, while LangGraph emphasizes orchestration capabilities like durability and human-in-the-loop, and LangChain agents build on that.

Without comprehensive observability, debugging multi-agent systems becomes archaeological work. You need visibility into: agent decision paths, tool invocations, state transitions, token consumption, latency breakdown by component, and error propagation.

Frameworks with built-in tracing and integration with observability platforms (DataDog, New Relic, custom tooling) reduce mean time to resolution from hours to minutes.

AutoGen's repository includes a note recommending newcomers check Microsoft Agent Framework, stating AutoGen will be maintained with bug fixes and critical security patches, with Microsoft describing Agent Framework as an open-source kit bringing together and extending ideas from Semantic Kernel and AutoGen.

Framework longevity and migration paths matter. Open-source frameworks with permissive licenses provide optionality. Managed platforms with proprietary extensions create dependencies. CrewAI provides commercial licensing with enterprise support options, balancing open-source flexibility with enterprise support.

Evaluate: Can I export agent definitions? Are prompts portable? Can I swap LLM providers without rewriting code? What's the migration cost if this framework gets deprecated?

57% of companies already have AI agents in production with 22% in pilot and 21% in pre-pilot, with mature vendors treating agents as real operating infrastructure, not experiments.

LangChain offers flexibility, LlamaIndex excels at data retrieval, AutoGen offers complex multi-agent workflows, and CrewAI simplifies team orchestration. Match framework capabilities to your current stage.

For rapid experimentation, prioritize speed and simplicity. For production deployments at scale, prioritize robustness, observability, and enterprise-grade security. A 1-week POC with 10 realistic tasks, fixed tools, fixed model, a clear rubric, and measurable budgets for cost and failure modes provides concrete validation.

In early 2024, Klarna's customer-service AI assistant handled roughly two-thirds of incoming support chats in its first month, managing 2.3 million conversations, cutting average resolution time from ~11 minutes to under 2 minutes, and equating to about 700 FTE of capacity, with an estimated ~$40M profit improvement. Esusu automated 64% of email-based customer interactions and recorded a 10-point CSAT lift, with approximately 80% one-touch responses.

These outcomes required matching framework capabilities to business requirements. Customer service workloads demand high concurrency, stateless interactions, and rapid response times. Organizations deploying AI-powered customer service agents require frameworks that excel at conversation management and real-time response generation. Knowledge work applications need sophisticated memory, tool integration, and human-in-the-loop workflows.

Enterprise deployments report 68% deflection on employee requests and 43% autonomous resolution, but only when frameworks align with infrastructure realities and security requirements.

If you prefer more structure and an observability-first posture, CrewAI can be compelling, while teams benefiting from clear orchestration structure and traceability find graph/flow oriented approaches reduce operational ambiguity.

Choose AutoGen when you want flexible multi-agent coordination and you're comfortable designing constraints yourself, choose CrewAI when you want an opinionated crews plus flows structure for collaboration and a stronger rails story.

Start with a structured evaluation:

Do not confuse framework choice with production readiness; your engineering practices matter more.

The framework selection dilemma stems from a false premise: that you must choose one framework and commit entirely. Shakudo provides a fundamentally different approach.

Rather than forcing teams to standardize on a single framework before understanding their requirements, Shakudo's AI operating system supports LangChain, AutoGen, CrewAI, and LlamaIndex out-of-the-box. Teams can experiment with multiple frameworks simultaneously, evaluate them against real use cases, and make informed decisions based on production data rather than vendor claims.

Shakudo addresses the critical pain points that cause 40% of AI agent projects to fail:

Data sovereignty by default: Deploy any framework within your private cloud infrastructure, ensuring compliance with GDPR, HIPAA, and SOC 2 requirements without framework-specific security hardening.

Pre-integrated infrastructure: Authentication, orchestration, monitoring, and governance layers work across all frameworks, eliminating the 80% of time teams waste building connectors and plumbing.

Framework portability: Agent definitions, tool integrations, and deployment configurations remain portable. Migrate between frameworks without rewriting your entire codebase.

Production-grade observability: Unified monitoring and debugging across frameworks, whether you're running LangGraph workflows or AutoGen conversations.

This allows engineering teams to focus on the question that actually matters: which framework best solves this specific business problem? Rather than: which framework can our infrastructure team support?

Framework selection represents a critical decision point, but it shouldn't paralyze progress. All five vendors expect AI agents to manage a significantly larger share of workflows within the next six months, and by 2028, at least 15% of day-to-day work decisions will be made autonomously through agentic AI, up from 0% in 2024.

The window for competitive advantage is narrowing. Organizations that establish robust AI agent capabilities in 2025 will define their industries in 2027. Those still debating framework selection in 2027 will be explaining to boards why competitors are operating at lower costs with higher customer satisfaction.

Start with clarity on your requirements. Validate with time-boxed experiments. Choose frameworks that align with your team's capabilities and your organization's constraints. And critically, select infrastructure that provides flexibility rather than lock-in.

The best framework is the one that ships value to production. Everything else is just architecture.

Seventy-nine percent of organizations have adopted AI agents, with 66% reporting measurable value through increased productivity. Yet beneath these promising numbers lies a sobering reality: Gartner predicts over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

The difference between these two outcomes often comes down to a single upstream decision: which AI agent framework you choose. With enterprise-focused agentic AI expanding from $2.58 billion in 2024 to $24.50 billion by 2030 at a 46.2% CAGR, selecting the right foundation has never been more consequential.

Enterprise teams face a paradox. Twenty-three percent of respondents report their organizations are scaling an agentic AI system somewhere in their enterprises, with an additional 39% experimenting with AI agents, though most are only doing so in one or two functions. The challenge isn't lack of interest; it's the compounding cost of wrong decisions.

Security vulnerabilities plague 62% of practitioners who identify security as their top challenge, while 63% of executives cite platform sprawl as a growing concern, suggesting enterprises are juggling too many tools with limited interconnectivity. Each framework requires separate security approvals, authentication setups, and compliance documentation. Choose poorly, and your team spends months building infrastructure instead of intelligent agents.

The infrastructure tax is real. Teams report spending 80% of their time building data connectors rather than training agents, stretching projects from weeks into months. Meanwhile, returns often lag behind expectations due to fragmented workflows, insufficient integration, and misalignment between AI capabilities and business processes, with AI tools operating in silos and producing modest productivity gains that fall short of initial projections.

80% of enterprises prefer AI hosted inside their AWS cloud for compliance and data sovereignty reasons. This isn't negotiable for regulated industries. When evaluating LangChain, AutoGen, CrewAI, or LlamaIndex, the first question is deployment flexibility.

LangChain has over 600+ integrations and can connect to virtually every major LLM, tool, and database via a standardized interface, offering deployment versatility. LangGraph is an open-source library within the LangChain ecosystem designed for building stateful, multi-actor applications powered by LLMs, introducing the ability to create and manage cyclical graphs, with a platform designed to streamline deployment and scaling.

For private cloud deployments, ask: Can I run this framework entirely within my VPC? Does it require external API calls that expose data? What telemetry leaves my environment?

CrewAI adopts a role-based model inspired by real-world organizational structures, LangGraph embraces a graph-based workflow approach, and AutoGen focuses on conversational collaboration. These architectural differences have profound implications.

AutoGen treats workflows as conversations between agents, while LangGraph represents them as a graph with nodes and edges, offering a more visual and structured approach to workflow management. CrewAI runs at a higher level of abstraction, allowing developers to focus on role assignment and goal specification, with built-in functionalities for task delegation, sequencing, and state management.

For complex enterprise workflows requiring fine-grained control and state management, graph-based approaches provide explicit orchestration. For rapid prototyping with team-based collaboration patterns, role-based frameworks accelerate development. Teams implementing multi-agent orchestration patterns should evaluate how each framework's architecture aligns with their operational complexity.
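To make "explicit orchestration" concrete, here is a framework-agnostic sketch in plain Python; it does not use LangGraph's actual API. Nodes are functions over a shared state dict, edges are routing functions, and one conditional edge creates a review loop (a cycle). The node logic is hypothetical, since real nodes would call an LLM or tools, and a step budget guards against runaway cycles.

```python
from typing import Callable

State = dict  # shared workflow state passed between nodes


def draft(state: State) -> State:
    # Hypothetical node: a real system would call an LLM here.
    state["attempts"] += 1
    state["draft"] = f"draft v{state['attempts']}"
    return state


def review(state: State) -> State:
    # Hypothetical reviewer that approves from the second attempt onward.
    state["approved"] = state["attempts"] >= 2
    return state


def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until approved.
    return "END" if state["approved"] else "draft"


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Every transition is declared up front, so routing is inspectable and testable.
EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",
    "review": route_after_review,
}


def run(start: str, state: State, max_steps: int = 10) -> tuple[State, list[str]]:
    """Execute the graph from `start`; return the final state and visited path."""
    path: list[str] = []
    node = start
    while node != "END":
        if len(path) >= max_steps:
            raise RuntimeError("step budget exhausted; possible infinite loop")
        path.append(node)
        state = NODES[node](state)
        node = EDGES[node](state)
    return state, path
```

Calling run("draft", {"attempts": 0, "approved": False}) visits draft, review, draft, review and then stops, because the hypothetical reviewer approves on the second attempt. The value of the design is that control flow lives in data you can log and audit rather than in emergent agent conversation.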

All three frameworks provide extensive API support and integration with external tools, with CrewAI providing built-in integrations for common cloud services, LangGraph benefiting from the entire LangChain ecosystem, and AutoGen prioritizing tool usage within conversations.

But integration breadth differs dramatically. AutoGen offers essential pre-built extensions, but its library is younger and smaller than LangChain's 600+ integrations. CrewAI was originally built on LangChain, which gave it access to LangChain's tools (recent CrewAI releases describe the framework as independent of LangChain). LangGraph, as part of the LangChain ecosystem, has the most direct access to that comprehensive range of tools.

Evaluate your existing tech stack. If you're deeply embedded in enterprise systems (Salesforce, SAP, Snowflake), prioritize frameworks with proven connectors. Custom integration work compounds quickly.
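A quick way to quantify connector fit is a set-based gap analysis. In the sketch below, the required systems and both candidates' connector lists are placeholders (framework_a and framework_b are hypothetical names, not real products); populate them from your own system inventory and from each framework's documentation.

```python
# Hypothetical inventory of systems your agents must reach.
REQUIRED = {"salesforce", "sap", "snowflake", "sharepoint"}

# Placeholder connector lists; fill these in from each framework's docs.
CANDIDATES = {
    "framework_a": {"salesforce", "snowflake", "sharepoint"},
    "framework_b": {"snowflake"},
}


def integration_gaps(required: set[str], candidates: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each candidate to the required systems it has no pre-built connector for."""
    return {name: sorted(required - offered) for name, offered in candidates.items()}


def coverage(required: set[str], offered: set[str]) -> float:
    """Fraction of required systems covered by pre-built connectors."""
    return len(required & offered) / len(required)
```

Each gap is custom integration work you would own and maintain, so the gap list is often more informative than a raw integration count.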

Memory support varies significantly across frameworks, with CrewAI using structured, role-based memory with RAG support, LangGraph providing state-based memory with checkpointing for workflow continuity, and AutoGen focusing on conversation-based memory maintaining dialogue history.

State persistence determines whether your agents can handle long-running workflows, recover from failures, and maintain context across sessions. CrewAI persists state through task outputs, with straightforward transitions but limited debugging options. AutoGen maintains agent memory well, with flexible state transitions and debugging supported through conversation tracking.

For enterprise deployments, checkpointing, rollback capabilities, and audit trails aren't optional. They're the difference between prototype and production.
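A minimal sketch of checkpointing with rollback, assuming a simple append-only JSON Lines file as the store (this is not any framework's built-in checkpointer): each completed step is recorded, a rerun after a failure skips completed steps and resumes from the last saved state, and the same log doubles as a basic audit trail.

```python
import json
from pathlib import Path
from typing import Callable, Optional

Step = tuple[str, Callable[[dict], dict]]


class Checkpointer:
    """Append-only JSON Lines log with one record per completed step."""

    def __init__(self, path: Path):
        self.path = path

    def save(self, step: str, state: dict) -> None:
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps({"step": step, "state": state}) + "\n")

    def history(self) -> list[dict]:
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def latest(self) -> Optional[dict]:
        records = self.history()
        return records[-1] if records else None

    def rollback_to(self, step: str) -> dict:
        """Return the state saved after the most recent run of `step`."""
        for record in reversed(self.history()):
            if record["step"] == step:
                return record["state"]
        raise KeyError(step)


def run_workflow(steps: list[Step], state: dict, ckpt: Checkpointer) -> dict:
    """Run steps in order, skipping those the log marks as completed."""
    done = {r["step"] for r in ckpt.history()}
    last = ckpt.latest()
    if last is not None:
        state = last["state"]  # resume from the last good state
    for name, fn in steps:
        if name in done:
            continue
        state = fn(dict(state))  # copy so a failing step can't corrupt saved state
        ckpt.save(name, state)
    return state
```

In production you would replace the local file with a durable store and version your step definitions, but the contract stays the same: persist after every step, and resume from the last good record.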

The security landscape for AI agents extends beyond traditional application security. Seventy-three percent of AI agent implementations in European companies during 2024 had at least one GDPR compliance vulnerability, and GDPR fines can reach €20 million or 4% of global annual revenue, whichever is higher.

Agent-specific risks include data leakage between users, prompt injection, model poisoning, and inadvertent PII exposure in responses. Framework selection must therefore account for built-in security primitives: input validation, output sanitization, data isolation between sessions, and audit logging.
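The sketch below illustrates three of those primitives in plain Python: input validation, output sanitization, and per-session isolation of conversation history. The regex patterns, injection phrases, and length limit are hypothetical and deliberately simple; pattern matching is a first layer, not a complete defense against prompt injection or PII leakage.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Naive phrases common in injection attempts (a heuristic that is easy to evade).
INJECTION_HINTS = ("ignore previous instructions", "disregard the system prompt")

MAX_INPUT_CHARS = 4000  # hypothetical limit


def validate_input(text: str) -> str:
    """Reject oversized input and obvious injection phrasing before it reaches the model."""
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input too long")
    lowered = text.lower()
    if any(hint in lowered for hint in INJECTION_HINTS):
        raise ValueError("possible prompt injection")
    return text


def sanitize_output(text: str) -> str:
    """Redact email addresses and SSN-shaped strings from model output."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return US_SSN.sub("[REDACTED_SSN]", text)


class SessionStore:
    """Conversation history keyed by session ID, so users never share context."""

    def __init__(self) -> None:
        self._history: dict[str, list[str]] = {}

    def append(self, session_id: str, message: str) -> None:
        self._history.setdefault(session_id, []).append(message)

    def get(self, session_id: str) -> list[str]:
        # Return a copy so callers cannot mutate a session's stored history.
        return list(self._history.get(session_id, []))
```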

AI governance platforms help meet GDPR requirements by restricting AI agents to the data they actually need (least privilege) and blocking unauthorized sharing, while audit trails and real-time governance provide evidence of compliance and protect privacy.
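Least privilege and audit trails can be sketched together: every tool or data request is checked against a per-agent allowlist, unknown agents are denied by default, and each decision is logged. The agent names, scopes, and in-memory log below are hypothetical stand-ins for your identity provider and an append-only audit store.

```python
import json
import time

# Hypothetical per-agent allowlists: each agent gets only the scopes its task needs.
PERMISSIONS = {
    "billing_agent": {"read:invoices", "read:customers"},
    "support_agent": {"read:tickets"},
}

AUDIT_LOG: list[str] = []  # stand-in for an append-only, tamper-evident store


def authorize(agent: str, scope: str) -> bool:
    """Check a scope against the agent's allowlist (deny by default) and log the decision."""
    allowed = scope in PERMISSIONS.get(agent, set())
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "agent": agent,
        "scope": scope,
        "decision": "allow" if allowed else "deny",
    }))
    return allowed
```

Logging denials as well as grants matters: a spike in denied requests is often the first visible sign of a misconfigured or manipulated agent.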

Ask: Does the framework provide native security controls? Can I implement row-level security? How are credentials managed? What audit capabilities exist out of the box?

In one comparison, CrewAI ranked as the second-easiest framework to learn: it requires understanding tools and roles, and its documentation is well structured and beginner-friendly. AutoGen is trickier; it needs more manual setup, revolves around chat conversations, and its documentation suffers from confusing versioning.

Developer experience compounds. AutoGen AgentChat provides the most parsimonious API, allowing you to replicate complex agents in 50 lines of code. Meanwhile, LangGraph offers deep customization through graph structures best suited for cyclical workflows, but the learning curve is steep.

Evaluate your team's composition. If you have experienced ML engineers comfortable with low-level control, flexibility matters more than simplicity. If you need business analysts building workflows, high-level abstractions accelerate value delivery.

CrewAI scales through horizontal agent replication and task parallelization within role hierarchies, LangGraph scales through distributed graph execution and parallel node processing, and AutoGen scales through conversation sharding and distributed chat management.
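Task parallelization and parallel node processing share a common pattern: fan out independent subtasks and cap concurrency so you stay within model or API rate limits. This is a framework-agnostic sketch using Python's asyncio, not how any of these frameworks implements scaling; run_subtask is a hypothetical stand-in that sleeps instead of calling an LLM.

```python
import asyncio


async def run_subtask(name: str, delay: float) -> str:
    # Stand-in for an agent or tool call; real code would await an LLM or API client.
    await asyncio.sleep(delay)
    return f"{name}: done"


async def fan_out(tasks: dict[str, float], max_concurrency: int = 2) -> list[str]:
    """Run independent subtasks concurrently, capped by a semaphore."""
    sem = asyncio.Semaphore(max_concurrency)

    async def guarded(name: str, delay: float) -> str:
        async with sem:
            return await run_subtask(name, delay)

    # gather returns results in input order, regardless of completion order.
    return await asyncio.gather(*(guarded(n, d) for n, d in tasks.items()))


if __name__ == "__main__":
    print(asyncio.run(fan_out({"research": 0.2, "pricing": 0.1, "legal": 0.1})))
```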

Production performance varies significantly. In one set of benchmark comparisons, the PydanticAI implementation appeared fastest, followed by the OpenAI Agents SDK, LlamaIndex, AutoGen, LangGraph, and Google ADK.

Enterprise implementations deliver latency ranging from 140 ms to 1.3 s under heavy multi-session load. For customer-facing applications, sub-second response times aren't aspirational; they're table stakes.
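When measuring against a sub-second target, averages hide the slow tail that users actually notice, so it helps to report percentiles. This sketch uses the standard-library statistics.quantiles function; the 1,000 ms objective and the choice to gate on p95 are assumptions to adjust for your application.

```python
import statistics


def latency_report(samples_ms: list[float], slo_ms: float = 1000.0) -> dict:
    """Summarize latency samples and check p95 against a service-level objective."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # n=100 yields 99 cut points: index 49 is p50, 94 is p95, 98 is p99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    p50, p95, p99 = cuts[49], cuts[94], cuts[98]
    return {"p50": p50, "p95": p95, "p99": p99, "meets_slo": p95 <= slo_ms}
```

Collect samples under realistic multi-session load; a framework that looks fast with one user can behave very differently at the concurrency your customers generate.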

CrewAI highlights its Flows structure for tracing and debugging and recommends enabling tracing for observability. LangGraph emphasizes orchestration capabilities such as durable execution and human-in-the-loop control, and LangChain's agents build on top of it.

Without comprehensive observability, debugging multi-agent systems becomes archaeological work. You need visibility into: agent decision paths, tool invocations, state transitions, token consumption, latency breakdown by component, and error propagation.
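A minimal tracing sketch: a decorator records each tool call and agent step with its duration and any error, in completion order. TRACE is a hypothetical in-memory stand-in for an exporter; a production setup would use a tracing standard such as OpenTelemetry to link parent and child spans and attach token counts, which this sketch omits.

```python
import functools
import time

TRACE: list[dict] = []  # stand-in for an exporter to your observability backend


def traced(kind: str):
    """Decorator that records name, kind, duration (ms), and error for each call."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            start = time.perf_counter()
            span = {"name": fn.__name__, "kind": kind, "error": None}
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                span["error"] = repr(exc)
                raise
            finally:
                span["ms"] = (time.perf_counter() - start) * 1000
                TRACE.append(span)
        return inner
    return wrap


@traced("tool")
def lookup_order(order_id: str) -> dict:
    # Hypothetical tool; a real one would query an order system.
    if not order_id:
        raise ValueError("empty order id")
    return {"order_id": order_id, "status": "shipped"}


@traced("agent_step")
def answer(order_id: str) -> str:
    order = lookup_order(order_id)
    return f"Order {order['order_id']} is {order['status']}."
```

Because the error is recorded on every span it passes through, a failure deep in a tool call shows up on the enclosing agent step as well, which is exactly the error-propagation view the checklist above asks for.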

Frameworks with built-in tracing and integration with observability platforms (Datadog, New Relic, or custom tooling) can cut mean time to resolution from hours to minutes.

AutoGen's repository now includes a note recommending that newcomers check out Microsoft Agent Framework; AutoGen itself will continue to receive bug fixes and critical security patches. Microsoft describes Agent Framework as an open-source kit that brings together and extends ideas from Semantic Kernel and AutoGen.

Framework longevity and migration paths matter. Open-source frameworks with permissive licenses provide optionality, while managed platforms with proprietary extensions create dependencies. CrewAI pairs its open-source framework with commercial licensing and enterprise support options.

Evaluate: Can I export agent definitions? Are prompts portable? Can I swap LLM providers without rewriting code? What's the migration cost if this framework gets deprecated?
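Two of those questions, exporting agent definitions and swapping LLM providers, come down to keeping your own agent logic behind a thin, provider-neutral boundary. The sketch below assumes a hypothetical ChatModel protocol with a single complete method and a JSON-serializable AgentDefinition; EchoModel is an offline placeholder, and real adapters would wrap each vendor's SDK.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical provider-neutral interface; one adapter per vendor implements it."""

    def complete(self, system: str, user: str) -> str: ...


@dataclass
class AgentDefinition:
    """Framework-neutral agent spec that can be exported and versioned as JSON."""
    name: str
    system_prompt: str
    tools: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, raw: str) -> "AgentDefinition":
        return cls(**json.loads(raw))


class EchoModel:
    """Offline placeholder adapter for tests; swap in a real provider adapter."""

    def complete(self, system: str, user: str) -> str:
        return f"[{system}] {user}"


def run_agent(defn: AgentDefinition, model: ChatModel, user: str) -> str:
    # Agent logic depends only on the ChatModel protocol, never on a vendor SDK.
    return model.complete(defn.system_prompt, user)
```

Because run_agent depends only on the protocol, changing providers means writing one new adapter class, and the exported JSON can be versioned alongside your code.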

Fifty-seven percent of companies already have AI agents in production, with another 22% in pilot and 21% in pre-pilot, and mature vendors treat agents as real operating infrastructure, not experiments.

LangChain offers flexibility, LlamaIndex excels at data retrieval, AutoGen supports complex multi-agent workflows, and CrewAI simplifies team orchestration. Match framework capabilities to your current stage.

For rapid experimentation, prioritize speed and simplicity. For production deployments at scale, prioritize robustness, observability, and enterprise-grade security. A one-week proof of concept (POC) with 10 realistic tasks, a fixed set of tools, a fixed model, a clear rubric, and measurable budgets for cost and failure modes provides concrete validation.
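A POC harness along those lines can be small. In this sketch, run_task is a hypothetical function you implement once per framework under test (same tools, same model), and the budget thresholds are placeholders; failed tasks score zero so crashes cannot inflate the average.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TaskResult:
    score: float     # rubric score in [0, 1]
    cost_usd: float  # model and tool spend for this task
    failed: bool     # crashed, timed out, or hit an unrecoverable error


@dataclass
class Budget:
    max_total_cost_usd: float
    max_failure_rate: float
    min_mean_score: float


def evaluate(run_task: Callable[[str], TaskResult], tasks: list[str], budget: Budget) -> dict:
    """Run every task once, then check cost, failure rate, and quality against the budget."""
    results = [run_task(t) for t in tasks]
    total_cost = sum(r.cost_usd for r in results)
    failure_rate = sum(r.failed for r in results) / len(results)
    # Failed tasks count as zero so crashes cannot inflate the mean score.
    mean_score = sum(0.0 if r.failed else r.score for r in results) / len(results)
    return {
        "total_cost_usd": total_cost,
        "failure_rate": failure_rate,
        "mean_score": mean_score,
        "passes": (total_cost <= budget.max_total_cost_usd
                   and failure_rate <= budget.max_failure_rate
                   and mean_score >= budget.min_mean_score),
    }
```

Running each candidate framework through the same task list and the same Budget turns the comparison into numbers you can defend, rather than impressions from a demo.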

In early 2024, Klarna's customer-service AI assistant handled roughly two-thirds of incoming support chats in its first month, a total of 2.3 million conversations. It cut average resolution time from about 11 minutes to under 2 minutes, did work equivalent to about 700 full-time agents, and was estimated to drive a profit improvement of roughly $40M. Esusu automated 64% of email-based customer interactions, recorded a 10-point CSAT lift, and achieved approximately 80% one-touch responses.

These outcomes required matching framework capabilities to business requirements. Customer service workloads demand high concurrency, stateless interactions, and rapid response times, so they need frameworks that excel at conversation management and real-time response generation. Knowledge work applications need sophisticated memory, tool integration, and human-in-the-loop workflows.

Enterprise deployments report 68% deflection on employee requests and 43% autonomous resolution, but only when frameworks align with infrastructure realities and security requirements.

If you prefer more structure and an observability-first posture, CrewAI can be compelling. More broadly, teams that benefit from clear orchestration structure and traceability find that graph- or flow-oriented approaches reduce operational ambiguity.

Choose AutoGen when you want flexible multi-agent coordination and are comfortable designing constraints yourself. Choose CrewAI when you want an opinionated structure of crews plus flows for collaboration and stronger built-in guardrails.

Start with a structured evaluation.
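One way to structure the evaluation is a weighted decision matrix over the criteria discussed above. The weights below are hypothetical and should reflect your own priorities (a regulated enterprise might weight security and deployment far higher); score each candidate from 1 to 5 per criterion, ideally using POC results rather than documentation claims.

```python
# Hypothetical weights over the criteria covered in this article; they sum to 1.0.
WEIGHTS = {
    "deployment": 0.15,
    "integrations": 0.15,
    "state_memory": 0.10,
    "security": 0.20,
    "developer_experience": 0.10,
    "performance": 0.10,
    "observability": 0.10,
    "portability": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 criterion scores into one weighted score on the same 1-5 scale."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    # Normalize by total weight so rounding in the weights cannot skew the scale.
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight
```

For example, a candidate scoring 5 on deployment and 3 everywhere else lands at about 3.3. Scoring two or three frameworks this way makes the trade-offs explicit and easy to revisit as requirements change.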

Do not confuse framework choice with production readiness; your engineering practices matter more.

The framework selection dilemma stems from a false premise: that you must choose one framework and commit entirely. Shakudo provides a fundamentally different approach.

Rather than forcing teams to standardize on a single framework before understanding their requirements, Shakudo's AI operating system supports LangChain, AutoGen, CrewAI, and LlamaIndex out-of-the-box. Teams can experiment with multiple frameworks simultaneously, evaluate them against real use cases, and make informed decisions based on production data rather than vendor claims.

Shakudo addresses the critical pain points behind Gartner's prediction that over 40% of agentic AI projects will be canceled by the end of 2027:

Data sovereignty by default: Deploy any framework within your private cloud infrastructure, ensuring compliance with GDPR, HIPAA, and SOC 2 requirements without framework-specific security hardening.

Pre-integrated infrastructure: Authentication, orchestration, monitoring, and governance layers work across all frameworks, eliminating the 80% of time teams waste building connectors and plumbing.

Framework portability: Agent definitions, tool integrations, and deployment configurations remain portable. Migrate between frameworks without rewriting your entire codebase.

Production-grade observability: Unified monitoring and debugging across frameworks, whether you're running LangGraph workflows or AutoGen conversations.

This lets engineering teams focus on the question that actually matters, "Which framework best solves this specific business problem?", rather than "Which framework can our infrastructure team support?"

Framework selection is a critical decision point, but it shouldn't paralyze progress. All five vendors expect AI agents to manage a significantly larger share of workflows within the next six months, and Gartner predicts that by 2028 at least 15% of day-to-day work decisions will be made autonomously through agentic AI, up from 0% in 2024.

The window for competitive advantage is narrowing. Organizations that establish robust AI agent capabilities in 2025 will define their industries in 2027. Those still debating framework selection in 2027 will be explaining to boards why competitors are operating at lower costs with higher customer satisfaction.

Start with clarity on your requirements. Validate with time-boxed experiments. Choose frameworks that align with your team's capabilities and your organization's constraints. And critically, select infrastructure that provides flexibility rather than lock-in.

The best framework is the one that ships value to production. Everything else is just architecture.