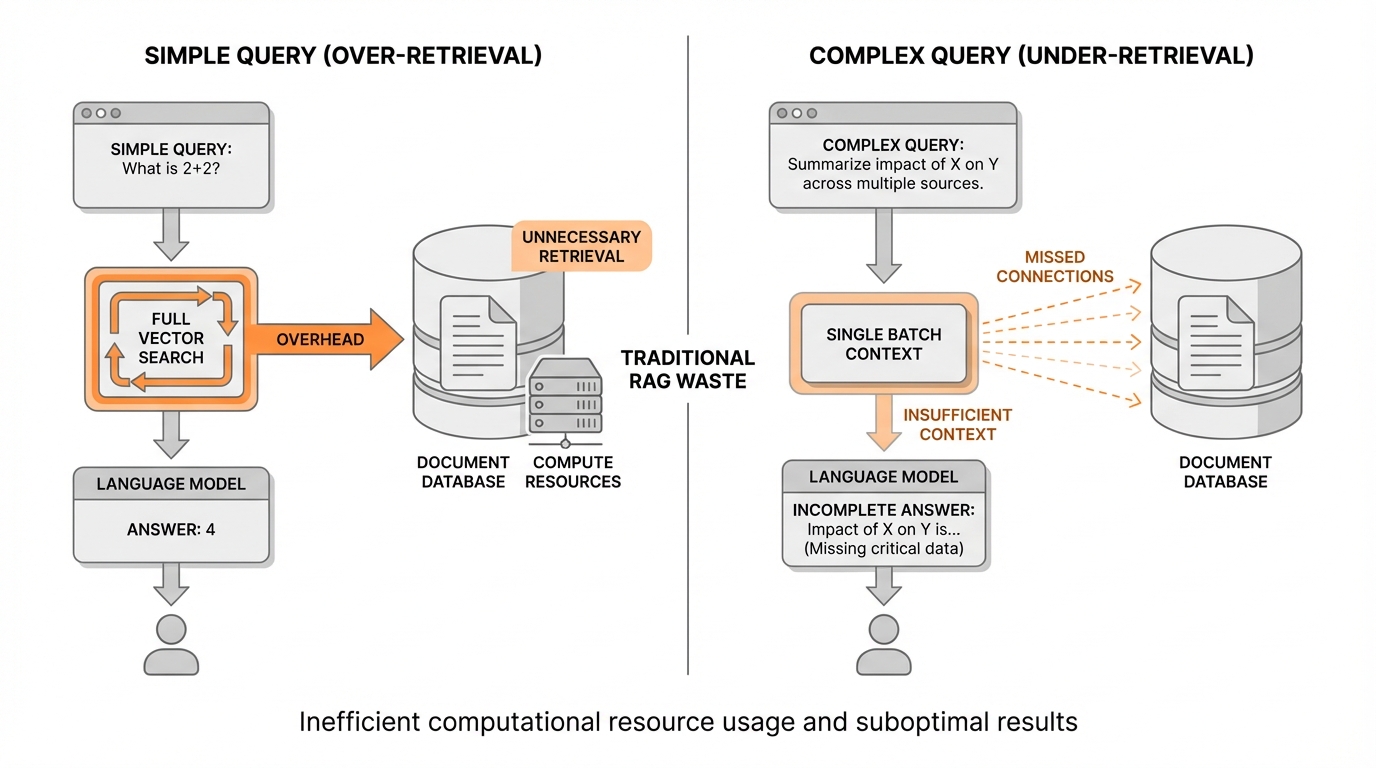

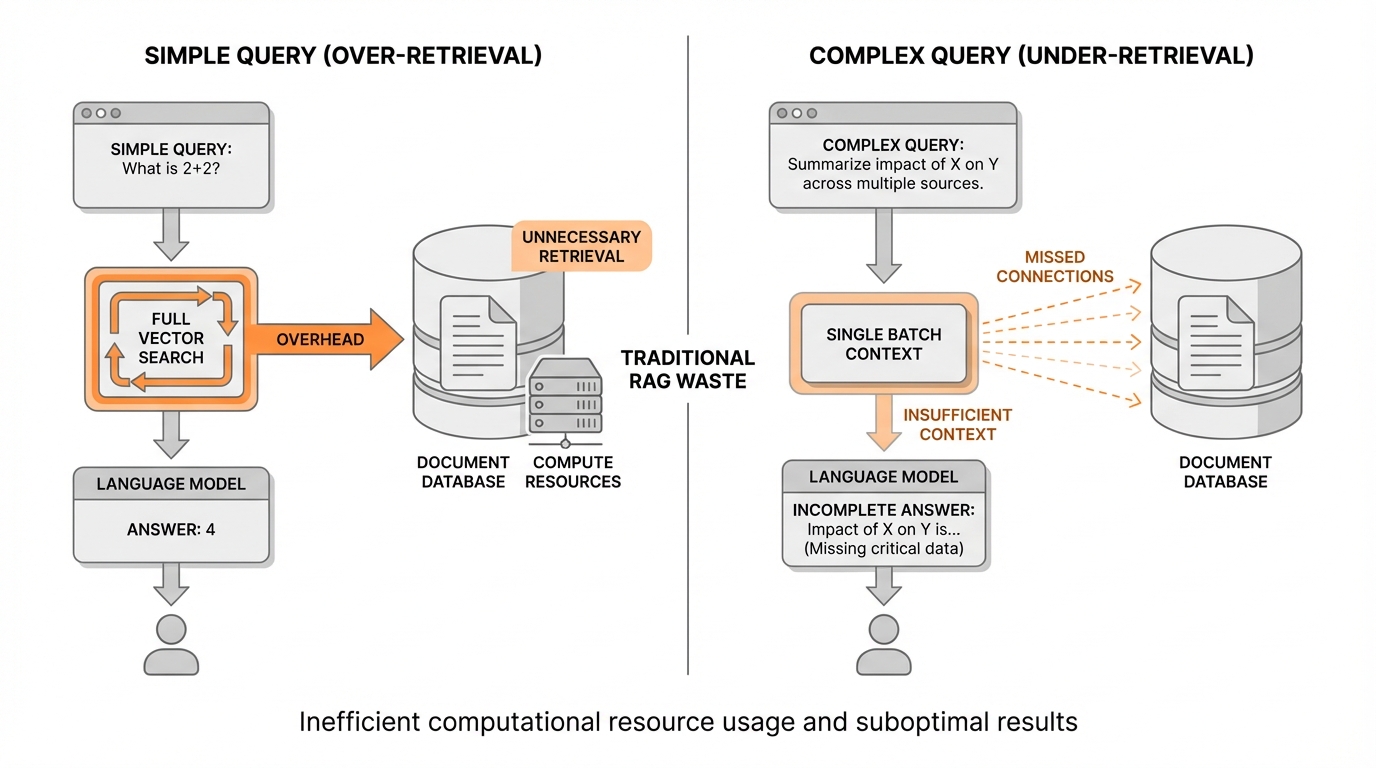

Enterprise AI teams implementing Retrieval-Augmented Generation (RAG) face a frustrating paradox. Traditional RAG architectures retrieve too much context, driving up vector database query volume and compute costs without delivering proportionally better answers. Meanwhile, complex queries that require synthesizing information across multiple internal knowledge sources often fail entirely, leaving critical business questions unanswered.

This isn't a tuning problem. It's an architectural one.

Most production RAG implementations follow a deceptively simple pattern: embed the user query, search the vector database, retrieve the top-k results, and pass everything to the language model. This single-step approach works adequately for straightforward factual lookups. But it breaks down spectacularly when deployed at scale across enterprise knowledge bases.
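The single-step pattern described above fits in a few lines. The sketch below makes some assumptions. A toy bag-of-words `embed` function and an in-memory list stand in for a real embedding model and vector database. The `generate` callback stands in for the language model. All names here are hypothetical.

```python
import math
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase bag-of-words counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def single_step_rag(
    query: str,
    corpus: list[str],
    generate: Callable[[str, list[str]], str],
    k: int = 3,
) -> str:
    q_vec = embed(query)  # 1. embed the query
    # 2-3. score every document and keep the top-k, whether or not retrieval was needed
    top_k = sorted(corpus, key=lambda doc: cosine(q_vec, embed(doc)), reverse=True)[:k]
    return generate(query, top_k)  # 4. pass everything to the model in one shot


docs = ["refund policy allows 30 days", "shipping takes 5 days", "office opens at 9"]
# A trivial "generator" that just echoes the best-ranked chunk.
answer = single_step_rag("what is the refund policy", docs, lambda q, ctx: ctx[0], k=2)
```

Steps 2 and 3 run for every query, including ones the model could answer unaided. That unconditional search is the source of the over-retrieval problem.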

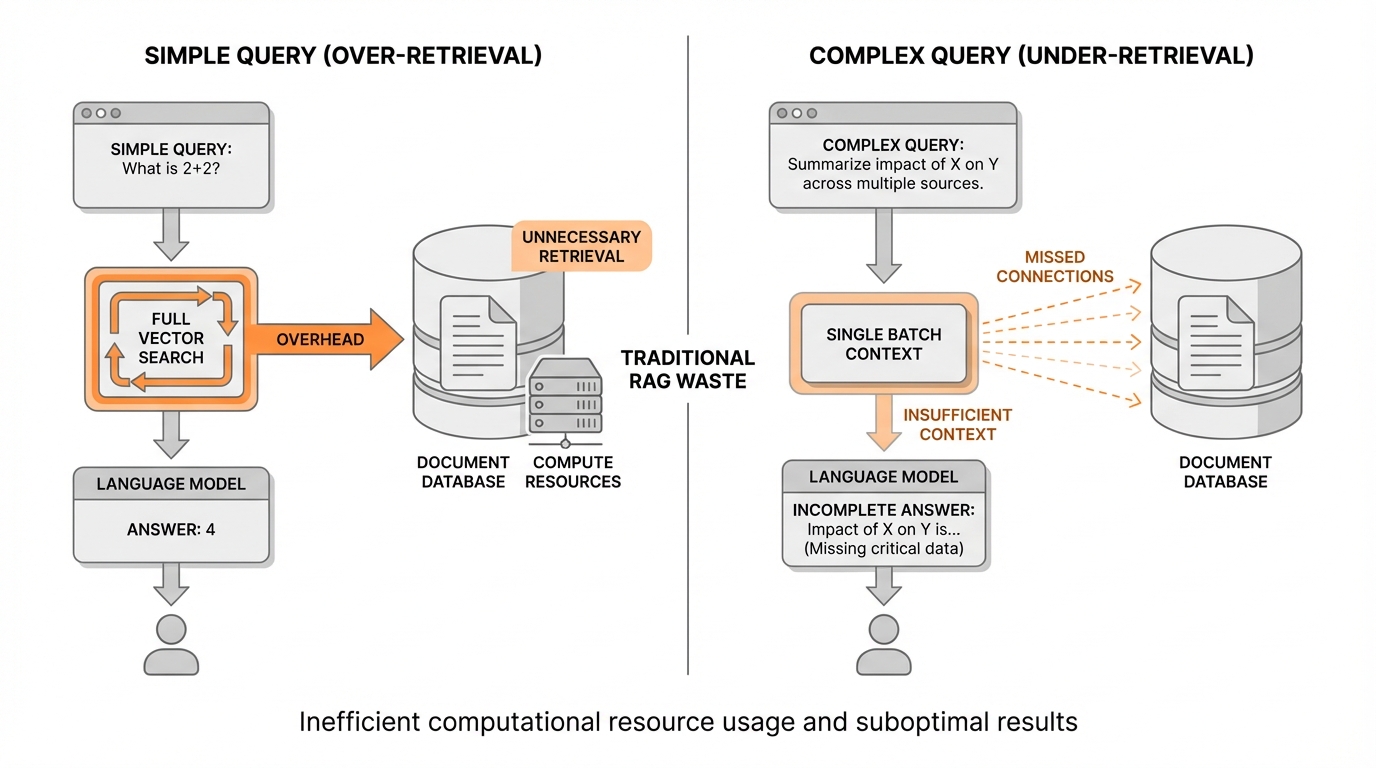

The computational waste is staggering. Every query triggers a vector search regardless of whether retrieval is necessary. Simple queries that the language model could answer directly still incur the full retrieval overhead. Complex queries that need information from multiple sources receive a single batch of context that may miss critical connections. The result is a system that simultaneously over-retrieves for simple cases and under-retrieves for complex ones.

Regulated industries face an additional burden. Financial services firms, healthcare organizations, and government contractors operating under strict data sovereignty requirements must deploy these systems entirely within their infrastructure. When every unnecessary retrieval multiplies infrastructure costs across dedicated VPC deployments, the economics become untenable. Teams find themselves choosing between answer quality and operational efficiency, a choice that shouldn't exist.

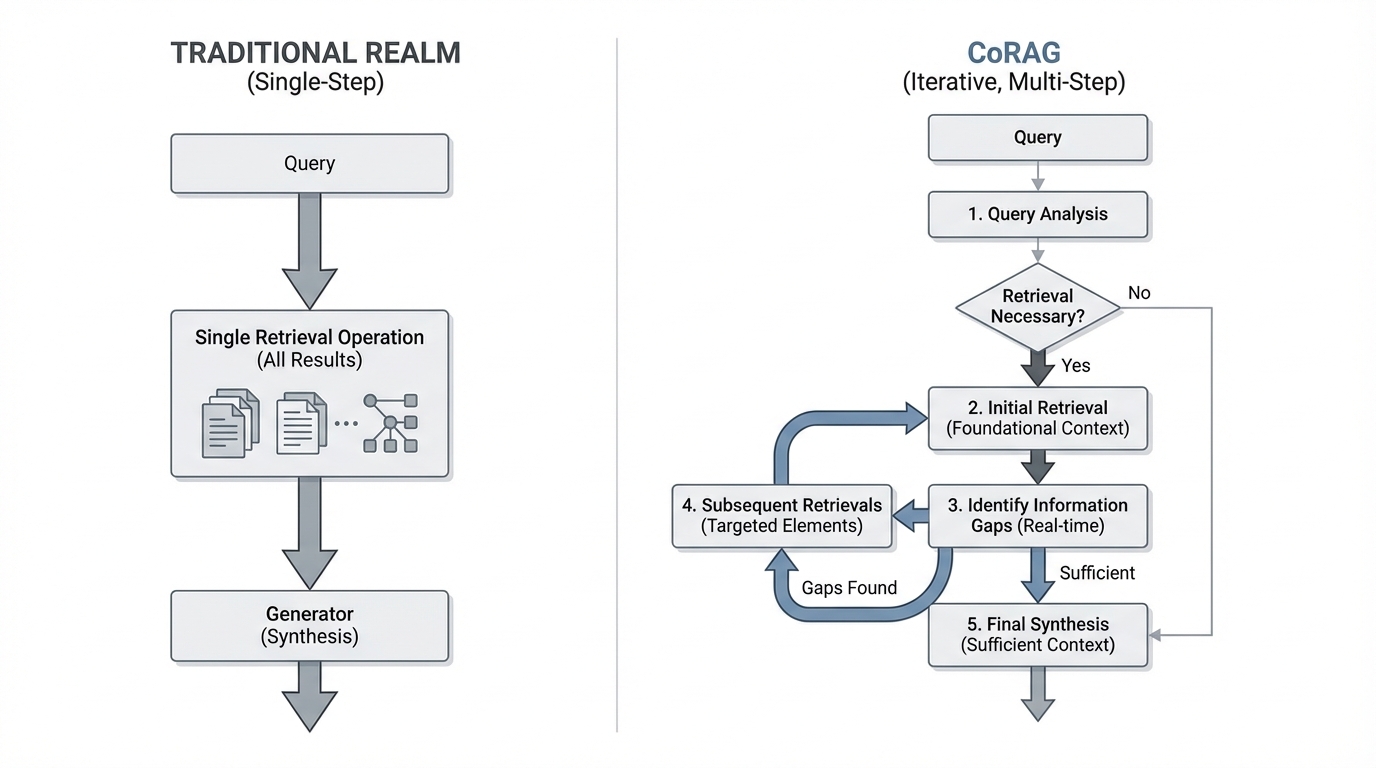

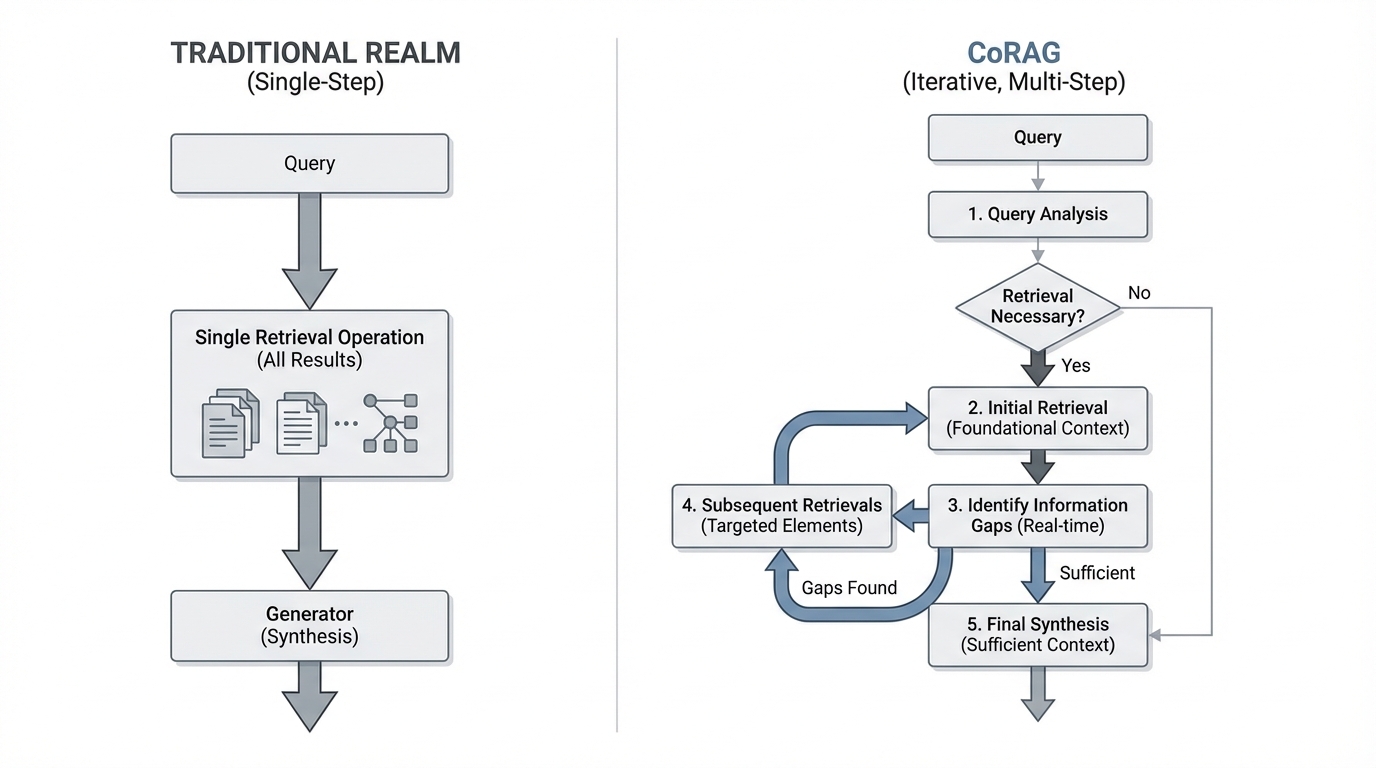

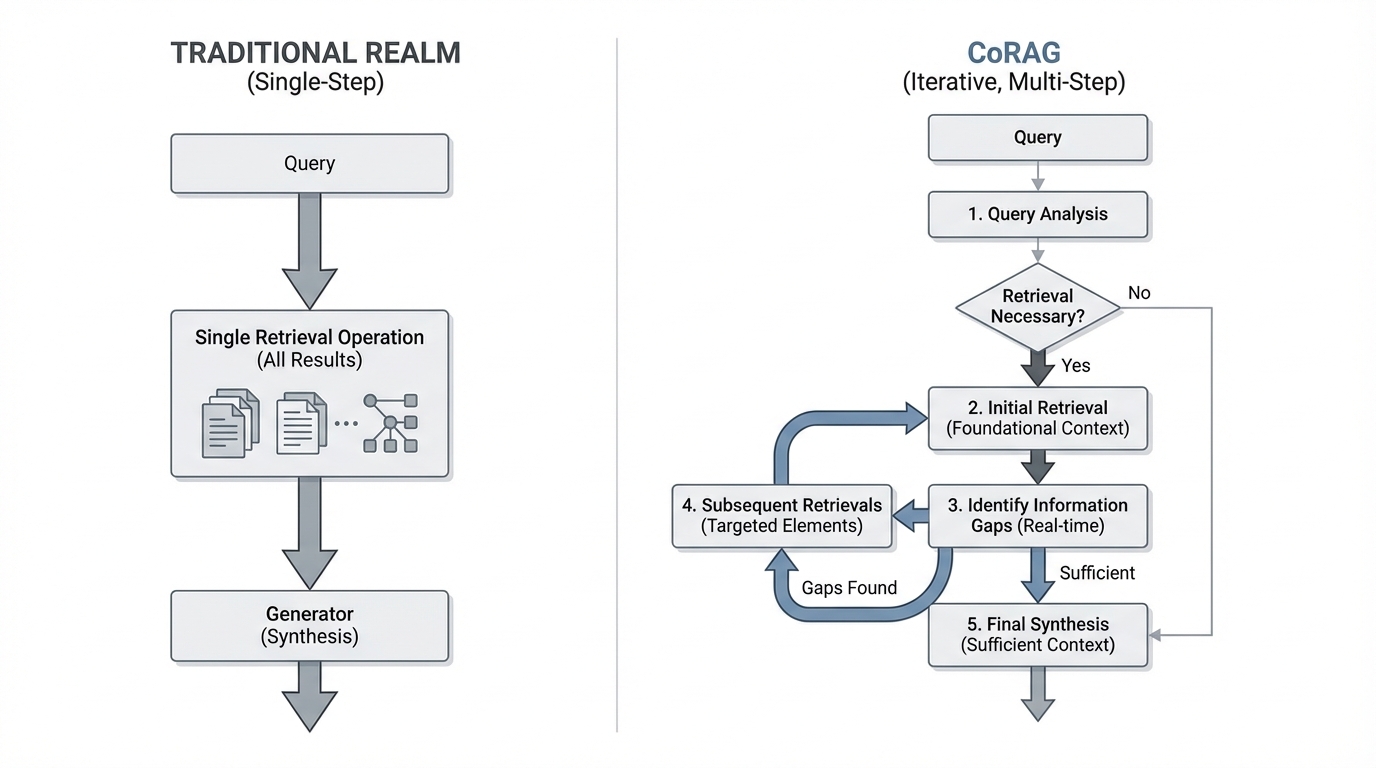

The evolution of RAG design patterns represents a fundamental rethinking of how retrieval and generation should interact. Rather than treating retrieval as a single preprocessing step, advanced architectures like Chain-of-Retrieval Augmented Generation (CoRAG) decompose complex queries into multi-step retrieval chains.

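The technical distinction matters. In traditional single-step architectures such as REALM (Retrieval-Augmented Language Model pre-training), the system performs one retrieval operation and feeds all results to the model. CoRAG instead implements a deliberative process. It generates a sub-query and retrieves evidence for it. It then forms an intermediate answer and uses what it has learned so far to decide whether to retrieve again or produce the final answer.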

This multi-step approach mirrors how domain experts actually research complex questions. A financial analyst investigating merger implications doesn't pull every relevant document simultaneously. They start with the deal structure, then retrieve specific regulatory filings, then pull historical precedents, building understanding iteratively.
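A minimal sketch of the chain-of-retrieval control flow follows. It assumes the sub-query planner, retriever, and answer step are supplied as callbacks; in practice these would be LLM calls and a vector search. This illustrates the loop only and is not the CoRAG authors' implementation. The names `chain_of_retrieval`, `ChainState`, and the `max_steps` budget are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ChainState:
    question: str
    sub_queries: list[str] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)


def chain_of_retrieval(
    question: str,
    propose_next_query: Callable[[ChainState], Optional[str]],
    retrieve: Callable[[str], list[str]],
    answer: Callable[[ChainState], str],
    max_steps: int = 4,
) -> tuple[str, ChainState]:
    state = ChainState(question)
    for _ in range(max_steps):  # hard budget so a chain can never run forever
        # In a real system this is an LLM call that reads the evidence so far
        # and returns a focused sub-query, or None once it has enough.
        sub_query = propose_next_query(state)
        if sub_query is None:
            break
        state.sub_queries.append(sub_query)
        state.evidence.extend(retrieve(sub_query))
    return answer(state), state


# Scripted stand-ins mirroring the analyst example: deal terms, then filings, then precedents.
plan = ["deal structure", "regulatory filings", "historical precedents"]
index = {
    "deal structure": ["term sheet summary"],
    "regulatory filings": ["antitrust filing"],
    "historical precedents": ["comparable 2019 merger"],
}
final, trace = chain_of_retrieval(
    "What are the implications of the merger?",
    propose_next_query=lambda s: plan[len(s.sub_queries)] if len(s.sub_queries) < len(plan) else None,
    retrieve=lambda q: index.get(q, []),
    answer=lambda s: "; ".join(s.evidence),
)
```

The returned `ChainState` keeps every sub-query alongside its evidence. That record is what later makes the chain inspectable.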

Adaptive retrieval strategies add another layer of sophistication. Research by Wang et al. (2025) demonstrates that intelligent gating mechanisms can determine when retrieval adds value versus when the language model already possesses sufficient knowledge. These systems learn to route queries appropriately, avoiding unnecessary vector searches while ensuring complex queries receive the multi-hop reasoning they require.
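To show where a retrieval gate sits, the sketch below routes each query to no retrieval, a single lookup, or a multi-step chain. The routing rule is a hand-written keyword heuristic and the cue lists are hypothetical. This is not the learned gating mechanism from the research cited above. A production system would replace `route_query` with a trained classifier or a model confidence signal.

```python
import re
from enum import Enum


class Route(Enum):
    NO_RETRIEVAL = "no_retrieval"  # answer from the model's own knowledge
    SINGLE_STEP = "single_step"    # one vector search is enough
    MULTI_STEP = "multi_step"      # hand off to a chain-of-retrieval loop


# Hypothetical cue lists; a learned gate would replace these rules entirely.
MULTI_HOP_CUES = ("compare", "versus", "impact of", "why did", "across")
INTERNAL_CUES = ("our", "policy", "internal", "contract", "customer")


def route_query(query: str) -> Route:
    # Tokenize and pad with spaces so cues match whole words ("our" must not match "hours").
    text = " " + " ".join(re.findall(r"[a-z0-9]+", query.lower())) + " "
    if any(f" {cue} " in text for cue in MULTI_HOP_CUES):
        return Route.MULTI_STEP
    if any(f" {cue} " in text for cue in INTERNAL_CUES):
        return Route.SINGLE_STEP
    return Route.NO_RETRIEVAL
```

Misrouting a complex query to `NO_RETRIEVAL` costs answer quality, while misrouting a simple one to retrieval only costs a search. It is therefore usually safer to tune a gate to err toward retrieving.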

The performance differences are substantial. CoRAG systems show clear gains over single-step approaches on complex queries, especially multi-hop questions whose answers depend on combining several documents. The reason is that they gather context progressively rather than hoping a single retrieval captures everything needed.

The business case for advanced RAG patterns extends well beyond accuracy metrics. Infrastructure cost reduction represents the most immediate benefit. Adaptive retrieval strategies reduce unnecessary retrievals while enhancing output quality, cutting vector database query volumes by 30-40% in production deployments. For enterprises running dedicated Pinecone, Weaviate, or Qdrant clusters, this translates directly to lower compute costs.

Response latency improvements matter equally. Counterintuitively, multi-step retrieval can reduce end-to-end time-to-answer for complex queries, even though each individual request does more work. Single-step systems often require multiple user interactions to refine inadequate initial responses. CoRAG architectures handle this refinement internally and are more likely to deliver a complete answer on the first interaction. The user experience shifts from iterative dialogue toward direct resolution.

Auditability becomes tractable with chain-of-retrieval patterns. Regulated industries need to trace how AI systems reached specific conclusions. When retrieval happens in discrete, logged steps, compliance teams can reconstruct the reasoning chain. This visibility is nearly impossible with opaque single-step architectures where hundreds of document chunks feed the generator simultaneously.
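Logging each hop as a structured record is what makes the chain reconstructable. The sketch below assumes a JSON-lines audit log. The field names (`step`, `sub_query`, `doc_ids`, `timestamp`) and the `AuditTrail` class are hypothetical, not a standard schema.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class RetrievalStep:
    step: int
    sub_query: str
    doc_ids: list[str]
    timestamp: float = field(default_factory=time.time)


@dataclass
class AuditTrail:
    request_id: str
    steps: list[RetrievalStep] = field(default_factory=list)

    def record(self, sub_query: str, doc_ids: list[str]) -> None:
        # Number steps in execution order so reviewers can replay the chain.
        self.steps.append(RetrievalStep(len(self.steps) + 1, sub_query, list(doc_ids)))

    def to_jsonl(self) -> str:
        # One JSON object per step, suitable for an append-only log store.
        return "\n".join(
            json.dumps({"request_id": self.request_id, **asdict(s)}) for s in self.steps
        )


trail = AuditTrail("req-001")
trail.record("transaction reporting framework", ["reg-12"])
trail.record("internal reporting policy", ["pol-7", "pol-9"])
print(trail.to_jsonl())
```

Because each line names the sub-query and the exact documents it returned, a compliance reviewer can see which evidence entered the chain at which step.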

Data sovereignty requirements find natural alignment with these architectural patterns. Because CoRAG systems can implement retrieval logic entirely within the organization's infrastructure, enterprises maintain complete control over what data gets accessed and how. No external API calls. No third-party processing. Just internal orchestration of internal resources.

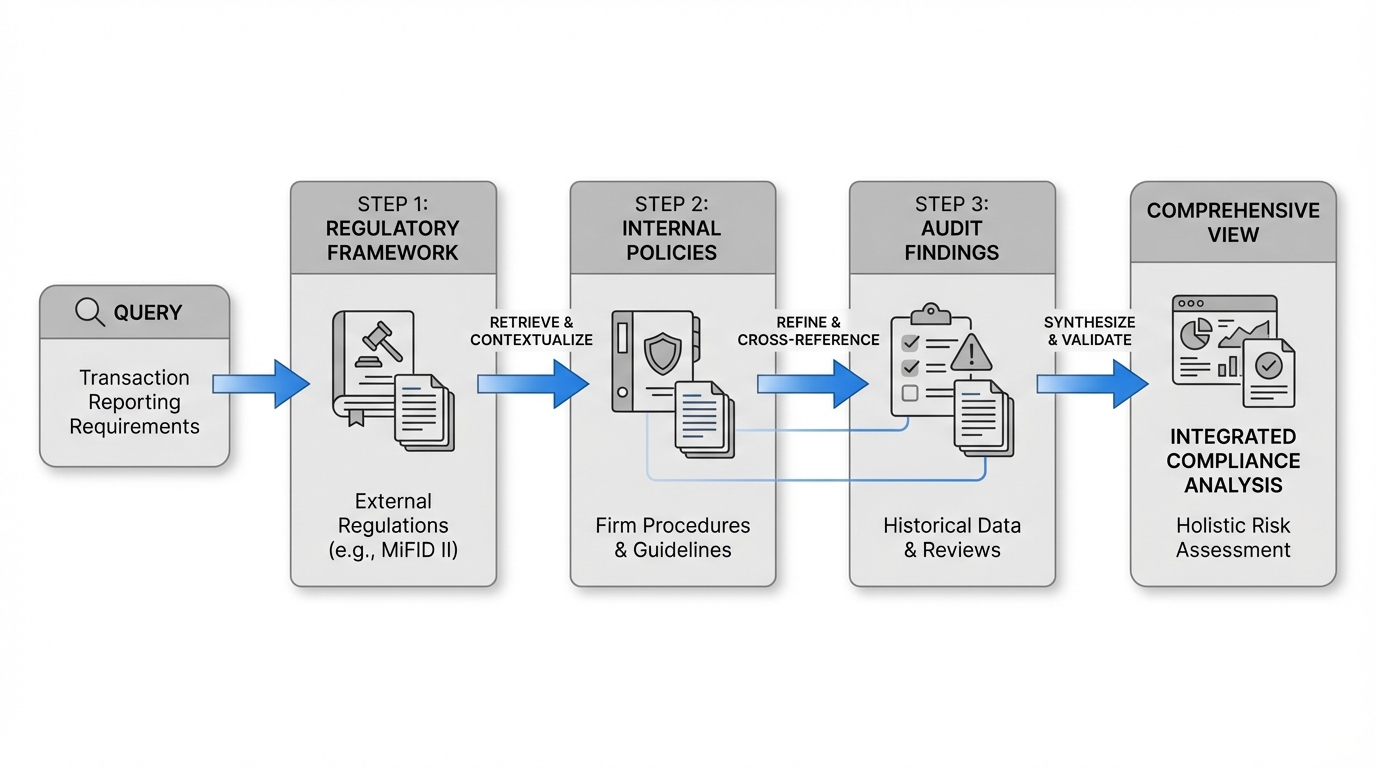

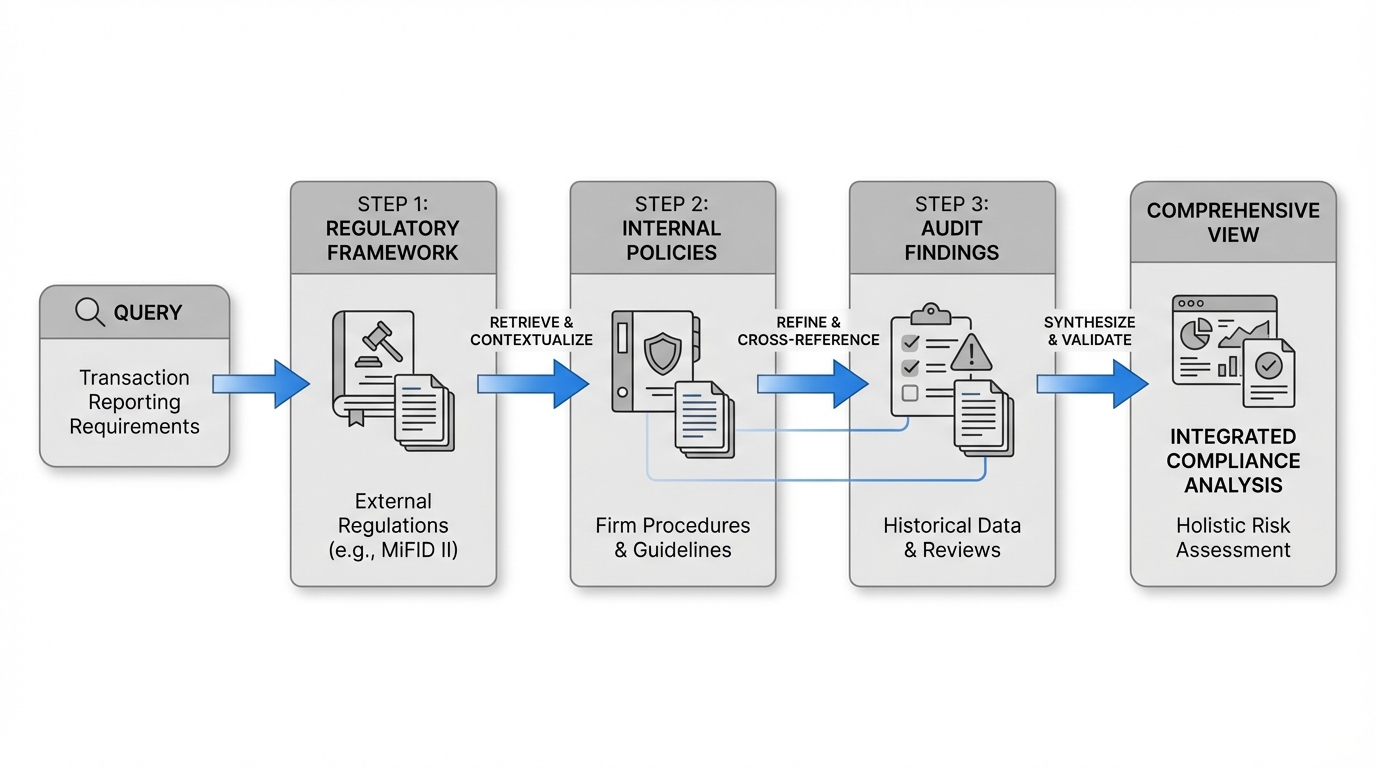

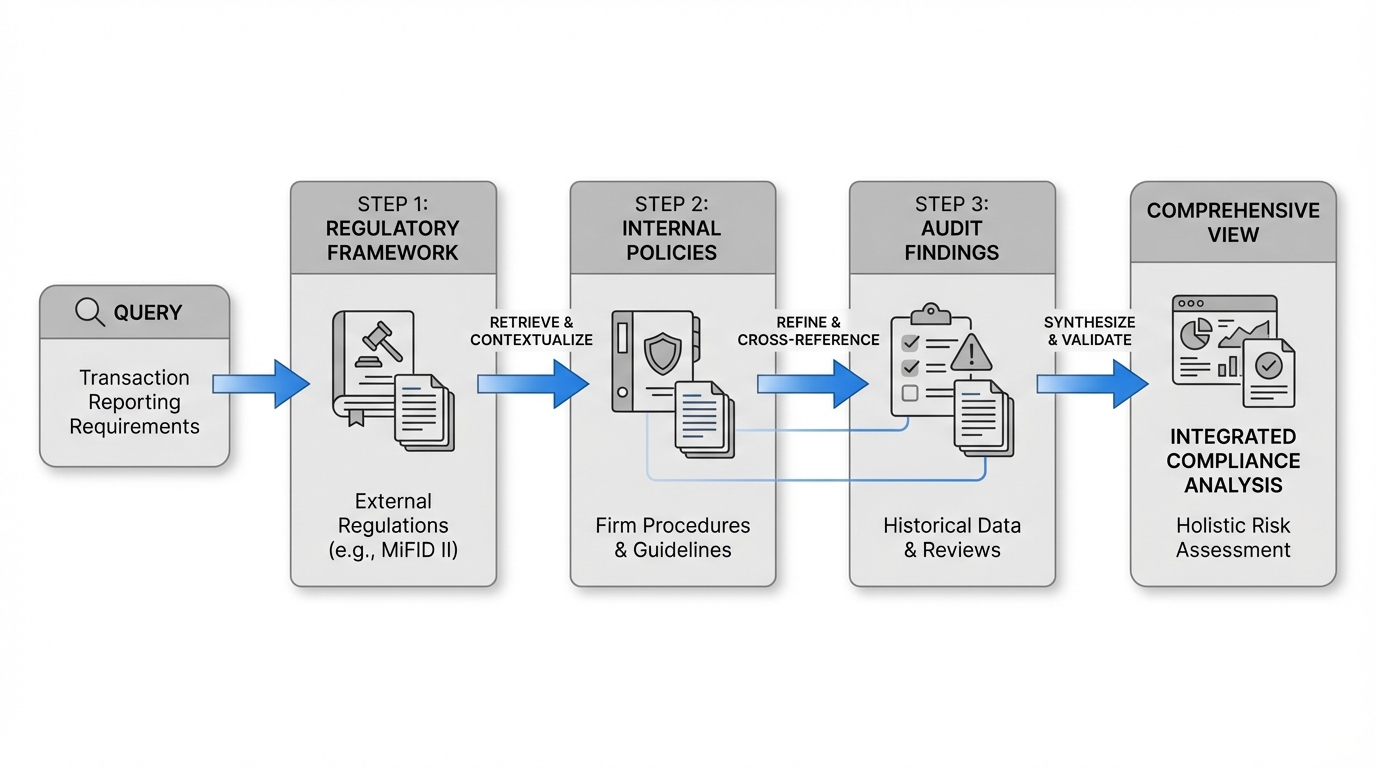

Financial services firms use multi-step RAG for regulatory compliance analysis. A query about transaction reporting requirements might first retrieve the relevant regulatory framework, then pull internal policy documentation, then access previous audit findings. Each step informs the next, building a comprehensive view that single-step retrieval would miss.

Healthcare organizations implement CoRAG for clinical decision support. A complex diagnostic question triggers retrieval of patient history, then relevant research literature, then treatment protocols, then insurance coverage policies. The chain structure mirrors clinical reasoning processes, making outputs more trustworthy to medical professionals.

Manufacturing companies deploy adaptive RAG for supply chain intelligence. Simple queries about part availability hit internal databases directly. Complex questions about alternative sourcing under constraint scenarios trigger multi-step retrieval across supplier catalogs, logistics networks, and historical procurement data.

Legal teams use these patterns for case research. Initial retrieval gathers relevant precedents, subsequent steps pull specific statutory language, final steps access internal case notes and strategy documents. The iterative approach handles the hierarchical nature of legal reasoning.

Architecting multi-step RAG systems requires rethinking your infrastructure stack. Vector databases remain foundational, but orchestration becomes critical. Teams need workflow engines that can manage conditional retrieval logic, tracking state across multiple steps while maintaining performance.

Embedding model selection affects every retrieval step. CoRAG architectures amplify the impact of embedding quality because errors compound across the chain. Enterprises typically need multiple specialized embedding models: one for initial query understanding, others fine-tuned for specific document types in their knowledge base.
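One way to keep several embedding models straight is a registry keyed by document type, with a general model as the fallback. The class, the document types, and the toy lambda embedders are hypothetical. The one firm constraint the sketch encodes is that a collection must be queried with the same model that indexed it.

```python
from typing import Callable

Embedder = Callable[[str], list[float]]


class EmbeddingRegistry:
    """Maps each document type to the embedding model its collection was indexed with."""

    def __init__(self, default: Embedder):
        self.default = default  # e.g. a general-purpose model for query understanding
        self.by_doc_type: dict[str, Embedder] = {}

    def register(self, doc_type: str, embedder: Embedder) -> None:
        self.by_doc_type[doc_type] = embedder

    def for_collection(self, doc_type: str) -> Embedder:
        # A query must be embedded with the same model that indexed the collection;
        # vectors from different models are not comparable.
        return self.by_doc_type.get(doc_type, self.default)


# Toy embedders standing in for real models.
registry = EmbeddingRegistry(default=lambda text: [float(len(text))])
registry.register("contracts", lambda text: [1.0, float(text.count("shall"))])

contract_vec = registry.for_collection("contracts")("the party shall pay")
fallback_vec = registry.for_collection("emails")("hi")
```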

Prompt engineering complexity increases significantly. Each retrieval step requires carefully crafted prompts that guide the language model to identify information gaps and formulate targeted follow-up retrievals. These prompts must balance specificity with flexibility, avoiding brittle logic that breaks on edge cases.
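A common way to keep these prompts robust is to ask for a constrained output format and parse the reply defensively. The template wording and the `DONE` / `NEXT:` reply protocol below are assumptions for illustration, not a prescribed format.

```python
import re
from typing import Optional

# Hypothetical template; the DONE / NEXT: protocol is an assumption, not a standard.
FOLLOW_UP_PROMPT = """You are researching: {question}

Evidence gathered so far:
{evidence}

If the evidence is enough to answer, reply with exactly: DONE
Otherwise reply with one line: NEXT: <a focused search query for the missing information>"""


def build_follow_up_prompt(question: str, evidence: list[str]) -> str:
    # Number each evidence chunk so the model (and an auditor) can refer to it.
    numbered = "\n".join(f"[{i}] {e}" for i, e in enumerate(evidence, 1)) or "(none yet)"
    return FOLLOW_UP_PROMPT.format(question=question, evidence=numbered)


def parse_follow_up(reply: str) -> Optional[str]:
    # Return the next sub-query, or None when the model is done.
    # A reply with no usable NEXT: line is treated as done, so a malformed
    # answer ends the chain instead of looping on garbage.
    match = re.search(r"^\s*NEXT:\s*(.+)$", reply, flags=re.MULTILINE)
    if not match:
        return None
    query = match.group(1).strip()
    return query or None
```

Treating unparseable replies as "done" is a deliberate choice. It trades an occasional early stop for never issuing a nonsense retrieval.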

Cost modeling shifts from per-query to per-chain economics. Teams need monitoring that tracks complete retrieval chains, not just individual operations. A query that triggers four retrieval steps but resolves the user's need completely may deliver better ROI than a single-step query that leads to three follow-up questions.
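The comparison in the last sentence is easy to make concrete. The sketch counts everything spent to resolve one user need. Every unit cost is a made-up placeholder to replace with your own measurements. The assumptions that each follow-up costs one search plus one answer, and that each chain step needs one planning call, are ours.

```python
from dataclasses import dataclass


@dataclass
class UnitCosts:
    retrieval: float   # per vector search (placeholder units)
    generation: float  # per LLM call (placeholder units)
    user_turn: float   # value of the user's time per interaction (placeholder units)


def cost_per_resolution(retrievals: int, generations: int, user_turns: int, c: UnitCosts) -> float:
    # Everything spent to resolve one user need, across all steps and follow-ups.
    return retrievals * c.retrieval + generations * c.generation + user_turns * c.user_turn


costs = UnitCosts(retrieval=1.0, generation=5.0, user_turn=10.0)

# Four-step chain: 4 searches, 4 planning calls + 1 final answer, 1 user turn.
chain = cost_per_resolution(4, 5, 1, costs)
# Single-step query plus 3 follow-ups: each turn is 1 search + 1 answer.
single = cost_per_resolution(4, 4, 4, costs)

compute_only = UnitCosts(retrieval=1.0, generation=5.0, user_turn=0.0)
chain_compute = cost_per_resolution(4, 5, 1, compute_only)
single_compute = cost_per_resolution(4, 4, 4, compute_only)
```

With these placeholder prices the chain comes out ahead (39 vs 64 units), but only because the user's time is priced in. On compute alone, the single-step path is cheaper (24 vs 29). That sensitivity is why monitoring needs to track whole chains and sessions rather than individual calls.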

Data access patterns change fundamentally. Single-step RAG generates predictable, uniform load on vector databases. Multi-step systems create variable, bursty access patterns that require different capacity planning and caching strategies.
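When chains for related questions issue overlapping sub-queries, a small result cache in front of the vector database can absorb some of that burstiness. The sketch below assumes exact-match caching on a normalized sub-query string with least-recently-used eviction. `RetrievalCache` and its normalization rule are hypothetical. A production cache would also need expiry or invalidation when the index changes.

```python
from collections import OrderedDict
from typing import Callable


class RetrievalCache:
    def __init__(self, search: Callable[[str], list[str]], max_entries: int = 1024):
        self.search = search
        self.max_entries = max_entries
        self._entries: OrderedDict[str, list[str]] = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(sub_query: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share an entry.
        return " ".join(sub_query.lower().split())

    def get(self, sub_query: str) -> list[str]:
        key = self._key(sub_query)
        if key in self._entries:
            self.hits += 1
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        self.misses += 1
        results = self.search(sub_query)
        self._entries[key] = results
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict least recently used
        return results


calls: list[str] = []


def fake_search(sub_query: str) -> list[str]:
    calls.append(sub_query)  # count trips to the "vector database"
    return [f"chunk for {sub_query}"]


cache = RetrievalCache(fake_search, max_entries=2)
cache.get("Supplier lead times")
cache.get("supplier   lead times")  # same key after normalization: served from cache
```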

Shakudo enables enterprises to deploy both REALM and CoRAG architectures entirely within their VPC, supporting the vector databases, embedding models, and orchestration tools needed for adaptive multi-step retrieval while maintaining complete data sovereignty. This integrated approach allows teams to experiment with different RAG design patterns without vendor lock-in or data exposure, critical for regulated industries implementing knowledge-intensive AI systems.

The platform handles the infrastructure complexity of running coordinated retrieval chains across multiple tools, letting data science teams focus on optimizing architectural patterns rather than managing Kubernetes configurations and service mesh policies.

The shift from single-step to chain-of-retrieval patterns represents more than incremental improvement. It's a fundamental advancement in how enterprise AI systems handle knowledge-intensive tasks. Organizations that adopt these architectures gain simultaneous improvements in cost efficiency, answer quality, and operational transparency.

The path forward requires technical investment. Multi-step RAG systems are more complex to architect, deploy, and monitor than traditional approaches. But for enterprises operating at scale with complex internal knowledge bases, the economics are compelling. Reduced infrastructure costs, improved user satisfaction, and enhanced auditability create clear ROI.

Start by identifying query patterns in your current RAG deployment. Which questions require multiple user interactions to resolve? Where do simple queries incur unnecessary retrieval overhead? These patterns reveal opportunities for architectural refinement.
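If your deployment logs sessions, a short pass over the logs gives a first answer to both questions. The sketch assumes a hypothetical log record with three fields: `session_id`, `retrieved` (whether a vector search ran), and `context_used` (whether the answer cited any retrieved chunk). Real logs will differ, and "context used" in particular needs a proxy such as citation tracking. Counting turns per session is also only a rough proxy for "needed follow-ups".

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LogRecord:
    session_id: str
    query: str
    retrieved: bool     # did this turn run a vector search?
    context_used: bool  # did the answer cite any retrieved chunk?


def query_pattern_report(records: list[LogRecord]) -> dict[str, float]:
    turns = Counter(r.session_id for r in records)
    multi_turn = sum(1 for n in turns.values() if n > 1)
    searched = [r for r in records if r.retrieved]
    unused = sum(1 for r in searched if not r.context_used)
    return {
        # Sessions with follow-ups: candidates for multi-step retrieval.
        "multi_turn_session_rate": multi_turn / len(turns) if turns else 0.0,
        # Searches whose results went unused: candidates for a retrieval gate.
        "unused_retrieval_rate": unused / len(searched) if searched else 0.0,
    }


logs = [
    LogRecord("s1", "what is our refund window", True, True),
    LogRecord("s2", "compare vendor A and vendor B", True, True),
    LogRecord("s2", "what about their lead times", True, False),
    LogRecord("s3", "what does RAG stand for", True, False),
]
report = query_pattern_report(logs)
```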

The tools and frameworks for advanced RAG patterns are production-ready today. The question isn't whether these architectures will become standard, but how quickly your organization can implement them while competitors continue running expensive, inefficient single-step systems.

Ready to implement multi-step RAG within your infrastructure? Connect with our team to discuss deploying CoRAG architectures with complete data sovereignty and flexible experimentation across retrieval patterns.
