Recent advances in big data technologies have enabled many applications to perform tasks unimaginable just a short time ago, with use cases ranging from reverse image search to question-answering or even natural language semantic search for podcasts.

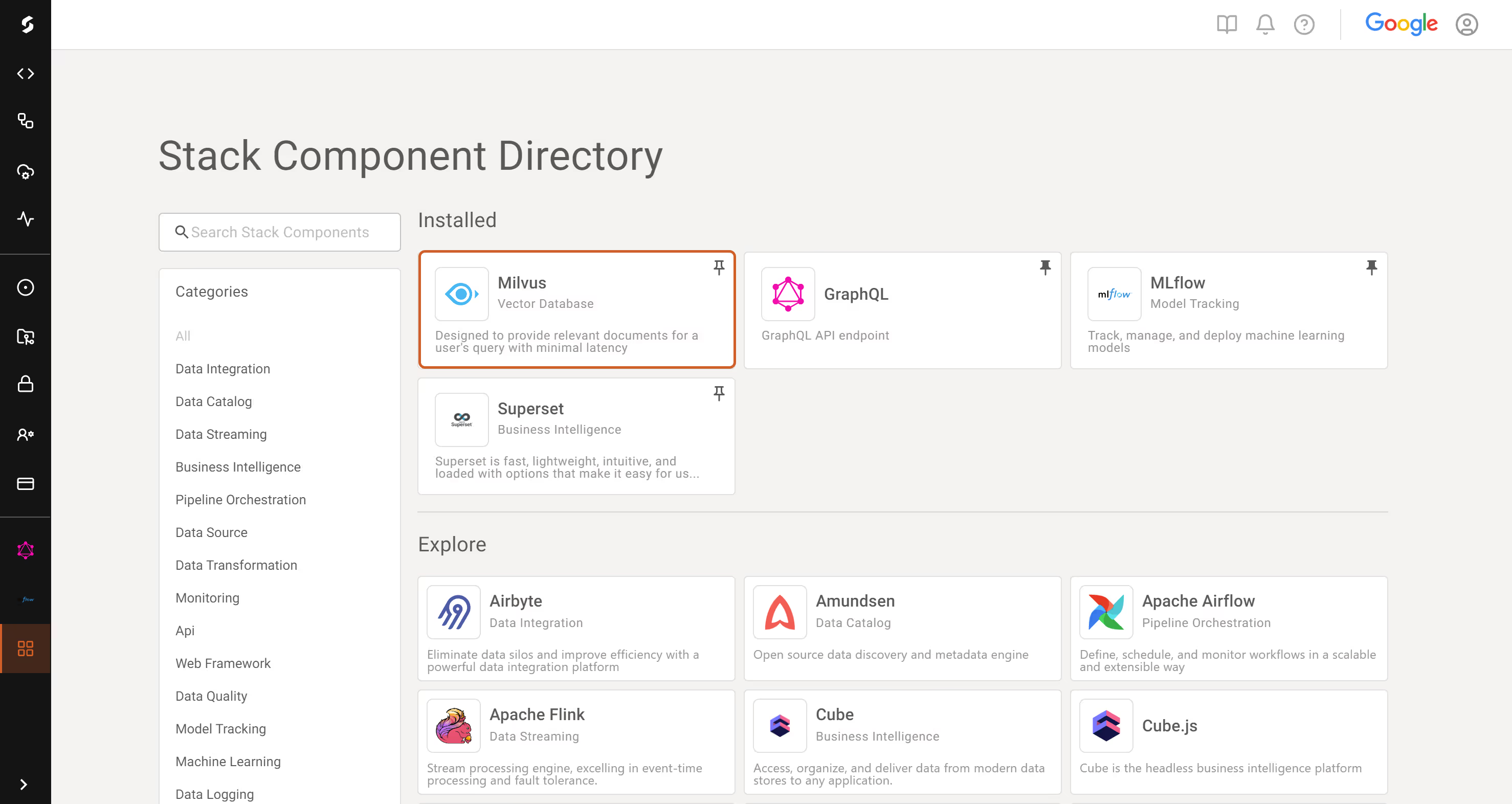

A critical component of such systems is a fast vector storage and search engine like Milvus, a vector database designed to provide relevant documents for a user's query with minimal latency. (If you are not familiar with vector databases or want to learn more, we covered vector database basics in a previous post.)

We’re excited to announce that Shakudo's unified infrastructure now seamlessly integrates with Milvus, simplifying deployment processes and centralizing data management. This integration also ensures smooth interoperability with other Shakudo services, such as Nvidia RAPIDS and Dask, thereby enhancing the reliability and performance of vector database operations.

Milvus, one of the most popular vector databases currently available, is known for its efficiency and high scalability.

In this blog post, we’ll cover essential operations on Milvus, from initialization and inserting your data into a fresh collection to indexing and vector search.

With Shakudo's built-in Milvus integration, there's no need for any special setup to access enterprise-grade features like auto-scaling, backup management, and failover capabilities. Complementing this, Shakudo's built-in monitoring and alerting mechanisms offer real-time metrics on Milvus' health and performance. Simply initiate the Milvus service through Shakudo, and you're instantly ready to work with vectors.

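The following sections of this blog post will walk you through this workflow with Milvus: connecting to the service, creating a collection, inserting data, building an index, running a vector search, and cleaning up.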

We will assume that the required parameters to perform the following operations have already been set up by the user in their environment.

Milvus requires a connection be established before any other operation is performed. We assume that the Milvus host and port are in the environment (see below). The default Milvus port is 19530. On Shakudo, the host for the Milvus instance will have a name that looks like `milvus.hyperplane-milvus.svc.cluster.local`, following Shakudo’s straightforward naming convention of `stack-component.hyperplane-stack-component.svc.cluster.local`.

The connection alias is used to differentiate between multiple active connections, if applicable. Other operations in Milvus use the connection with the alias `default` when no other alias is provided, so we can save a little typing by opening our single connection under this alias. Note that connecting to Milvus does not return a connection object to manage. Instead, the alias itself is reused for subsequent operations, such as closing the connection during the cleanup phase.
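A minimal sketch of the connection step could look like the following. The environment variable names `MILVUS_HOST` and `MILVUS_PORT` and both helper functions are assumptions made for illustration, not names required by Milvus or Shakudo; `connections.connect` is the PyMilvus call that opens the connection.

```python
import os


def milvus_connection_params(env=None):
    """Collect connection settings, falling back to the default Milvus port."""
    env = os.environ if env is None else env
    return {
        "alias": "default",  # alias other PyMilvus calls use when none is given
        "host": env.get("MILVUS_HOST", "localhost"),  # assumed variable name
        "port": env.get("MILVUS_PORT", "19530"),      # assumed variable name
    }


def connect_to_milvus(env=None):
    """Open the connection and return its alias for later reuse."""
    # Imported here so the settings helper also works without PyMilvus installed.
    from pymilvus import connections

    params = milvus_connection_params(env)
    connections.connect(**params)  # returns nothing; the alias is the handle
    return params["alias"]
```

On Shakudo, `MILVUS_HOST` would hold a name such as `milvus.hyperplane-milvus.svc.cluster.local`.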

While Milvus also supports creating databases, which allows setting permissions on a set of collections for finer-grained control, this setup is optional and we will not cover it here. Instead, we will set up a collection for the WikiHow dataset, which can be downloaded through this link. For demonstration purposes, only the WikiHow article title will be embedded as a vector. The rest of the document, built by concatenating the section headlines and section texts, will be stored in Milvus and returned alongside the vector search results. We will use a single vector field, although that is not a limitation of Milvus. Additionally, we will structure the fields in the collection to adhere to a minimal LangChain-compatible schema, to demonstrate how to prepare Milvus for use with this highly popular LLM framework.

LangChain itself has native support for Milvus through the vectorstores module, which allows adding documents in the right format directly and performing similarity search without much further setup. On the other hand, the LangChain integration for Milvus is not very flexible in terms of operations it can perform, while the PyMilvus interface gives us full control.

The primary key should be called `pk` and be `auto_id` with type `INT64` for compatibility with LangChain. Moreover, the vector field should be called `vector` and the document as a whole would be accessed or inserted by LangChain in the `text` field if working through it instead of directly with the PyMilvus interface.

We set the `consistency_level` to `Session`, which ensures we will read our own writes, even though the store may not yet be fully consistent with the writes of other sessions at the time we perform a query. In this example, we set `enable_dynamic_field` to `False`, meaning that Milvus will enforce the provided schema instead of operating in dynamic schema mode.
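The schema described above could be declared roughly as follows. This is a sketch rather than the exact code from our notebook: the function name, the collection name passed to it, and the description string are illustrative, and `dim` has to match the output size of whichever embedding model is used.

```python
PRIMARY_FIELD = "pk"     # auto-generated INT64 key, named for LangChain
TEXT_FIELD = "text"      # full document text
VECTOR_FIELD = "vector"  # embedding of the article title


def create_wikihow_collection(name, dim, alias="default"):
    """Create a collection with a minimal LangChain-compatible schema."""
    # Imported here so the field names above can be reused without PyMilvus.
    from pymilvus import Collection, CollectionSchema, DataType, FieldSchema

    fields = [
        FieldSchema(name=PRIMARY_FIELD, dtype=DataType.INT64,
                    is_primary=True, auto_id=True),
        # 65535 is the largest VARCHAR length Milvus accepts.
        FieldSchema(name=TEXT_FIELD, dtype=DataType.VARCHAR, max_length=65535),
        FieldSchema(name=VECTOR_FIELD, dtype=DataType.FLOAT_VECTOR, dim=dim),
    ]
    schema = CollectionSchema(
        fields=fields,
        description="WikiHow articles indexed by title embedding",
        enable_dynamic_field=False,  # enforce the schema exactly as declared
    )
    # `using` selects the connection alias opened earlier.
    return Collection(name=name, schema=schema, using=alias,
                      consistency_level="Session")
```

Because `pk` is declared with `auto_id=True`, Milvus generates the keys itself and inserts only need to supply the `text` and `vector` columns.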

Now that we have a connection and a collection, we can start inserting data in our Milvus store. First, we need a suitable dataset (in this case, the WikiHow dataset linked above). Next, the data needs to be processed. For brevity, we omit this step from this post, but see our IPython notebook (a fully working example that only needs the right environment variables configured on Shakudo) for the full details.

For data processing, Shakudo supports integration with many common tools, such as Nvidia RAPIDS, previously discussed on our blog, or Dask, for which Shakudo provides an easy-to-use interface, as discussed in our documentation. A list of Shakudo’s current integrations is available at this link.

We use a small BERT model for embeddings, using LangChain:
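One way this step might look is sketched below. The model name is a stand-in chosen for illustration, since any small sentence-embedding model exposed through LangChain's `HuggingFaceEmbeddings` would fit; the import path also depends on the installed LangChain version, and both helper names are hypothetical.

```python
def load_embedder(model_name="sentence-transformers/all-MiniLM-L6-v2"):
    """Load an embedding model through LangChain (default model is an assumption)."""
    # Older LangChain releases expose this class under langchain.embeddings.
    from langchain_community.embeddings import HuggingFaceEmbeddings

    return HuggingFaceEmbeddings(model_name=model_name)


def embed_titles(embedder, titles):
    """Return one embedding (a list of floats) per article title."""
    titles = list(titles)
    vectors = embedder.embed_documents(titles)
    if len(vectors) != len(titles):
        raise ValueError("embedder returned an unexpected number of vectors")
    return vectors
```

The length of each returned vector is the `dim` value the collection's vector field must be created with.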

Armed with our embeddings and dataset, inserting documents in Milvus is as simple as:
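A sketch of the insert step, assuming a collection whose non-key fields are `text` followed by `vector`: PyMilvus accepts column-wise data, so each call passes one list per field. The helper name and the batch size are illustrative choices, not values taken from our notebook.

```python
def insert_documents(collection, texts, vectors, batch_size=1000):
    """Insert texts and their embeddings column-wise, one batch at a time."""
    if len(texts) != len(vectors):
        raise ValueError("texts and vectors must have the same length")
    inserted = 0
    for start in range(0, len(texts), batch_size):
        stop = start + batch_size
        # Columns follow the schema order; the auto-generated `pk` is omitted.
        collection.insert([texts[start:stop], vectors[start:stop]])
        inserted += len(texts[start:stop])
    return inserted
```

Batching keeps each request small, which helps when the dataset holds many long documents.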

Once we are done inserting data in Milvus, we flush the collection to ensure the data is properly persisted. Searches can be quite slow without an index, so we create one before loading the collection. The collection must then be loaded into memory before searches can be performed against it.
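The flush, index, and load sequence could be sketched as below. The index type, metric, and `nlist` value are assumptions picked for illustration; the Milvus documentation describes the alternatives and their trade-offs.

```python
def flush_index_and_load(collection, vector_field="vector", nlist=1024):
    """Persist inserted data, build a vector index, and load the collection."""
    collection.flush()  # seal pending inserts so they are persisted
    index_params = {
        "index_type": "IVF_FLAT",  # assumed index type
        "metric_type": "L2",       # assumed metric; searches must use the same one
        "params": {"nlist": nlist},
    }
    collection.create_index(field_name=vector_field, index_params=index_params)
    collection.load()  # bring the collection into memory for searching
    return index_params
```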

Now that our data is stored and indexed in Milvus, we can find relevant documents with the `search` function. For scalar searches, Milvus also supports a `query` method, but we will not be covering that in this blog post.

The search parameters are described in the Milvus documentation in more detail and are also covered in Shakudo’s documentation about the Milvus integration.

Milvus expects a list of embeddings to search for and will return `limit` matches for each of the input vectors. Since we specify a limit of 1 and provide only one vector to the search, the lone result can be read by indexing through to the first vector’s first match.
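The indexing described above might look like this in practice. The search parameters (`L2` metric, `nprobe` of 10) are assumed values that need to agree with the index actually built, and the helper name is hypothetical.

```python
def top_match(collection, query_vector, vector_field="vector", text_field="text"):
    """Return (distance, text) for the closest stored vector, or None if empty."""
    results = collection.search(
        data=[query_vector],      # a list of query vectors, here just one
        anns_field=vector_field,  # the vector field to search against
        param={"metric_type": "L2", "params": {"nprobe": 10}},  # assumed values
        limit=1,                  # one match per query vector
        output_fields=[text_field],
    )
    hits = results[0]  # matches for the first (and only) query vector
    if not hits:
        return None
    best = hits[0]     # first match for that vector
    return best.distance, best.entity.get(text_field)
```

The query vector itself would come from the same embedding model used at insert time, for example through LangChain's `embed_query` method.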

For a test query on Shakudo, Milvus returns a great match at interactive speeds. Success!

To avoid Milvus’ nodes using unneeded resources, we simply release the collection once the search is done, and then close the connection when we are ready to stop using Milvus.
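A small cleanup helper, sketched under the assumption that the connection was opened under the `default` alias. The `connections` argument is expected to be the `pymilvus.connections` object; passing it in is just a design choice of this sketch, and the helper name is hypothetical.

```python
def release_and_disconnect(collection, connections, alias="default"):
    """Free query-node memory, then close the connection identified by alias."""
    try:
        collection.release()  # unload the collection from memory
    finally:
        # Close the connection even if releasing the collection fails.
        connections.disconnect(alias)


# Typical call against a live server:
#   from pymilvus import connections
#   release_and_disconnect(collection, connections)
```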

That’s it — you are now ready to implement your document retrieval applications with Milvus on Shakudo! Come back soon for more on how to build state-of-the-art data apps in minutes with Shakudo, the operating system for data stacks.

Shakudo is the ideal solution for businesses that want to build a flexible, unified data stack that can grow with their needs. To learn more, read Roger Mahoula's blog post on how to achieve this with no vendor lock-in or infrastructure maintenance required. Register for a personalized demo to see how Shakudo can help you build your customized data stack.

.avif)

Recent advances in big data technologies have enabled many applications to perform tasks unimaginable just a short time ago, with use cases ranging from reverse image search to question-answering or even natural language semantic search for podcasts.

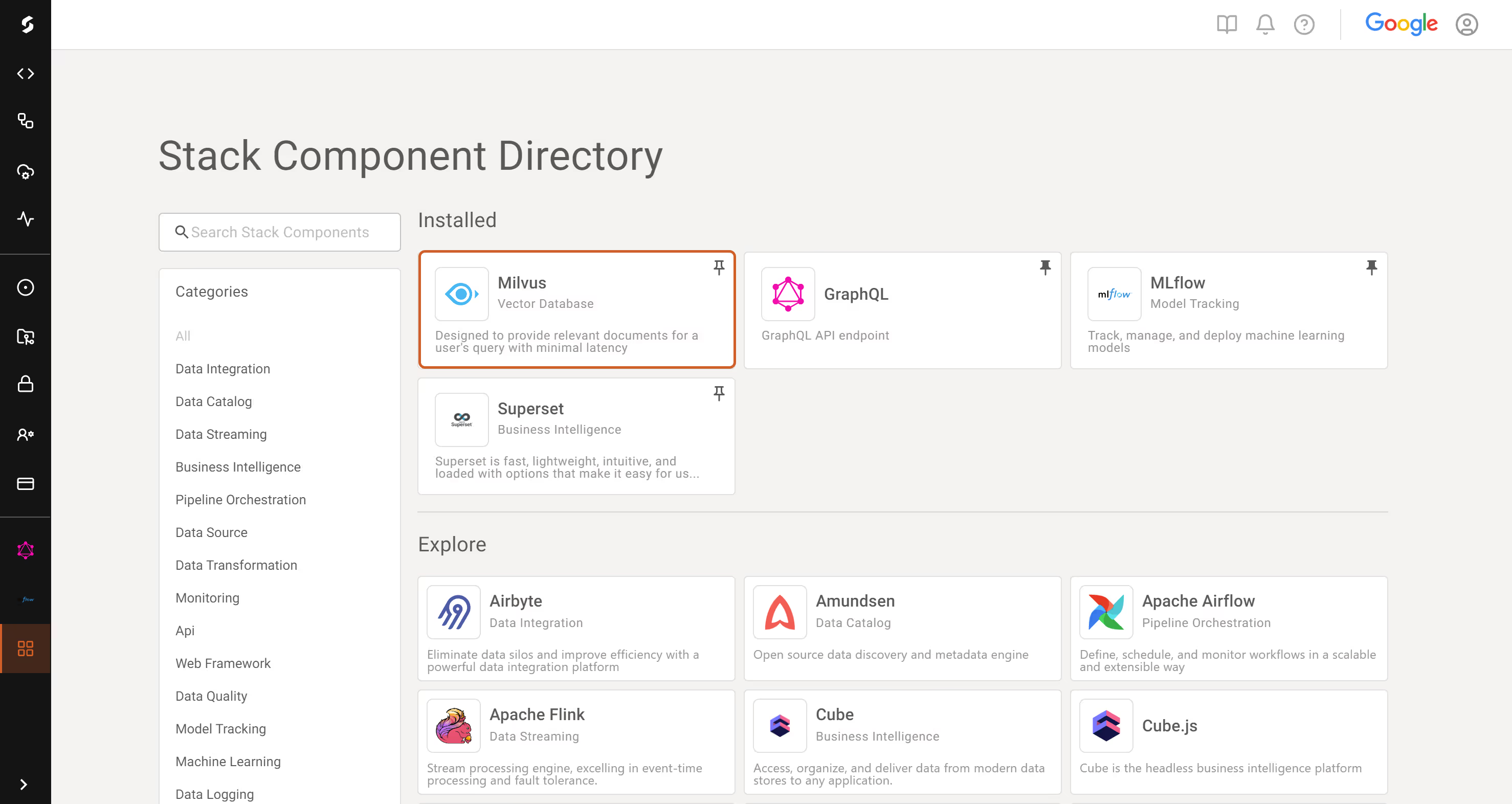

A critical component of such systems is a fast vector storage and search engine like Milvus, a vector database designed to provide relevant documents for a user's query with minimal latency. (If you are not familiar with vector databases or want to learn more, we covered vector database basics in a previous post.)

We’re excited to announce that Shakudo's unified infrastructure now seamlessly integrates with Milvus, simplifying deployment processes and centralizing data management. This integration also ensures smooth interoperability with other Shakudo services, such as Nvidia RAPIDS and Dask, thereby enhancing the reliability and performance of vector database operations.

Milvus is one of the most popular vector databases currently available — is known for its efficiency and high scalability.

In this blogpost, we’ll cover essential operations on Milvus, from initialization and inserting your data into a fresh collection, to indexing and vector search.

With Shakudo's built-in Milvus integration, there's no need for any special setup to access enterprise-grade features like auto-scaling, backup management, and failover capabilities. Complementing this, Shakudo's built-in monitoring and alerting mechanisms offer real-time metrics on Milvus' health and performance. Simply initiate the Milvus service through Shakudo, and you're instantly ready to work with vectors.

The following sections of this blog post will walk you through this workflow with Milvus:

We will assume that the required parameters to perform the following operations have already been set up by the user in their environment.

Milvus requires a connection be established before any other operation is performed. We assume that the Milvus host and port are in the environment (see below). The default Milvus port is 19530. On Shakudo, the host for the Milvus instance will have a name that looks like `milvus.hyperplane-milvus.svc.cluster.local`, following Shakudo’s straightforward naming convention of `stack-component.hyperplane-stack-component.svc.cluster.local`.

The connection alias is used to differentiate between multiple active connections, if applicable. Other operations in Milvus will use the connection with the alias `default` when another alias is not provided, so we can save a little typing by starting our single connection with this alias in this case. Note that connecting to Milvus does not create a connection state or management object. Instead, the connection alias will be reused for subsequent operations, such as closing it during the cleanup phase.

While Milvus also supports creating databases, which allows setting permissions on a set of collections for finer-grained control, performing this setup is optional and we will not be covering it here. Instead, we will set up a collection for the WikiHow dataset, which can be downloaded through this link. For demonstration purposes, only the WikiHow article title will be embedded as a vector (the rest of the document, built from concatenating the section headlines and section texts, will be stored in Milvus and retrieved through the vector search), and we will be using a single vector field, although that is not a limitation of Milvus. Additionally, we will structure the fields in the collection to adhere to a minimal LangChain-compatible schema, to demonstrate how we would prepare Milvus for operation with this highly popular LLM framework.

LangChain itself has native support for Milvus through the vectorstores module, which allows adding documents in the right format directly and performing similarity search without much further setup. On the other hand, the LangChain integration for Milvus is not very flexible in terms of operations it can perform, while the PyMilvus interface gives us full control.

The primary key should be called `pk` and be `auto_id` with type `INT64` for compatibility with LangChain. Moreover, the vector field should be called `vector` and the document as a whole would be accessed or inserted by LangChain in the `text` field if working through it instead of directly with the PyMilvus interface.

We set the `consistency_level` to `Session`, which ensures we will read our own writes, even though the store may not yet be fully consistent with the writes of other sessions at the time we perform a query. In this example, we set `enable_dynamic_fields` to `False`, meaning that Milvus will enforce the provided schema, instead of operating in dynamic schema mode.

Now that we have a connection and a collection, we can start inserting data in our Milvus store. First, we need to actually acquire a suitable dataset (in this case, we will use the WikiHow dataset, linked above). Next, the data needs to be processed. For brevity, we omitted this step from this post, but see our ipython notebook (containing a fully working example that merely needs to be configured with the right environment variables on Shakudo) for the full details.

For data processing, Shakudo supports integration with many common tools, such as Nvidia RAPIDS, previously discussed on our blog, or Dask, for which Shakudo provides an easy-to-use interface, as discussed in our documentation. A list of Shakudo’s current integrations is available at this link.

We use a small BERT model for embeddings, using LangChain:

Armed with our embeddings and dataset, inserting documents in Milvus is as simple as:

Once we are done inserting data in Milvus, we flush the collection to ensure the data is properly persisted. Note that search results can be quite slow without an index defined, so we create one before loading the data. The collection must be `loaded` in order to perform searches against it.

Now that our data is stored and indexed in Milvus, and after creating our index to ensure good performance for our searches, we can find relevant documents with the `search` function. For scalar searches, Milvus also supports a `query` method, but we will not be covering that in this blog.

The search parameters are described in the Milvus documentation in more detail and are also covered in Shakudo’s documentation about the Milvus integration.

Milvus expects a list of embeddings to search for and will return `limit` matches for each of the input vectors. Since we specify a limit of 1 and only provide 1 tensor to the search, the lone search result’s data can be queried as shown in the code by indexing through to the first tensor’s first match.

And for a test query:

On Shakudo, Milvus returns a great match at interactive speeds. Success!

To avoid Milvus’ nodes using unneeded resources, we simply release the collection once the search is done, and then close the connection when we are ready to stop using Milvus

That’s it — you are now ready to implement your document retrieval applications with Milvus on Shakudo! Come back soon for more on how to build state-of-the-art data apps in minutes with Shakudo, the operating system for data stacks.

Shakudo is the ideal solution for businesses that want to build a flexible, unified data stack that can grow with their needs. To learn more, read Roger Mahoula's blog post on how to achieve this with no vendor lock-in or infrastructure maintenance required. Register for a personalized demo to see how Shakudo can help you build your customized data stack.

Recent advances in big data technologies have enabled many applications to perform tasks unimaginable just a short time ago, with use cases ranging from reverse image search to question-answering or even natural language semantic search for podcasts.

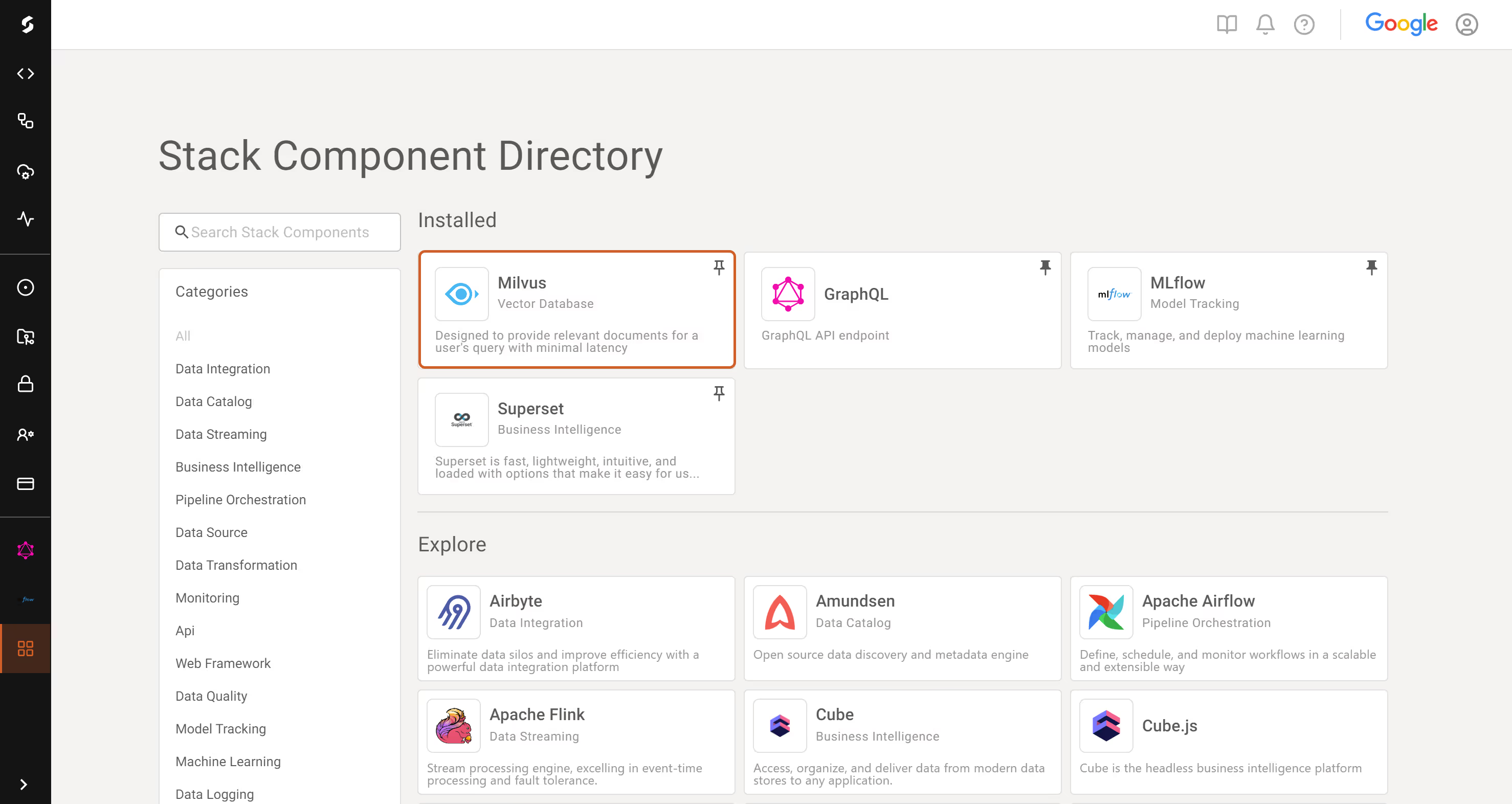

A critical component of such systems is a fast vector storage and search engine like Milvus, a vector database designed to provide relevant documents for a user's query with minimal latency. (If you are not familiar with vector databases or want to learn more, we covered vector database basics in a previous post.)

We’re excited to announce that Shakudo's unified infrastructure now seamlessly integrates with Milvus, simplifying deployment processes and centralizing data management. This integration also ensures smooth interoperability with other Shakudo services, such as Nvidia RAPIDS and Dask, thereby enhancing the reliability and performance of vector database operations.

Milvus is one of the most popular vector databases currently available — is known for its efficiency and high scalability.

In this blogpost, we’ll cover essential operations on Milvus, from initialization and inserting your data into a fresh collection, to indexing and vector search.

With Shakudo's built-in Milvus integration, there's no need for any special setup to access enterprise-grade features like auto-scaling, backup management, and failover capabilities. Complementing this, Shakudo's built-in monitoring and alerting mechanisms offer real-time metrics on Milvus' health and performance. Simply initiate the Milvus service through Shakudo, and you're instantly ready to work with vectors.

The following sections of this blog post will walk you through this workflow with Milvus:

We will assume that the required parameters to perform the following operations have already been set up by the user in their environment.

Milvus requires a connection be established before any other operation is performed. We assume that the Milvus host and port are in the environment (see below). The default Milvus port is 19530. On Shakudo, the host for the Milvus instance will have a name that looks like `milvus.hyperplane-milvus.svc.cluster.local`, following Shakudo’s straightforward naming convention of `stack-component.hyperplane-stack-component.svc.cluster.local`.

The connection alias is used to differentiate between multiple active connections, if applicable. Other operations in Milvus will use the connection with the alias `default` when another alias is not provided, so we can save a little typing by starting our single connection with this alias in this case. Note that connecting to Milvus does not create a connection state or management object. Instead, the connection alias will be reused for subsequent operations, such as closing it during the cleanup phase.

While Milvus also supports creating databases, which allows setting permissions on a set of collections for finer-grained control, performing this setup is optional and we will not be covering it here. Instead, we will set up a collection for the WikiHow dataset, which can be downloaded through this link. For demonstration purposes, only the WikiHow article title will be embedded as a vector (the rest of the document, built from concatenating the section headlines and section texts, will be stored in Milvus and retrieved through the vector search), and we will be using a single vector field, although that is not a limitation of Milvus. Additionally, we will structure the fields in the collection to adhere to a minimal LangChain-compatible schema, to demonstrate how we would prepare Milvus for operation with this highly popular LLM framework.

LangChain itself has native support for Milvus through the vectorstores module, which allows adding documents in the right format directly and performing similarity search without much further setup. On the other hand, the LangChain integration for Milvus is not very flexible in terms of operations it can perform, while the PyMilvus interface gives us full control.

The primary key should be called `pk` and be `auto_id` with type `INT64` for compatibility with LangChain. Moreover, the vector field should be called `vector` and the document as a whole would be accessed or inserted by LangChain in the `text` field if working through it instead of directly with the PyMilvus interface.

We set the `consistency_level` to `Session`, which ensures we will read our own writes, even though the store may not yet be fully consistent with the writes of other sessions at the time we perform a query. In this example, we set `enable_dynamic_fields` to `False`, meaning that Milvus will enforce the provided schema, instead of operating in dynamic schema mode.

Now that we have a connection and a collection, we can start inserting data in our Milvus store. First, we need to actually acquire a suitable dataset (in this case, we will use the WikiHow dataset, linked above). Next, the data needs to be processed. For brevity, we omitted this step from this post, but see our ipython notebook (containing a fully working example that merely needs to be configured with the right environment variables on Shakudo) for the full details.

For data processing, Shakudo supports integration with many common tools, such as Nvidia RAPIDS, previously discussed on our blog, or Dask, for which Shakudo provides an easy-to-use interface, as discussed in our documentation. A list of Shakudo’s current integrations is available at this link.

We use a small BERT model for embeddings, using LangChain:

Armed with our embeddings and dataset, inserting documents in Milvus is as simple as:

Once we are done inserting data in Milvus, we flush the collection to ensure the data is properly persisted. Note that search results can be quite slow without an index defined, so we create one before loading the data. The collection must be `loaded` in order to perform searches against it.

Now that our data is stored and indexed in Milvus, and after creating our index to ensure good performance for our searches, we can find relevant documents with the `search` function. For scalar searches, Milvus also supports a `query` method, but we will not be covering that in this blog.

The search parameters are described in the Milvus documentation in more detail and are also covered in Shakudo’s documentation about the Milvus integration.

Milvus expects a list of embeddings to search for and will return `limit` matches for each of the input vectors. Since we specify a limit of 1 and only provide 1 tensor to the search, the lone search result’s data can be queried as shown in the code by indexing through to the first tensor’s first match.

And for a test query:

On Shakudo, Milvus returns a great match at interactive speeds. Success!

To avoid Milvus’ nodes using unneeded resources, we simply release the collection once the search is done, and then close the connection when we are ready to stop using Milvus

That’s it — you are now ready to implement your document retrieval applications with Milvus on Shakudo! Come back soon for more on how to build state-of-the-art data apps in minutes with Shakudo, the operating system for data stacks.

Shakudo is the ideal solution for businesses that want to build a flexible, unified data stack that can grow with their needs. To learn more, read Roger Mahoula's blog post on how to achieve this with no vendor lock-in or infrastructure maintenance required. Register for a personalized demo to see how Shakudo can help you build your customized data stack.