The viral, bottom-up adoption of generative AI assistants like ChatGPT and Microsoft Copilot is undeniable and, on the surface, a clear victory for productivity. Employees are leveraging these tools to draft emails, summarize documents, and generate code snippets with unprecedented speed, creating immense internal pressure on technology leaders to deploy them at scale. The appeal is tangible; the efficiency gains for individual tasks are immediate and compelling.

This creates a profound paradox for the modern technology executive. The very tools that empower individuals can introduce systemic risk and strategic debt to the enterprise as a whole. The frictionless experience for an employee masks a web of complex security, compliance, and integration challenges for the organization. The ease of use that drives adoption also sets a dangerously misleading precedent. Employees and even business leaders come to perceive AI as a kind of magic—instant, free or low-cost, and requiring no foundational work. When technology leaders then propose a more robust, strategic approach that requires investment in data governance, secure infrastructure, and operational discipline, it can be perceived as bureaucratic and slow. The tactical win for employee productivity thus creates a cultural and political headwind against building a durable, long-term AI capability.

The strategic question, therefore, is not whether the enterprise should use AI, but how. How do we mature from scattered pockets of AI-driven efficiency to a secure, scalable, and integrated enterprise AI foundation that becomes a true competitive moat? This requires looking past the immediate allure of generic assistants and confronting the seven critical gaps they leave open.

The most significant and immediate risk posed by public AI tools is the loss of control over an organization's most valuable asset: its data. When employees input sensitive information—unannounced financial data, proprietary source code, customer personally identifiable information (PII), or confidential legal strategies—into a third-party-hosted AI tool, that data leaves the secure perimeter of the enterprise. This act fundamentally compromises the principle of data sovereignty.

These tools often operate as a black box, providing little to no transparency into how input data is stored, who has access to it, or whether it is used to train the vendor's future models. This creates a direct and unacceptable risk of data leakage, where a company's confidential information could be inadvertently regurgitated in a response to another user, who could be a competitor. This lack of control creates a compliance nightmare. Regulations like the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and the California Consumer Privacy Act (CCPA) impose strict requirements on data processing, residency, and consent. Using a public AI tool for regulated data can lead to severe financial penalties, loss of customer trust, and lasting reputational damage, as the burden of compliance remains with the enterprise even when control is abdicated to a vendor.

This reality exposes a deep philosophical conflict between the operational model of public AI and the security posture of the modern enterprise. Today's security architectures are built on a "zero-trust" model: never trust, always verify, and assume breach. Access is granular, monitored, and meticulously controlled. Public AI tools, by contrast, operate on a model of "implicit trust," forcing a Chief Information Security Officer (CISO) to entrust the organization's most sensitive data to a vendor's opaque security promises and multi-tenant architecture. This is not a technical gap; it is a fundamental misalignment of security principles. The only truly defensible posture is to bring AI capabilities to the data, not the other way around. That makes an architecture in which models and applications run inside the enterprise's own Virtual Private Cloud (VPC) a non-negotiable prerequisite for any serious AI initiative, because it extends the zero-trust perimeter to encompass AI workloads rather than contradicting it.
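To make "bring AI to the data" concrete, here is a minimal sketch of an egress check in which only data explicitly classified as public may reach a model hosted outside the enterprise perimeter, while unclassified data is treated as restricted. The `Sensitivity` levels, the `Endpoint` type, the `is_allowed` helper, and the endpoint names are all hypothetical. A real deployment would rely on its own data-classification service, identity checks, and network controls rather than a single function.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Sensitivity(Enum):
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2  # e.g. customer PII, unannounced financials, source code


@dataclass(frozen=True)
class Endpoint:
    name: str
    in_vpc: bool  # True if the model runs inside the enterprise's own VPC


def is_allowed(sensitivity: Optional[Sensitivity], endpoint: Endpoint) -> bool:
    """Decide whether a prompt of the given sensitivity may be sent to an endpoint."""
    # Unclassified data is treated as RESTRICTED: deny by default.
    level = sensitivity if sensitivity is not None else Sensitivity.RESTRICTED
    if endpoint.in_vpc:
        # The data never leaves the enterprise perimeter. Caller identity and
        # access rights would still be verified elsewhere (not shown).
        return True
    # Only data explicitly classified as PUBLIC may leave the perimeter.
    return level is Sensitivity.PUBLIC


# Hypothetical endpoints, for illustration only.
internal_llm = Endpoint("internal-llm", in_vpc=True)
public_assistant = Endpoint("public-assistant", in_vpc=False)

print(is_allowed(Sensitivity.RESTRICTED, internal_llm))      # True
print(is_allowed(Sensitivity.RESTRICTED, public_assistant))  # False
print(is_allowed(None, public_assistant))                    # False
```

Treating unclassified data as restricted is a deliberate design choice here: it mirrors the zero-trust stance of verifying before trusting, at the cost of occasionally blocking harmless requests.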

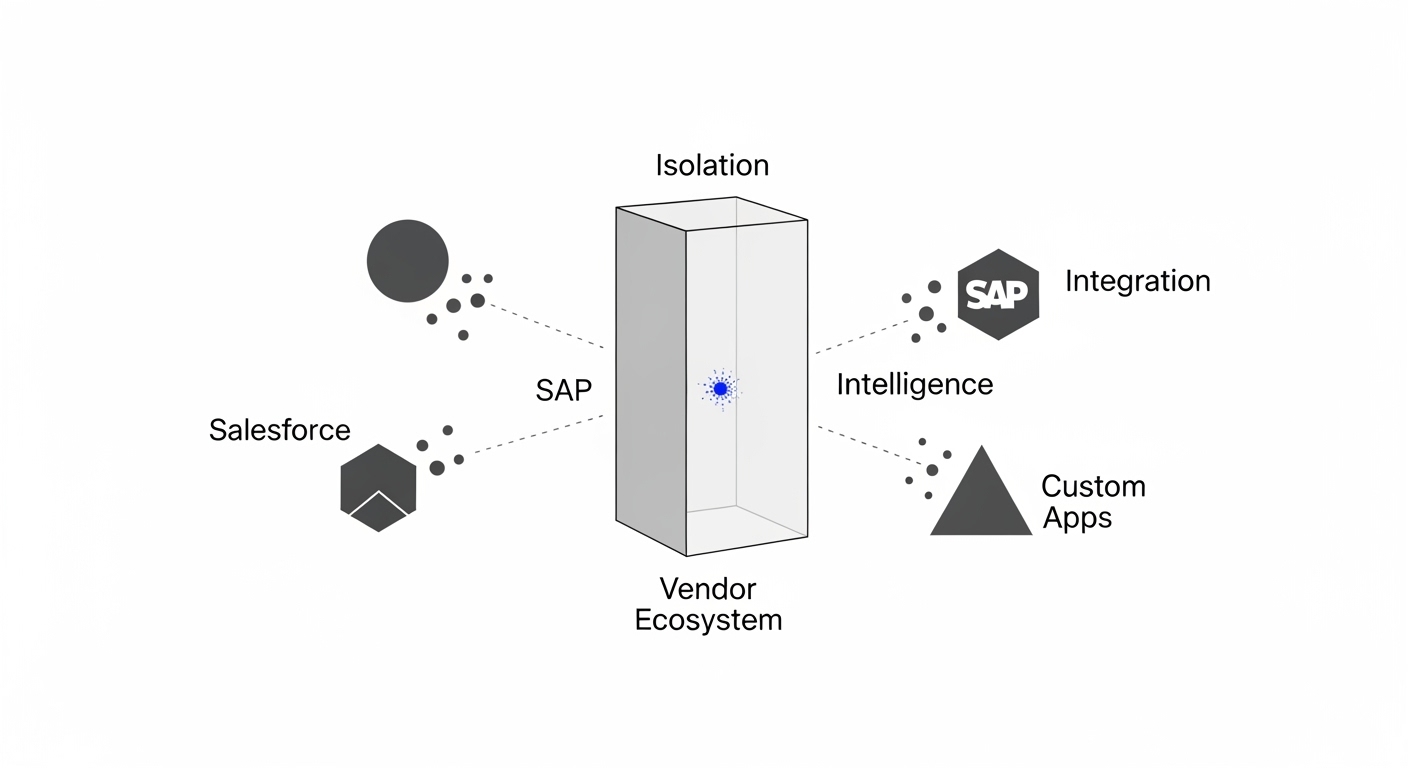

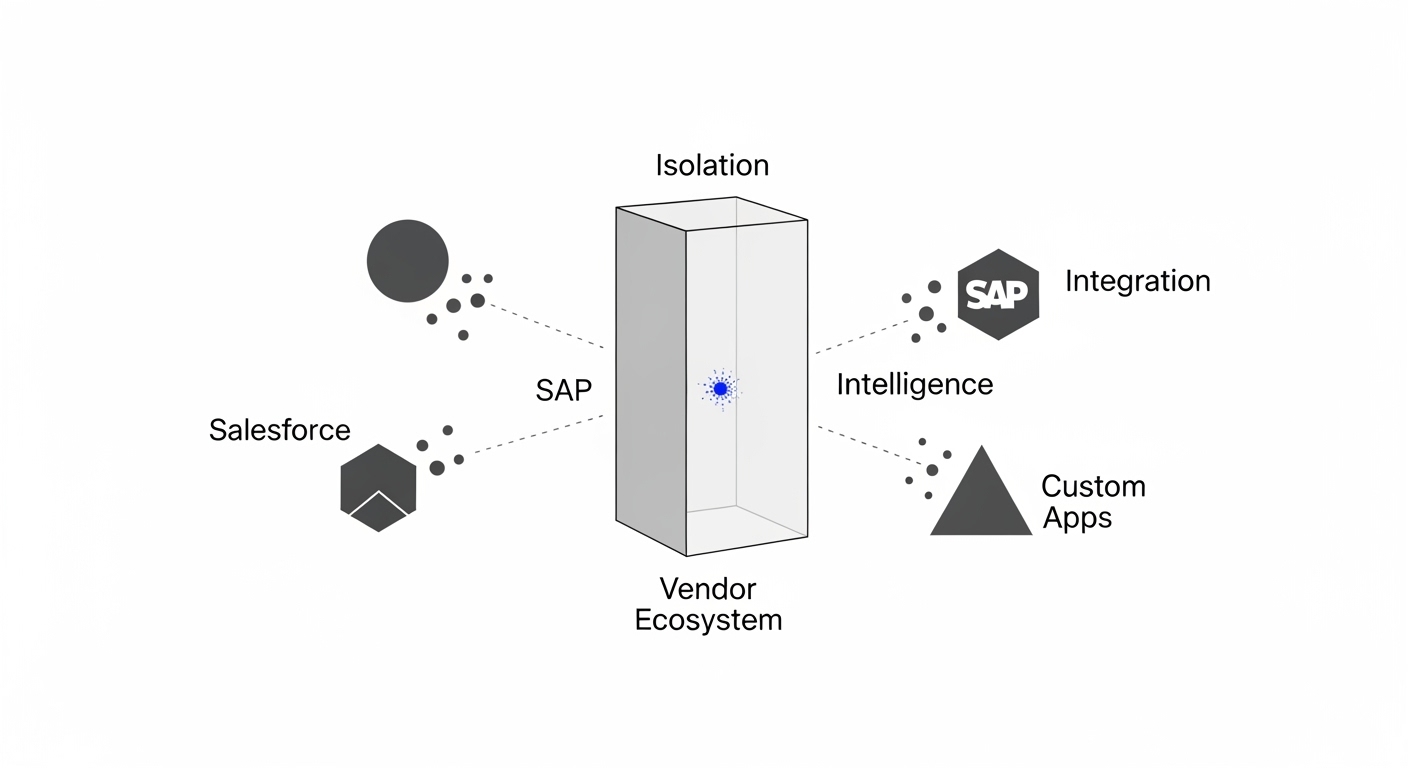

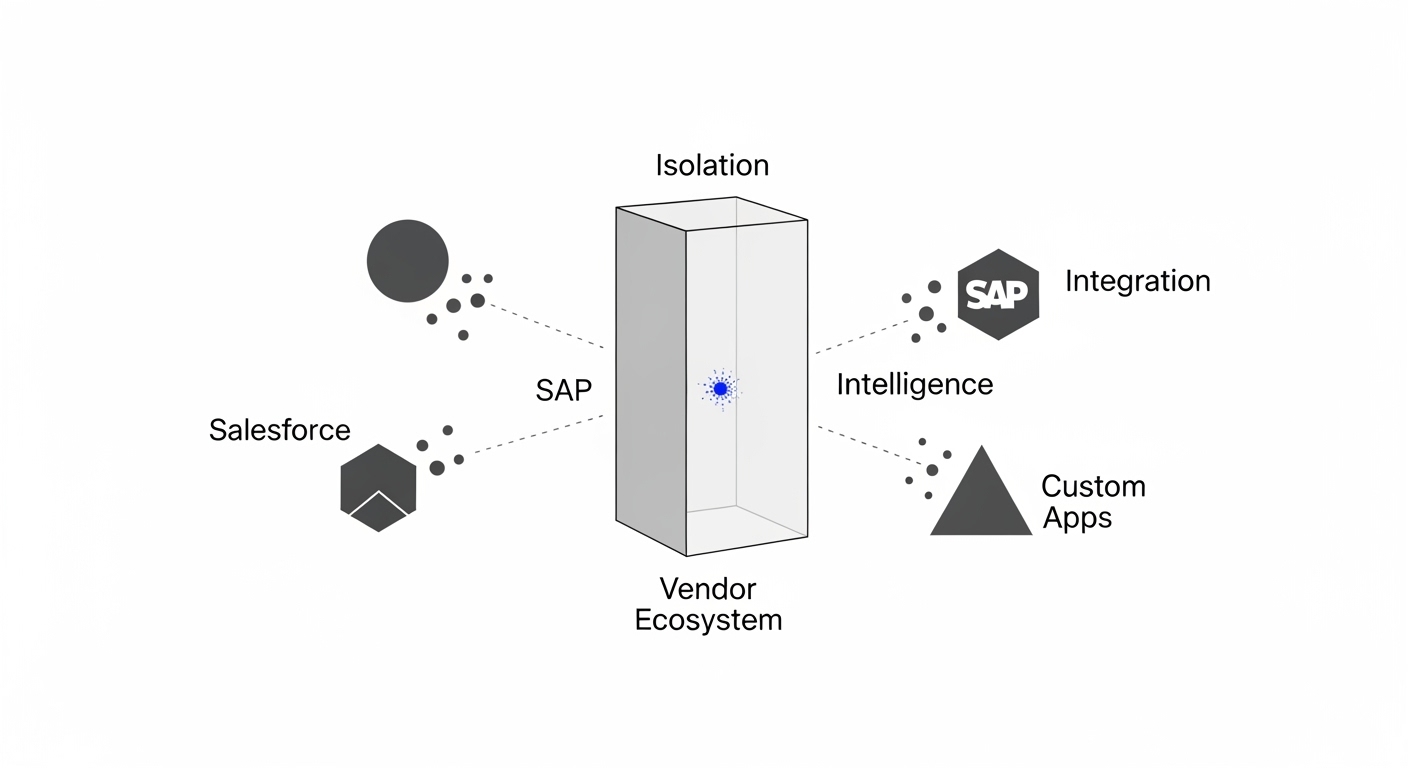

Microsoft Copilot is marketed on its deep integration within the Microsoft 365 suite, offering powerful features for users of Word, Excel, and Teams. While this provides a seamless experience for tasks confined to that ecosystem, it creates a strategic vulnerability for the enterprise: a walled garden that isolates intelligence.

The modern enterprise is a heterogeneous environment. Mission-critical data does not live solely in Microsoft products; it resides in Salesforce, Confluence, Jira, Slack, SAP, Snowflake, and countless bespoke internal applications. A tool that cannot natively access and reason over these disparate data sources cannot provide true enterprise-wide intelligence. It can only optimize tasks within its own silo, reinforcing the very information barriers that technology leaders have spent decades trying to dismantle. This limitation reframes the concept of vendor lock-in. Historically, lock-in was a financial and operational concern centered on high switching costs. In the AI era, it becomes a strategic constraint on intelligence itself. By tethering an AI strategy to a single vendor's ecosystem, an organization is not just locked into that vendor's pricing; it is locked into the vendor's model of the world. The AI can only be as intelligent as the data it can see.

The real, transformative value of enterprise AI lies in its ability to act as an orchestration layer, connecting data and workflows across different systems to generate novel insights and automate complex, cross-functional processes. This requires a platform that is fundamentally open and tool-agnostic, designed to integrate with any data source or application, whether commercial or open-source. Such a platform is architected to maximize the enterprise's collective intelligence by connecting to its entire "brain," not just one lobe.
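One way to picture a tool-agnostic orchestration layer is a common connector interface that every data source implements, so a single query can fan out across systems instead of staying inside one vendor's silo. In the sketch below, the `Connector` protocol, the `InMemoryConnector` class, the `gather_context` function, and the source names are hypothetical stand-ins. Real connectors would call each system's own API and use proper retrieval rather than the naive keyword match shown here.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Document:
    source: str
    text: str


class Connector(Protocol):
    """Any data source (CRM, wiki, ticketing, warehouse) implements this."""

    name: str

    def search(self, query: str) -> List[Document]: ...


class InMemoryConnector:
    """Stand-in for a real system; holds a few documents in memory."""

    def __init__(self, name: str, texts: List[str]) -> None:
        self.name = name
        self._texts = texts

    def search(self, query: str) -> List[Document]:
        # Naive case-insensitive keyword match, for illustration only.
        q = query.lower()
        return [Document(self.name, t) for t in self._texts if q in t.lower()]


def gather_context(query: str, connectors: List[Connector]) -> List[Document]:
    """Fan the query out to every registered source and merge the results."""
    results: List[Document] = []
    for connector in connectors:
        results.extend(connector.search(query))
    return results


crm = InMemoryConnector("crm", ["Acme renewal is at risk", "Globex upsell closed"])
tickets = InMemoryConnector("tickets", ["Acme reported an outage last week"])
wiki = InMemoryConnector("wiki", ["Onboarding guide"])

for doc in gather_context("acme", [crm, tickets, wiki]):
    print(doc.source, "->", doc.text)
```

Because the orchestration layer depends only on the interface, adding a new system means writing one more connector rather than changing the layer itself.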

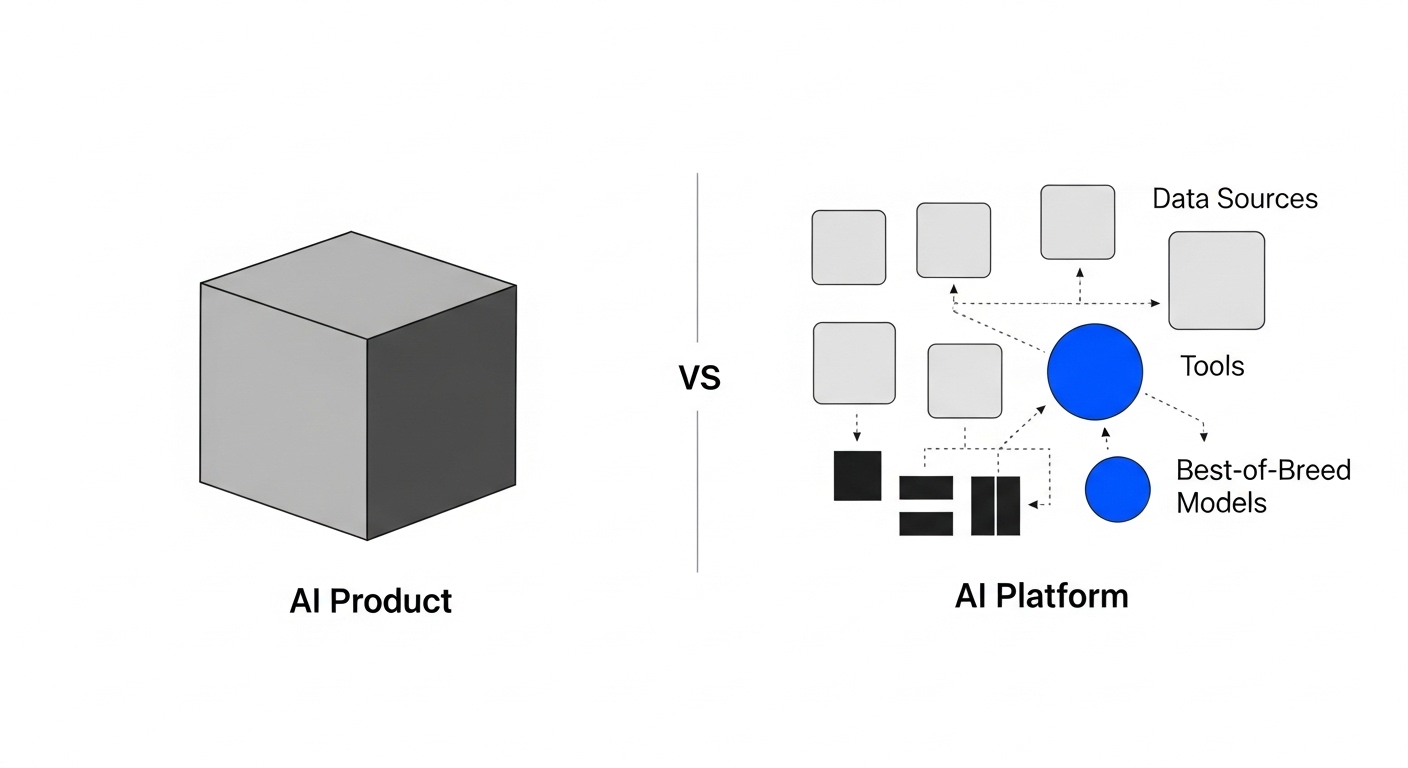

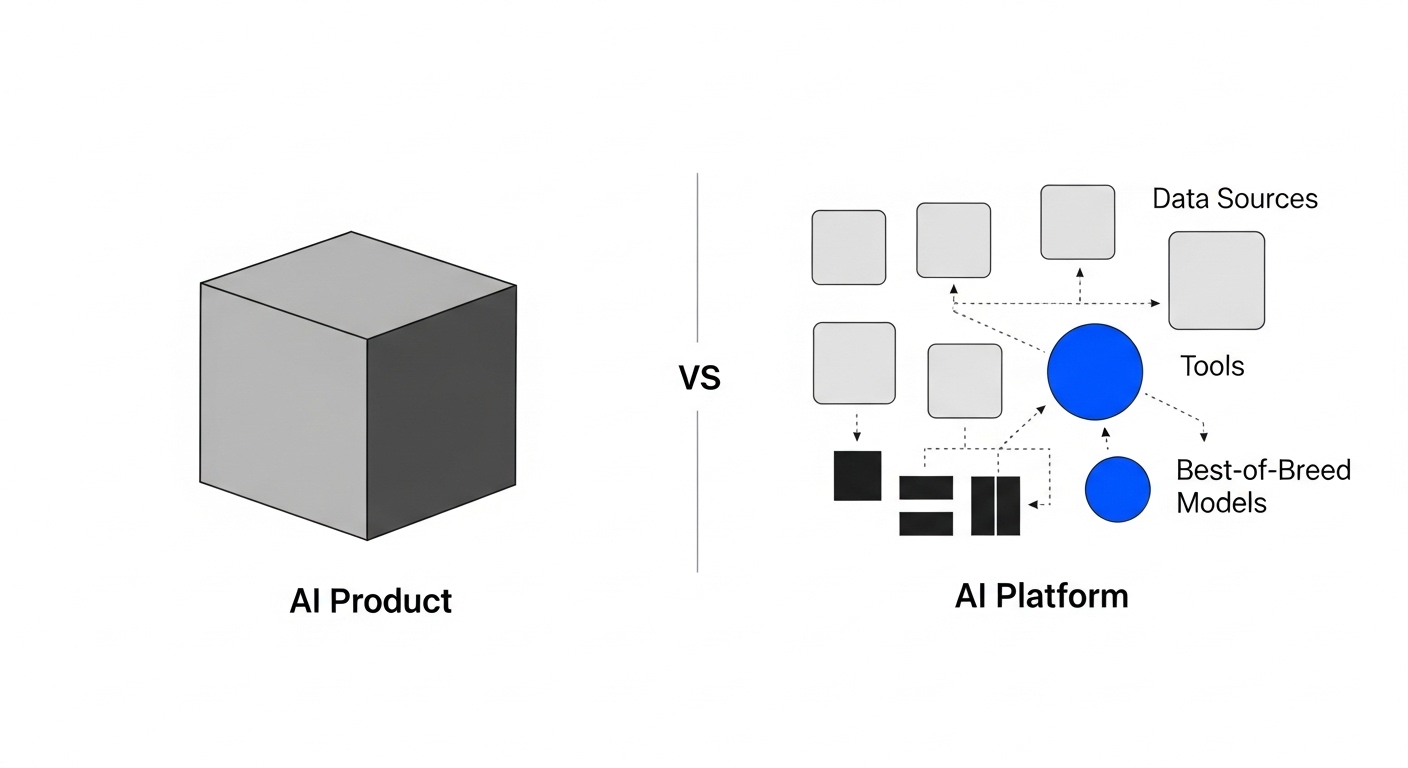

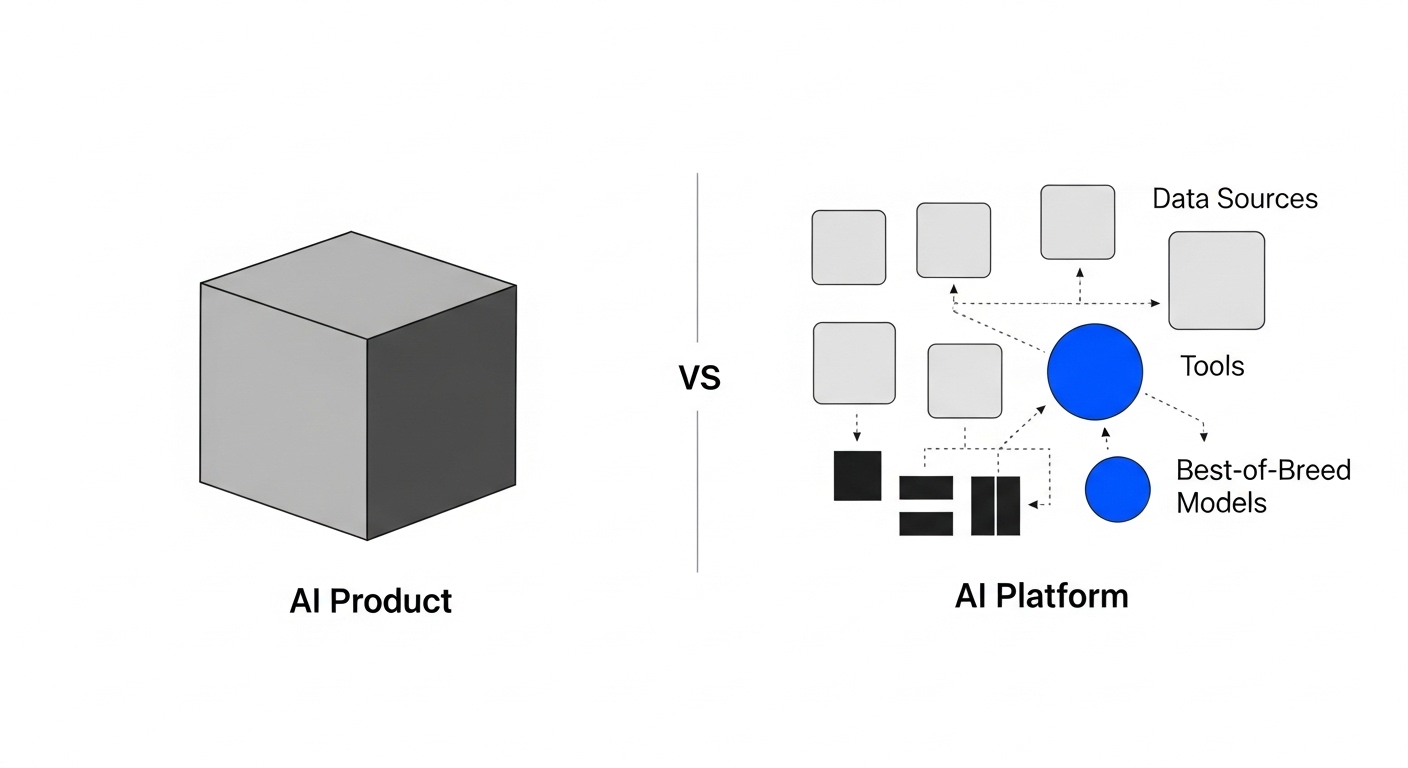

A critical distinction for technology leaders is that between an AI product and an AI platform. ChatGPT and Copilot are products: feature-rich applications designed for a predefined set of tasks. They are not, however, platforms upon which an enterprise can build its own unique, mission-critical AI applications. An organization cannot use Copilot to construct a custom AI agent for real-time fraud detection or a specialized system for dynamic supply chain optimization; these use cases fall outside its scope.

These products also enforce a closed model stack, typically relying on OpenAI's models delivered via Azure. This is a significant limitation in a field where new, potentially more efficient or specialized models—both commercial and open-source—emerge constantly. A closed platform prevents the enterprise from leveraging these innovations for cost or performance advantages. Furthermore, the most valuable enterprise AI solutions are often "compound applications" that chain together multiple models, tools, and data sources to automate a complex business workflow. This level of composition is impossible with a locked-down product.

Relying solely on off-the-shelf AI products is akin to outsourcing a future core competency. In the coming years, the ability to rapidly build and deploy custom AI solutions will be a primary driver of competitive differentiation. By becoming mere consumers of AI, enterprises cede this capability to their vendors, limiting themselves to the same generic features available to all their competitors. True, durable innovation requires an AI operating system—a foundational layer that allows developers to compose solutions, treating best-of-breed models and tools as modular components in a larger, enterprise-owned system. This is an investment in building an internal engine for innovation, rather than simply renting one.
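A compound application built on such a foundation can be sketched as a chain of interchangeable steps, where each step may be a different model, a tool call, or a data lookup. In this minimal sketch every step shares one signature, so replacing one model with another is a one-line change. The step functions, the `TICKETS` store, and the "small" and "large" model stubs are hypothetical placeholders, not calls to any real provider.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Step = Callable[[State], State]

# Hypothetical ticket store standing in for a real system of record.
TICKETS = {"T-1": "I was charged twice on my last invoice."}


def fetch_ticket(state: State) -> State:
    """Data step: look up the ticket text from a system of record."""
    return {**state, "text": TICKETS[state["ticket_id"]]}


def classify_small_model(state: State) -> State:
    """Model step: stand-in for a small, inexpensive classifier."""
    label = "billing" if "invoice" in state["text"].lower() else "technical"
    return {**state, "category": label}


def draft_reply_large_model(state: State) -> State:
    """Model step: stand-in for a larger model that drafts a reply."""
    reply = f"[{state['category']}] We are looking into: {state['text']}"
    return {**state, "reply": reply}


def run_pipeline(steps: List[Step], state: State) -> State:
    """Run each step in order, passing the accumulated state along."""
    for step in steps:
        state = step(state)
    return state


result = run_pipeline(
    [fetch_ticket, classify_small_model, draft_reply_large_model],
    {"ticket_id": "T-1"},
)
print(result["category"])  # billing
print(result["reply"])
```

Each step returns a new state dictionary instead of mutating the old one, which keeps steps independent and easy to test or swap in isolation.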

The path from a promising AI pilot to a production-grade system is littered with failed initiatives. Industry analyses consistently report that a large share of AI proofs-of-concept (POCs) never reach production. Gartner predicts that at least 30% of generative AI projects will be abandoned after the POC stage by the end of 2025, and other studies put the failure rate as high as 88%.

Pilots fail because they are born in a sterile lab, not the chaotic real world. They succeed under ideal conditions—using clean, curated data, with a tightly defined scope, and free from the complexities of integration with messy legacy systems. Demos built with generic tools like ChatGPT are prime examples of this "sandbox illusion." They look impressive but are not architected to withstand the pressures of production, which include fragmented data, inadequate infrastructure, evolving business needs, and the lack of robust Machine Learning Operations (MLOps) for monitoring, retraining, and governance. Many pilots are also "technology in search of a problem," demonstrating technical feasibility without being tied to a clear business problem or measurable ROI, making it impossible to justify the significant investment required for scaling.

This high failure rate suggests that the "fail fast" mantra of agile software development is misapplied to enterprise AI. A failing AI pilot does not just represent wasted development time; it erodes organizational trust in AI as a whole. A model that produces biased or incorrect results in production can cause real financial and reputational harm. The foundational work for a production AI system—secure infrastructure, data governance, and operational resilience—cannot be "iterated" into existence from a simple demo. The system must be built to last from day one, requiring a strategic partner and a platform architected for production, not just for a flashy but fragile pilot.
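Part of the operational resilience a demo lacks is continuous monitoring of output quality. As a minimal illustration, assuming each response can be scored as acceptable or not (for example through user feedback or reviewer spot checks), a sliding window can flag when quality drops below a threshold so that a review or retraining can be triggered. The `QualityMonitor` class, the window size, and the threshold are hypothetical choices, not a standard MLOps API.

```python
from collections import deque


class QualityMonitor:
    """Tracks the share of acceptable responses over a sliding window."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.threshold = threshold

    def record(self, acceptable: bool) -> None:
        self.results.append(acceptable)

    def acceptance_rate(self) -> float:
        if not self.results:
            return 1.0  # no evidence yet; treat as healthy
        return sum(self.results) / len(self.results)

    def needs_attention(self) -> bool:
        """True once the window is full and quality has fallen below the threshold."""
        return (len(self.results) == self.results.maxlen
                and self.acceptance_rate() < self.threshold)


monitor = QualityMonitor(window=10, threshold=0.8)
for ok in [True] * 7 + [False] * 3:
    monitor.record(ok)
print(monitor.acceptance_rate())  # 0.7
print(monitor.needs_attention())  # True
```

Waiting for a full window before alerting trades a slower first alert for fewer false alarms on small samples.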

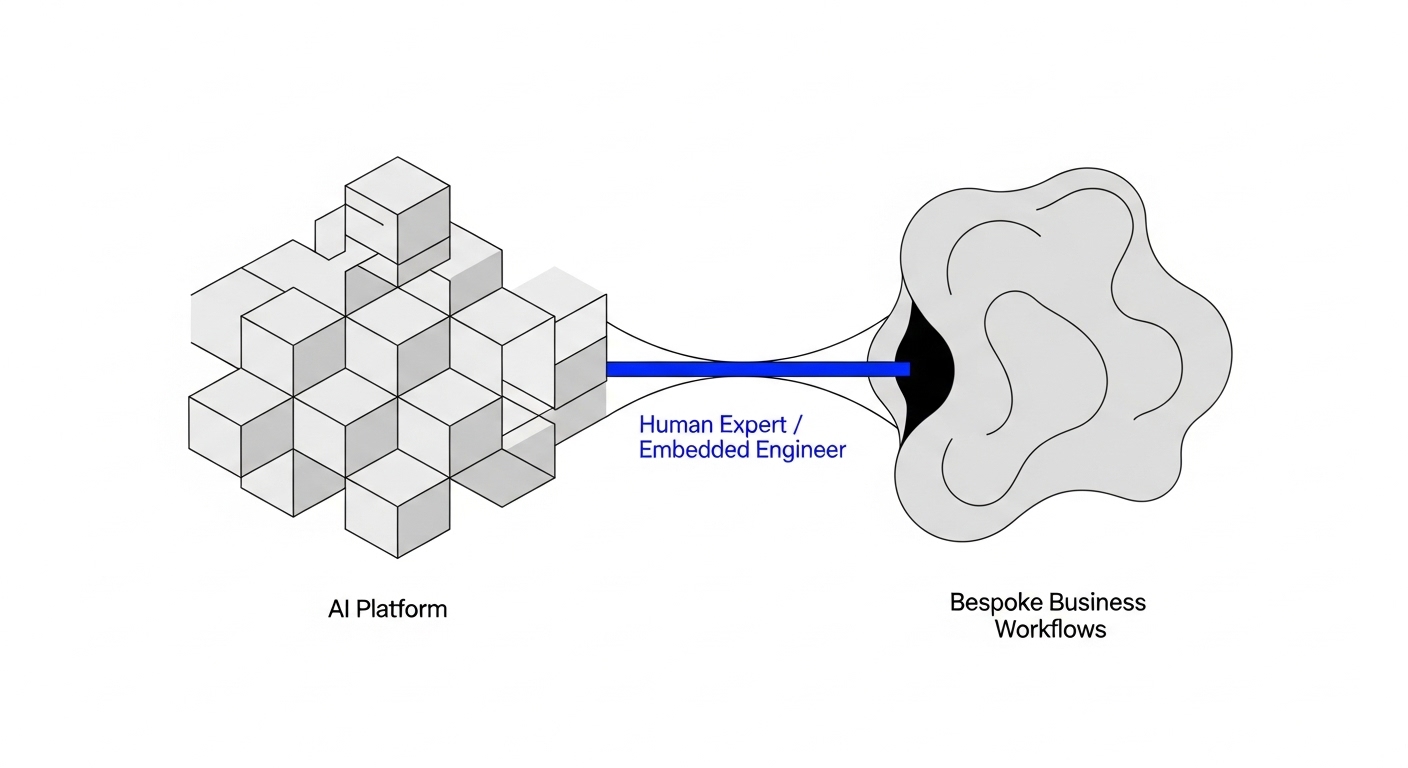

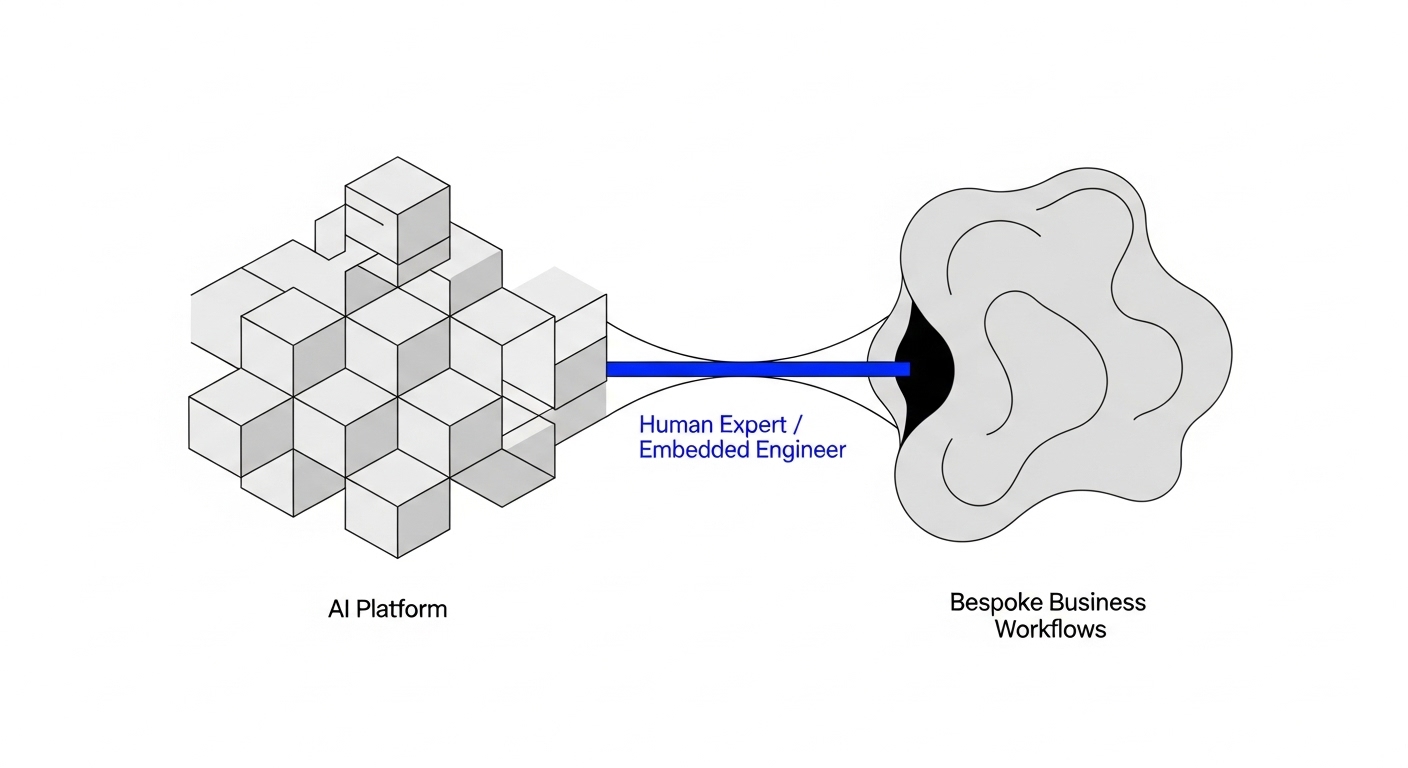

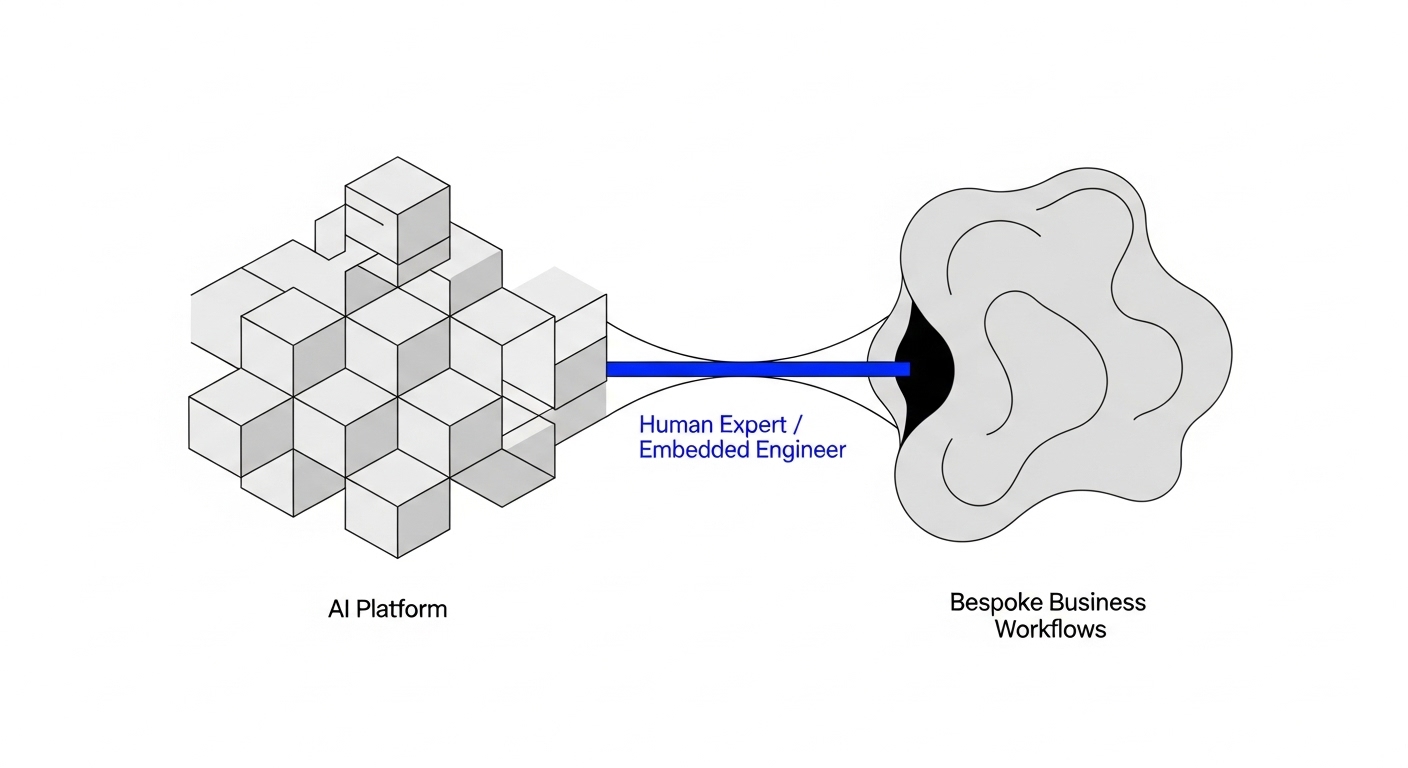

Off-the-shelf AI tools, by their very nature, cannot navigate the unique, complex, and often undocumented "last mile" of enterprise integration. This final, critical step involves connecting to legacy systems, handling idiosyncratic data formats, and embedding AI capabilities into bespoke business workflows that define how a company operates. This is not a problem that can be solved with a generic API.

This challenge is compounded by the "context chasm." An external tool or a traditional consulting engagement often lacks deep, nuanced understanding of the business's specific problems. Handoffs between business stakeholders, data scientists, and engineers inevitably lead to lost context, resulting in solutions that are technically correct but practically useless in the hands of the end-user. Overcoming this last-mile challenge requires more than just a tool; it requires expert humans. It demands a human-in-the-loop approach where skilled engineers work inside the enterprise's environment, alongside its teams, to co-develop and productionize solutions. These experts bridge the gap between the AI model and the business process, ensuring the final system is not just deployed but deeply integrated and adopted.

This reveals a crucial truth about enterprise AI: for complex, high-value problems, the most effective "interface" is not a dashboard or an API, but a human expert. This embedded engineer acts as a translator, an architect, and a collaborative partner. This "human API" is what transforms a powerful platform from a set of technical capabilities into a solved business problem, ensuring that the AI initiative survives the perilous journey from concept to production and delivers lasting value.

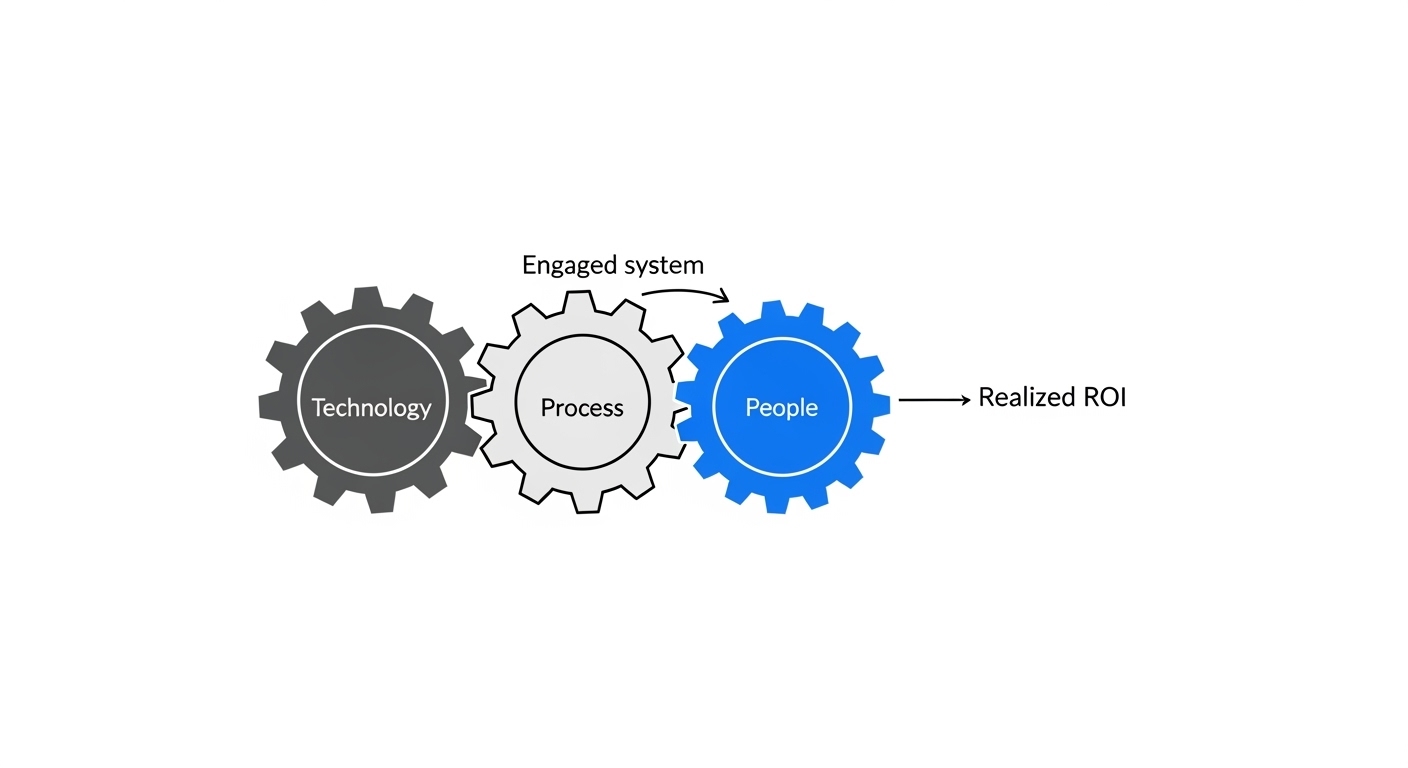

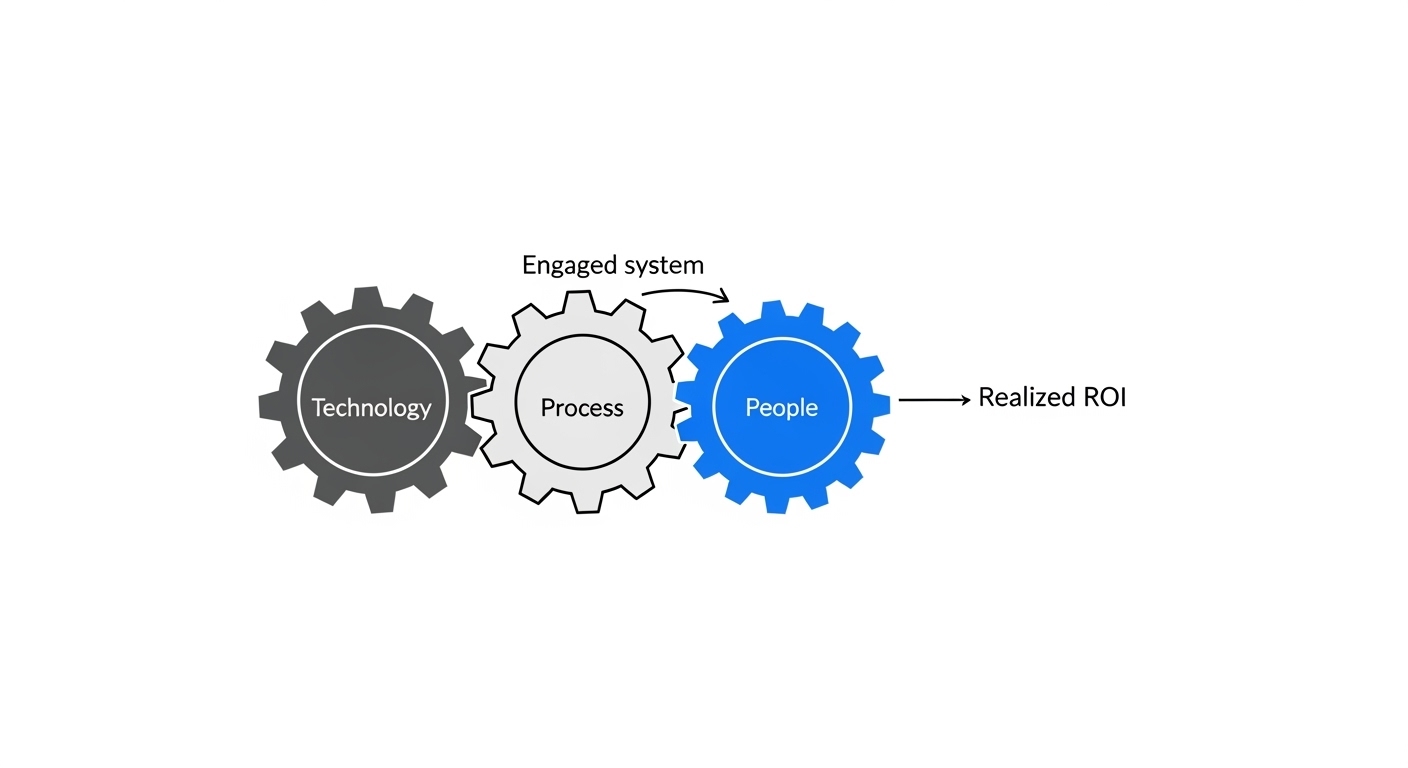

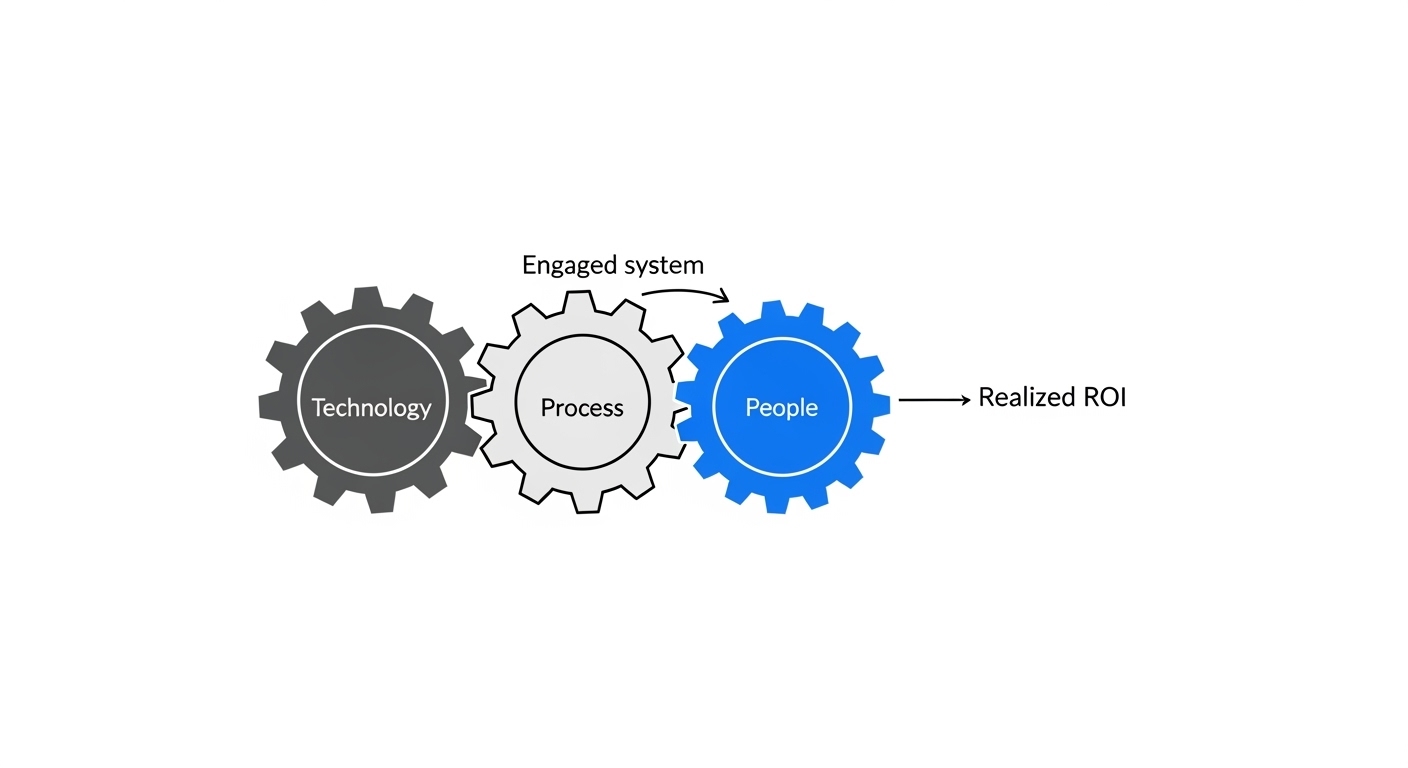

True business transformation from AI is not a technology problem; it is a people and process problem. Deploying a new tool, no matter how powerful, rarely leads to meaningful change. If the solution does not fit into existing workflows, or if users do not trust its outputs, it will be abandoned. This is why successful AI adoption requires significant, deliberate investment in change management, training, and upskilling.

Trust is the ultimate currency of AI adoption. Users are far more likely to trust and embrace a system they had a hand in shaping. A top-down deployment of a black-box tool from an external vendor breeds suspicion and resistance. In contrast, a collaborative development process, where end-users work directly with embedded engineers to define the problem and validate the solution, builds a sense of ownership and advocacy from the ground up. This human-centric approach is critical for moving beyond simple task automation toward the ultimate goal of "superagency"—a state where humans, empowered by AI, can achieve new levels of creativity, productivity, and strategic impact.

Technology leaders often calculate AI ROI based on a model's technical potential, such as its ability to automate a certain percentage of a task. However, the value actually realized is the technical potential multiplied by the adoption rate. If only 10% of employees use the tool, the organization captures only 10% of the potential value. Since adoption is driven by trust and perceived utility, the collaborative, human-in-the-loop model of co-creation is the most direct and effective path to maximizing financial returns. The "soft" work of human collaboration is, in fact, the hardest driver of financial success.
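The adoption argument is simple arithmetic. The sketch below restates the paragraph's model, in which captured value is technical potential multiplied by the adoption rate, using purely illustrative figures. The `realized_value` function is hypothetical, and it deliberately ignores costs, ramp-up time, and the fact that usage is rarely uniform across employees.

```python
def realized_value(potential_value: float, adoption_rate: float) -> float:
    """Value actually captured = technical potential x share of employees using the tool."""
    if not 0.0 <= adoption_rate <= 1.0:
        raise ValueError("adoption_rate must be between 0 and 1")
    return potential_value * adoption_rate


# Illustrative only: a tool with 1,000,000 (currency units) of potential annual value.
print(realized_value(1_000_000, 0.10))
print(realized_value(1_000_000, 0.60))
```

Raising adoption from 10% to 60% multiplies captured value sixfold without any change to the underlying model, which is the paragraph's point about where the returns come from.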

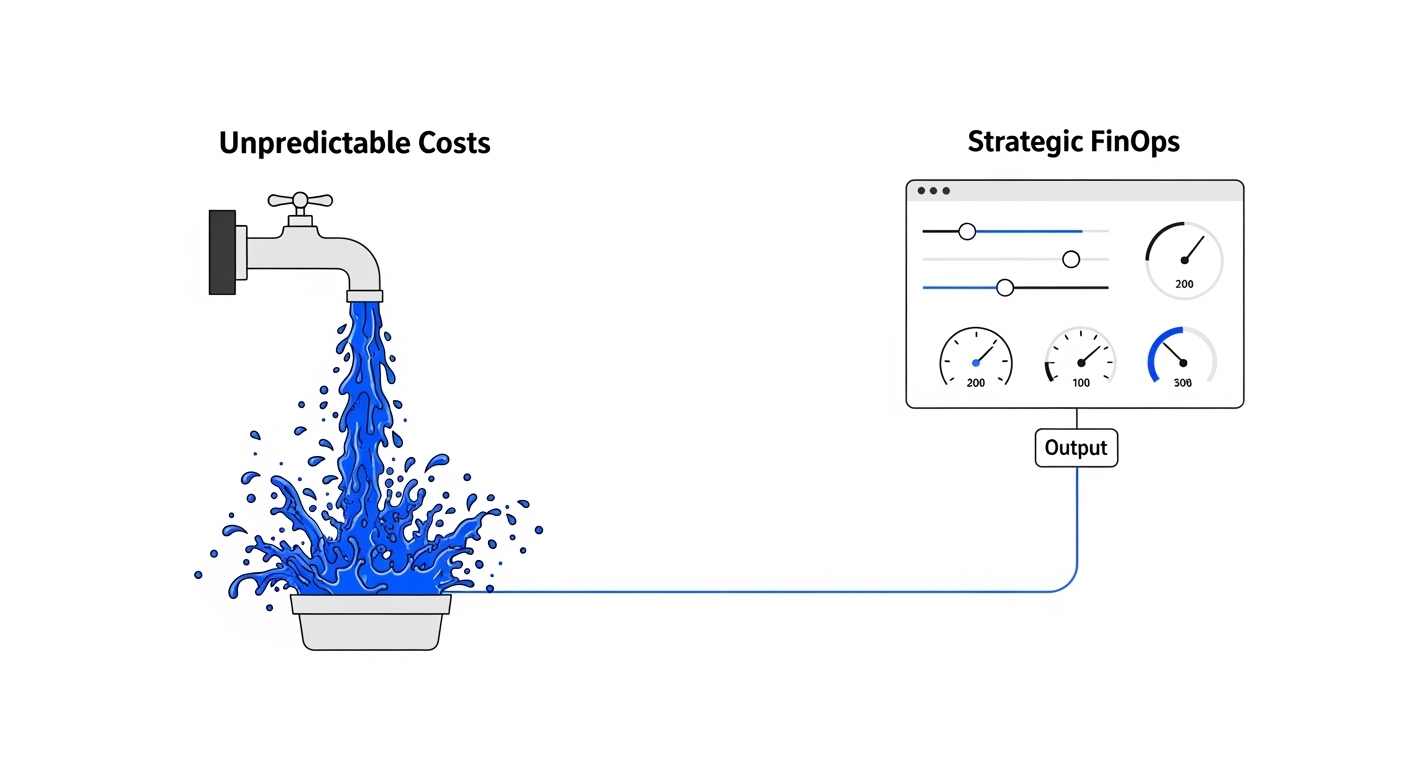

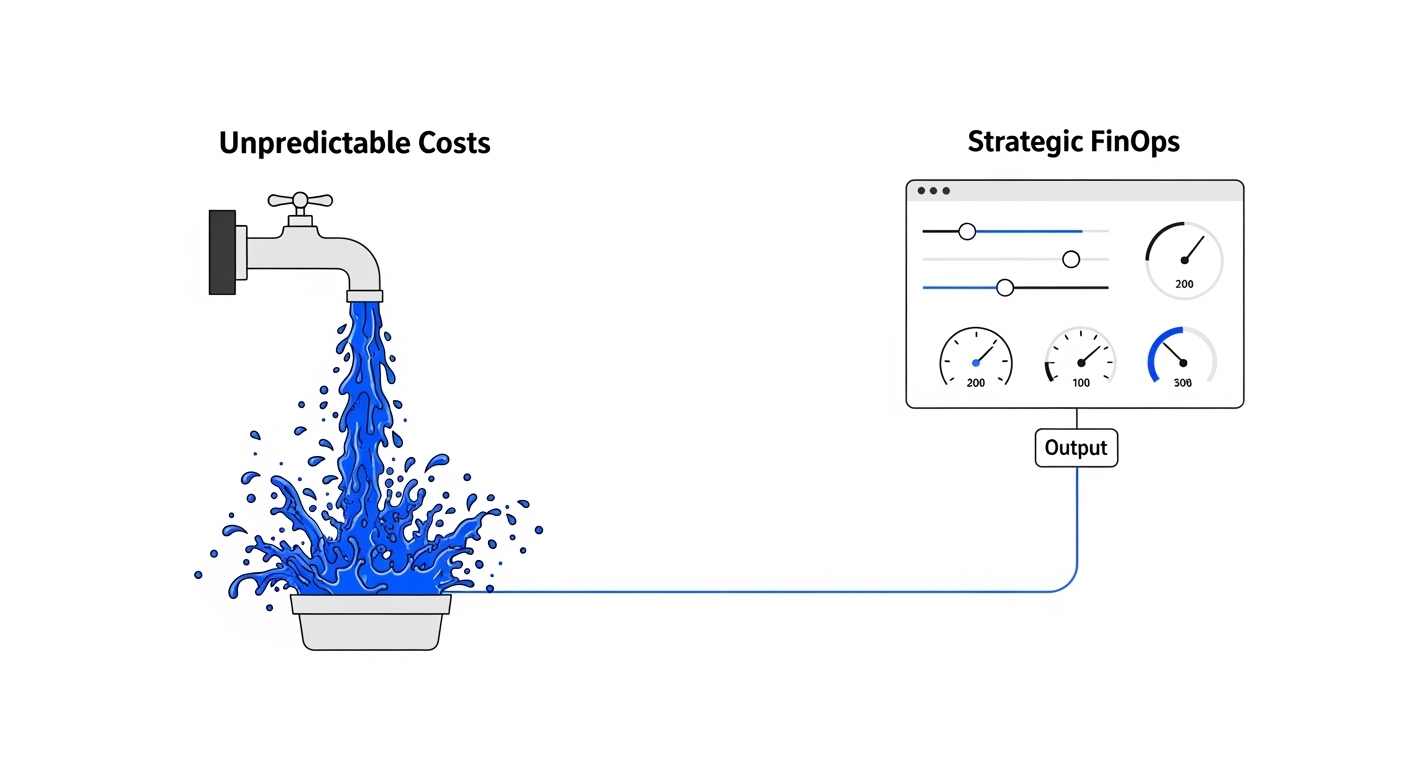

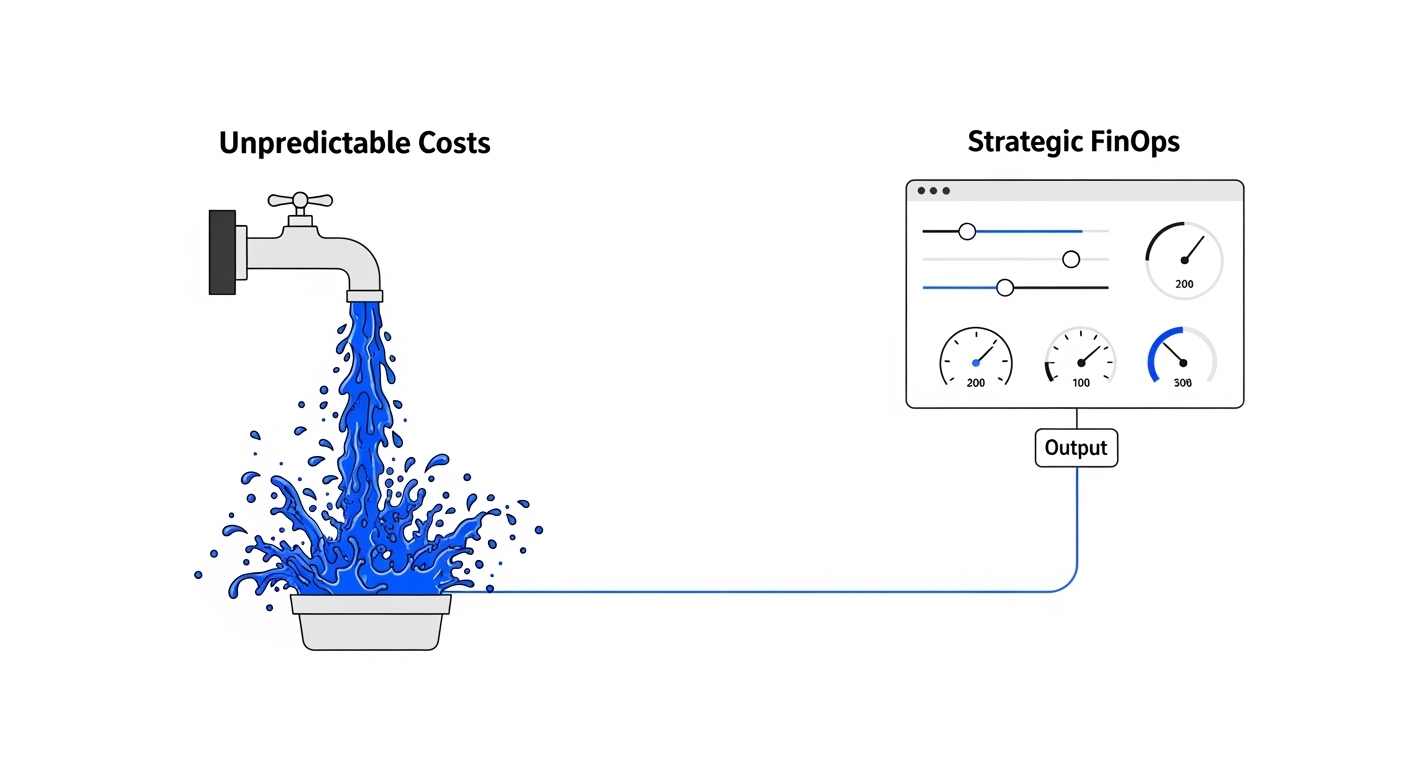

While public tools may appear inexpensive, they introduce significant financial uncertainty and obscure the true return on investment. For scaled usage via APIs, inference costs can spiral unpredictably, leading to "bill shock". Fixed per-seat licenses for tools like Copilot can become a substantial line item, but it is exceedingly difficult to measure whether the productivity gains justify the expense across the entire user base.

These products offer little to no granular control or observability over costs. A technology leader cannot easily track cost-per-query, attribute spending to specific business units, or implement budget controls, making it nearly impossible to manage an AI-related P&L. Furthermore, being locked into a single vendor's model means being subject to that vendor's pricing. If a smaller, fine-tuned open-source model could perform a specific task for a fraction of the cost, a closed platform provides no way to capitalize on that efficiency. This is analogous to the early days of cloud computing, where decentralized resource creation led to massive, unexpected bills and gave rise to the entire discipline of FinOps. Generative AI is creating the same pattern on an accelerated timeline.
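The cost observability described above can be sketched as a small ledger that prices each query from its token counts, attributes the spend to a business unit, and refuses a request once that unit's monthly budget would be exceeded. The `CostLedger` class, the per-token prices, the model names, and the budget figures are all hypothetical; real prices vary by provider and model.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class CostLedger:
    # Hypothetical prices in USD per 1,000 tokens: (input, output).
    prices: Dict[str, Tuple[float, float]]
    # Hypothetical monthly budget per business unit, in USD.
    budgets: Dict[str, float]
    # Running spend per business unit; starts at zero for every unit.
    spend: Dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def cost_of(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Price a single query from its token counts."""
        price_in, price_out = self.prices[model]
        return (input_tokens * price_in + output_tokens * price_out) / 1000

    def charge(self, unit: str, model: str, input_tokens: int, output_tokens: int) -> float:
        """Attribute a query's cost to a unit, refusing it if the budget would be exceeded."""
        cost = self.cost_of(model, input_tokens, output_tokens)
        if self.spend[unit] + cost > self.budgets[unit]:
            raise RuntimeError(f"Monthly AI budget exceeded for {unit!r}")
        self.spend[unit] += cost
        return cost

    def remaining(self, unit: str) -> float:
        """Budget left for a unit this month."""
        return self.budgets[unit] - self.spend[unit]


ledger = CostLedger(
    prices={"large-model": (0.01, 0.03), "small-model": (0.0005, 0.0015)},
    budgets={"finance": 50.0, "support": 5.0},
)
ledger.charge("finance", "large-model", input_tokens=2000, output_tokens=500)
ledger.charge("support", "small-model", input_tokens=4000, output_tokens=1000)
print({unit: round(cost, 4) for unit, cost in ledger.spend.items()})
```

Checking the budget before recording the charge means a refused request leaves the ledger unchanged, so the per-unit totals always reflect work that was actually allowed to run.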

A mature enterprise AI strategy requires a platform that serves as a financial control plane. It must provide detailed observability into costs and usage and, more importantly, the flexibility to route different workloads to the most appropriate and cost-effective model—a concept known as a "model router". This open, agnostic approach is the only way to manage AI costs strategically, prevent AI-driven bill shock, and ensure a defensible, predictable ROI.
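A model router can be as simple as a rule table that sends each workload to the cheapest model judged capable of it. In the sketch below, the model names, capability tiers, per-query costs, and the `REQUIRED_TIER` mapping are hypothetical; production routers may instead use a learned classifier or measured quality scores. Keeping sensitive work on in-VPC models and sending unknown task types to the most capable tier are two possible design choices, not requirements.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Model:
    name: str
    tier: int              # higher = more capable (hypothetical scale)
    cost_per_query: float  # hypothetical average cost in USD
    in_vpc: bool


MODELS: List[Model] = [
    Model("small-open-model", tier=1, cost_per_query=0.0004, in_vpc=True),
    Model("mid-model", tier=2, cost_per_query=0.004, in_vpc=True),
    Model("frontier-model", tier=3, cost_per_query=0.03, in_vpc=False),
]

# Hypothetical mapping from workload type to the minimum tier it needs.
REQUIRED_TIER = {"classification": 1, "summarization": 2, "complex_reasoning": 3}


def route(task: str, sensitive: bool) -> Model:
    """Pick the cheapest eligible model; sensitive work stays on in-VPC models."""
    needed = REQUIRED_TIER.get(task, 3)  # unknown tasks go to the most capable tier
    candidates = [m for m in MODELS
                  if m.tier >= needed and (m.in_vpc or not sensitive)]
    if not candidates:
        raise LookupError(f"No eligible model for {task!r} (sensitive={sensitive})")
    return min(candidates, key=lambda m: m.cost_per_query)


print(route("classification", sensitive=False).name)     # small-open-model
print(route("summarization", sensitive=True).name)       # mid-model
print(route("complex_reasoning", sensitive=False).name)  # frontier-model
```

Raising an error when no model qualifies, rather than silently falling back to an external endpoint, keeps the cost decision from overriding the data-sovereignty constraint.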

The adoption of generic AI assistants like ChatGPT and Copilot is a tactical response to a strategic challenge. While they play a valuable role in familiarizing the workforce with the potential of AI, they are not a foundation upon which a lasting competitive advantage can be built. The seven risks outlined above (to data sovereignty, ecosystem integration, innovation capacity, production readiness, last-mile integration, organizational adoption, and financial control) are the predictable failure modes for enterprises that mistake a consumer-grade tool for an enterprise-grade strategy.

The path forward requires a deliberate architectural choice. Technology leaders must decide whether to rent generic capabilities or to build a core, strategic competency. The table below summarizes this choice.

The vision of a true Enterprise AI Operating System is one of a secure, open, and collaborative foundation. It is a system that does not force a choice between innovation and security, or between speed and scalability. It is an architecture that empowers the enterprise to not just use AI, but to master it—turning the immense potential of this technology into a durable, defensible, and uniquely valuable capability.

.jpg)

The viral, bottom-up adoption of generative AI assistants like ChatGPT and Microsoft Copilot is undeniable and, on the surface, a clear victory for productivity. Employees are leveraging these tools to draft emails, summarize documents, and generate code snippets with unprecedented speed, creating immense internal pressure on technology leaders to deploy them at scale. The appeal is tangible; the efficiency gains for individual tasks are immediate and compelling.

This creates a profound paradox for the modern technology executive. The very tools that empower individuals can introduce systemic risk and strategic debt to the enterprise as a whole. The frictionless experience for an employee masks a web of complex security, compliance, and integration challenges for the organization. The ease of use that drives adoption also sets a dangerously misleading precedent. Employees and even business leaders come to perceive AI as a kind of magic—instant, free or low-cost, and requiring no foundational work. When technology leaders then propose a more robust, strategic approach that requires investment in data governance, secure infrastructure, and operational discipline, it can be perceived as bureaucratic and slow. The tactical win for employee productivity thus creates a cultural and political headwind against building a durable, long-term AI capability.

The strategic question, therefore, is not if the enterprise should use AI, but how. How do we mature from scattered pockets of AI-driven efficiency to a secure, scalable, and integrated enterprise AI foundation that becomes a true competitive moat? This requires looking past the immediate allure of generic assistants and confronting the seven critical gaps they leave open.

The most significant and immediate risk posed by public AI tools is the loss of control over an organization's most valuable asset: its data. When employees input sensitive information—unannounced financial data, proprietary source code, customer personally identifiable information (PII), or confidential legal strategies—into a third-party-hosted AI tool, that data leaves the secure perimeter of the enterprise. This act fundamentally compromises the principle of data sovereignty.

These tools often operate as a black box, providing little to no transparency into how input data is stored, who has access to it, or whether it is used to train the vendor's future models. This creates a direct and unacceptable risk of data leakage, where a company's confidential information could be inadvertently regurgitated in a response to another user, who could be a competitor. This lack of control creates a compliance nightmare. Regulations like the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and the California Consumer Privacy Act (CCPA) impose strict requirements on data processing, residency, and consent. Using a public AI tool for regulated data can lead to severe financial penalties, loss of customer trust, and lasting reputational damage, as the burden of compliance remains with the enterprise even when control is abdicated to a vendor.

This reality exposes a deep philosophical conflict between the operational model of public AI and the security posture of the modern enterprise. Today's security architectures are built on a "zero-trust" model: never trust, always verify, and assume breach. Access is granular, monitored, and meticulously controlled. Public AI tools, conversely, operate on a model of "implicit trust," forcing a Chief Information Security Officer (CISO) to trust a vendor's opaque security promises and multi-tenant architecture with their most sensitive data. This is not a technical gap; it is a fundamental misalignment of security principles. The only truly defensible posture is to bring AI capabilities to the data, not the other way around. This makes an architecture where models and applications run within the enterprise's own secure in-VPC (Virtual Private Cloud) deployment a non-negotiable prerequisite for any serious AI initiative, as it extends the zero-trust perimeter to encompass AI workloads, rather than contradicting it.

Microsoft Copilot is marketed on its deep integration within the Microsoft 365 suite, offering powerful features for users of Word, Excel, and Teams. While this provides a seamless experience for tasks confined to that ecosystem, it creates a strategic vulnerability for the enterprise: a walled garden that isolates intelligence.

The modern enterprise is a heterogeneous environment. Mission-critical data does not live solely in Microsoft products; it resides in Salesforce, Confluence, Jira, Slack, SAP, Snowflake, and countless bespoke internal applications. A tool that cannot natively access and reason over these disparate data sources cannot provide true enterprise-wide intelligence. It can only optimize tasks within its own silo, reinforcing the very information barriers that technology leaders have spent decades trying to dismantle. This limitation reframes the concept of vendor lock-in. Historically, lock-in was a financial and operational concern centered on high switching costs. In the AI era, it becomes a strategic constraint on intelligence itself. By tethering an AI strategy to a single vendor's ecosystem, an organization is not just locked into their pricing; it is locked into their model of the world. The AI can only be as intelligent as the data it can see.

The real, transformative value of enterprise AI lies in its ability to act as an orchestration layer, connecting data and workflows across different systems to generate novel insights and automate complex, cross-functional processes. This requires a platform that is fundamentally open and tool-agnostic, designed to integrate with any data source or application, whether commercial or open-source. Such a platform is architected to maximize the enterprise's collective intelligence by connecting to its entire "brain," not just one lobe.

A critical distinction for technology leaders is that between an AI product and an AI platform. ChatGPT and Copilot are products: feature-rich applications designed for a predefined set of tasks. They are not, however, platforms upon which an enterprise can build its own unique, mission-critical AI applications. An organization cannot use Copilot to construct a custom AI agent for real-time fraud detection or a specialized system for dynamic supply chain optimization; these use cases fall outside its scope.

These products also enforce a closed model stack, typically relying on OpenAI's models delivered via Azure. This is a significant limitation in a field where new, potentially more efficient or specialized models—both commercial and open-source—emerge constantly. A closed platform prevents the enterprise from leveraging these innovations for cost or performance advantages. Furthermore, the most valuable enterprise AI solutions are often "compound applications" that chain together multiple models, tools, and data sources to automate a complex business workflow. This level of composition is impossible with a locked-down product.

Relying solely on off-the-shelf AI products is akin to outsourcing a future core competency. In the coming years, the ability to rapidly build and deploy custom AI solutions will be a primary driver of competitive differentiation. By becoming mere consumers of AI, enterprises cede this capability to their vendors, limiting themselves to the same generic features available to all their competitors. True, durable innovation requires an AI operating system—a foundational layer that allows developers to compose solutions, treating best-of-breed models and tools as modular components in a larger, enterprise-owned system. This is an investment in building an internal engine for innovation, rather than simply renting one.

The path from a promising AI pilot to a production-grade system is a graveyard of failed initiatives. Industry analysis consistently shows that a vast majority of AI proofs-of-concept (POCs) never reach production. Gartner predicts that at least 30% of generative AI projects will be abandoned after the POC stage, with other studies suggesting the failure rate is as high as 88%.

Pilots fail because they are born in a sterile lab, not the chaotic real world. They succeed under ideal conditions—using clean, curated data, with a tightly defined scope, and free from the complexities of integration with messy legacy systems. Demos built with generic tools like ChatGPT are prime examples of this "sandbox illusion." They look impressive but are not architected to withstand the pressures of production, which include fragmented data, inadequate infrastructure, evolving business needs, and the lack of robust Machine Learning Operations (MLOps) for monitoring, retraining, and governance. Many pilots are also "technology in search of a problem," demonstrating technical feasibility without being tied to a clear business problem or measurable ROI, making it impossible to justify the significant investment required for scaling.

This high failure rate suggests that the "fail fast" mantra of agile software development is misapplied to enterprise AI. A failing AI pilot does not just represent wasted development time; it erodes organizational trust in AI as a whole. A model that produces biased or incorrect results in production can cause real financial and reputational harm. The foundational work for a production AI system—secure infrastructure, data governance, and operational resilience—cannot be "iterated" into existence from a simple demo. The system must be built to last from day one, requiring a strategic partner and a platform architected for production, not just for a flashy but fragile pilot.

Off-the-shelf AI tools, by their very nature, cannot navigate the unique, complex, and often undocumented "last mile" of enterprise integration. This final, critical step involves connecting to legacy systems, handling idiosyncratic data formats, and embedding AI capabilities into bespoke business workflows that define how a company operates. This is not a problem that can be solved with a generic API.

This challenge is compounded by the "context chasm." An external tool or a traditional consulting engagement often lacks deep, nuanced understanding of the business's specific problems. Handoffs between business stakeholders, data scientists, and engineers inevitably lead to lost context, resulting in solutions that are technically correct but practically useless in the hands of the end-user. Overcoming this last-mile challenge requires more than just a tool; it requires expert humans. It demands a human-in-the-loop approach where skilled engineers work inside the enterprise's environment, alongside its teams, to co-develop and productionize solutions. These experts bridge the gap between the AI model and the business process, ensuring the final system is not just deployed but deeply integrated and adopted.

This reveals a crucial truth about enterprise AI: for complex, high-value problems, the most effective "interface" is not a dashboard or an API, but a human expert. This embedded engineer acts as a translator, an architect, and a collaborative partner. This "human API" is what transforms a powerful platform from a set of technical capabilities into a solved business problem, ensuring that the AI initiative survives the perilous journey from concept to production and delivers lasting value.

True business transformation from AI is not a technology problem; it is a people and process problem. Deploying a new tool, no matter how powerful, rarely leads to meaningful change. If the solution does not fit into existing workflows, or if users do not trust its outputs, it will be abandoned. This is why successful AI adoption requires significant, deliberate investment in change management, training, and upskilling.

Trust is the ultimate currency of AI adoption. Users are far more likely to trust and embrace a system they had a hand in shaping. A top-down deployment of a black-box tool from an external vendor breeds suspicion and resistance. In contrast, a collaborative development process, where end-users work directly with embedded engineers to define the problem and validate the solution, builds a sense of ownership and advocacy from the ground up. This human-centric approach is critical for moving beyond simple task automation toward the ultimate goal of "superagency"—a state where humans, empowered by AI, can achieve new levels of creativity, productivity, and strategic impact.

Technology leaders often calculate AI ROI based on a model's technical potential, such as its ability to automate a certain percentage of a task. However, the realized ROI is the technical potential multiplied by the adoption rate. If only 10% of employees use the tool, the organization only captures 10% of the potential value. Since adoption is driven by trust and perceived utility, the collaborative, human-in-the-loop model of co-creation is the most direct and effective path to maximizing financial returns. The "soft" work of human collaboration is, in fact, the hardest driver of financial success.

While public tools may appear inexpensive, they introduce significant financial uncertainty and obscure the true return on investment. For scaled usage via APIs, inference costs can spiral unpredictably, leading to "bill shock". Fixed per-seat licenses for tools like Copilot can become a substantial line item, but it is exceedingly difficult to measure whether the productivity gains justify the expense across the entire user base.

These products offer little to no granular control or observability over costs. A technology leader cannot easily track cost-per-query, attribute spending to specific business units, or implement budget controls, making it nearly impossible to manage an AI-related P&L. Furthermore, being locked into a single vendor's model means being subject to their pricing. If a smaller, fine-tuned open-source model could perform a specific task for a fraction of the cost, a closed platform provides no way to capitalize on that efficiency. This is analogous to the early days of cloud computing, where decentralized resource creation led to massive, unexpected bills and gave rise to the entire discipline of FinOps. Generative AI is creating the same pattern on an accelerated timeline.

A mature enterprise AI strategy requires a platform that serves as a financial control plane. It must provide detailed observability into costs and usage and, more importantly, the flexibility to route different workloads to the most appropriate and cost-effective model—a concept known as a "model router". This open, agnostic approach is the only way to manage AI costs strategically, prevent AI-driven bill shock, and ensure a defensible, predictable ROI.

The adoption of generic AI assistants like ChatGPT and Copilot is a tactical response to a strategic challenge. While they play a valuable role in familiarizing the workforce with the potential of AI, they are not a foundation upon which a lasting competitive advantage can be built. The seven risks outlined—to data sovereignty, ecosystem integration, innovation capacity, production readiness, organizational adoption, and financial control—are the predictable failure modes for enterprises that mistake a consumer-grade tool for an enterprise-grade strategy.

The path forward requires a deliberate architectural choice. Technology leaders must decide whether to rent generic capabilities or to build a core, strategic competency. The table below summarizes this choice.

The vision of a true Enterprise AI Operating System is one of a secure, open, and collaborative foundation. It is a system that does not force a choice between innovation and security, or between speed and scalability. It is an architecture that empowers the enterprise not merely to use AI but to master it, turning the immense potential of this technology into a durable, defensible, and uniquely valuable capability.
